About the AI Explorer

Why?

The Harvard Art Museums is using computer vision and AI for two primary reasons. The first is to categorize, tag, describe, and annotate its collection of art in ways that the staff of curators don't. Since the computer lacks any context or formal training in art history, the machine views and annotates our collection as if walking into an art museum for the first time. The perspective offered by AI leans closer to reflecting the public rather than experts. Currently, the Harvard Art Museums’ search interface relies on descriptions written by art historians. The addition of AI-generated annotations makes the Harvard Art Museums’ art collection more accessible to non-specialists.

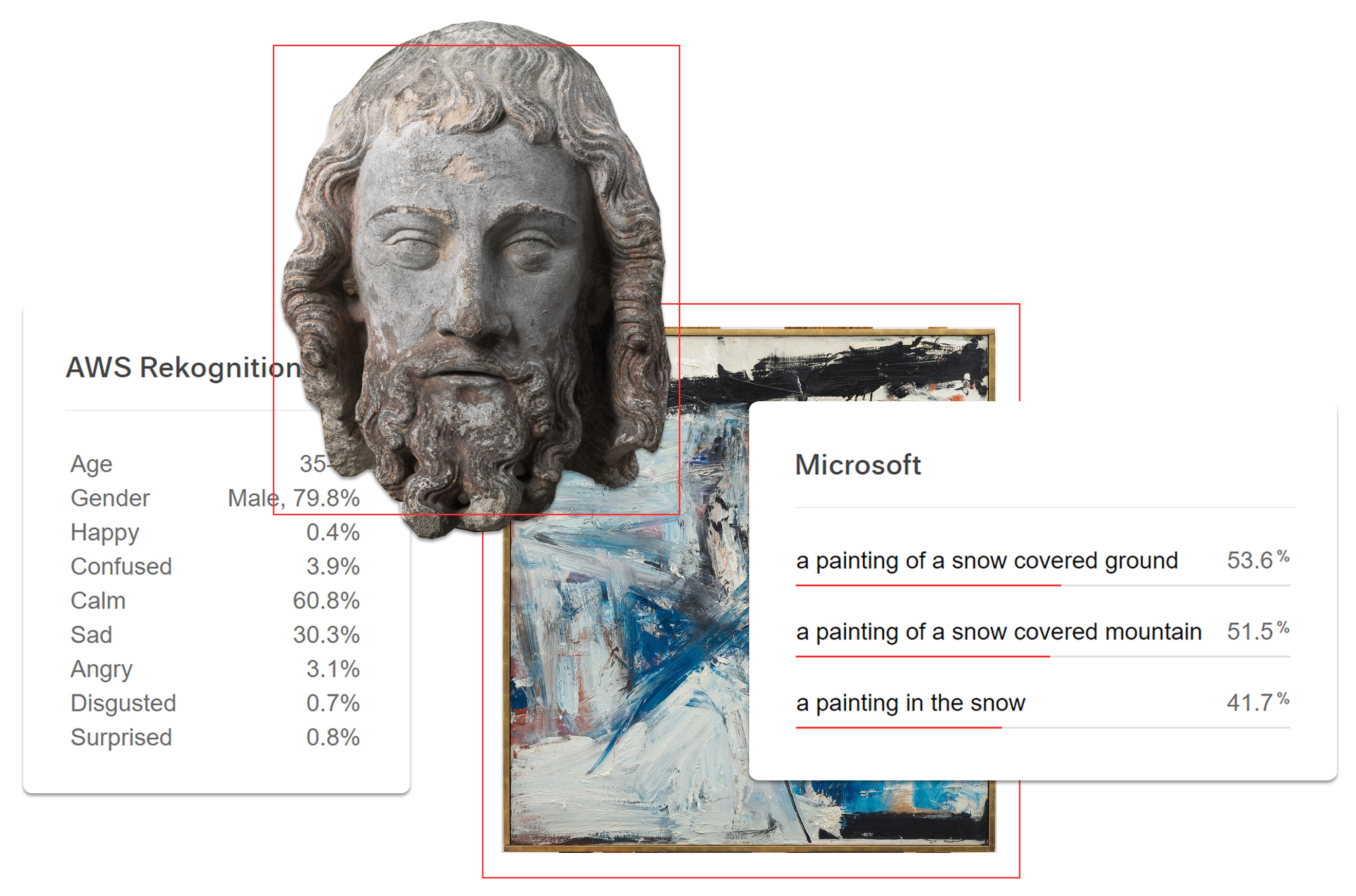

The second reason is to build a dataset for researching how AI services operate. All of the services we use are black boxes. This means the services do not disclose the algorithms and training sets used in their systems so we are left to guess how they operate. We use them and provide the data, in part, to call attention to the differences and biases inherent in AI services.

How?

The Harvard Art Museums collects artificially-generated data on artworks from nine different AI and computer-vision services: Amazon Nova, Amazon Rekognition, Clarifai, Imagga, Google Vision, Microsoft Cognitive Services, Azure OpenAI, Anthropic's Claude (via AWS Bedrock), and Meta's Llama (via AWS Bedrock). For each artwork, these services produce interpretations otherwise known as “annotations” that include generated descriptions, tags, and captions as well as object, face, and text recognition. When a user searches for a keyword, this site takes the user-inputted keyword and finds artworks that contain a matching machine-generated tag or phrase. From there, the user can go to an individual artwork to see and compare the annotations from the AI services.

What?

We've learned a bit about our collections thanks to these services. Hear about our discoveries in the presentation, ‘Elephants on Parade or: A Cavalcade of Discoveries from Five CV Systems‘, given by Jeff Steward at the AEOLIAN Network workshop ‘Reimagining Industry / Academic / Cultural Heritage Partnerships in AI’ on Monday, October 25, 2021. Then dive in to statistics about the annotations and services.

Additional Reading, Viewing, and Listening

The Pig and the Algorithm

Kate Palmer Albers, Plot, March 4, 2017

Computer Vision and Cultural Heritage: A Case Study

Catherine Nicole Coleman, AEOLIAN Network, April 2022

Surprise Machines

Rodighiero, Dario, Lins Derry, Douglas Duhaime, Jordan Kruguer, Maximilian C. Mueller, Christopher Pietsch, Jeffrey T. Schnapp, Jeff Steward, and metaLAB, Information Design Journal, December 2022

Dreaming of AI: Perspectives on AI Use in Cultural Heritage (YouTube)

BPOC Webinar, September 29, 2023