Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-30 |
| Gender | Female, 54.2% |
| Calm | 50.7% |
| Angry | 45.3% |
| Fear | 45.1% |
| Confused | 45.1% |
| Sad | 45.1% |
| Disgusted | 45.1% |
| Happy | 48.2% |
| Surprised | 45.5% |

Feature analysis

Amazon

| Person | 99.4% |

Categories

Imagga

| paintings art | 99.6% |

Captions

Microsoft

Created on 2019-10-30

| Caption | Confidence |
| --- | --- |
| a room with art on the wall | 63.3% |
| a close up of a window | 41.2% |
| a window in a room | 41.1% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2025-01-30

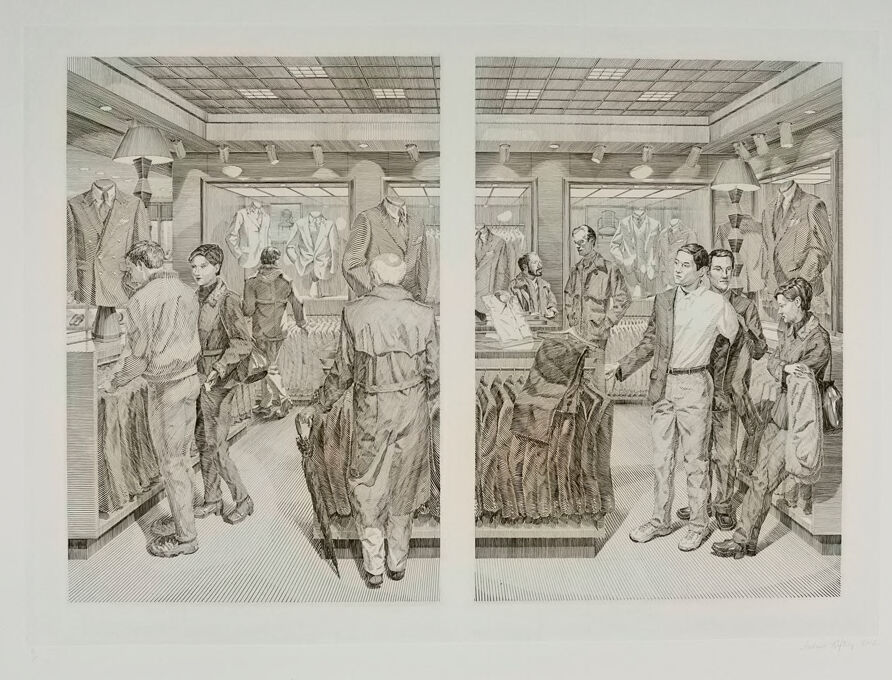

The image depicts a busy clothing store setting, where multiple people are engaged in activities such as browsing, trying on clothes, and conversing. The environment appears to be a men's apparel section, with suits and jackets prominently displayed on racks and hangers. The store interior features a well-lit and organized arrangement, with wood-paneled displays and a grid-like ceiling with recessed lighting. The composition is split into two panels, which are mirror images of each other, creating a symmetrical and balanced visual effect.

Created by gpt-4 on 2025-01-30

This image depicts a detailed and intricate drawing of an interior scene, likely inside a menswear store. The drawing is monochromatic and is divided into two panels as if looking through a set of double windows or a transparent barrier into the store. In the left panel, there are individuals who appear to be customers examining various articles of clothing. Some are looking through items on hangers, while another seems to be inspecting a piece of clothing more closely. Clothing racks and what might be shelves or dressing cubicles are also visible. On the right panel, we see a central figure standing confidently with one hand on the hip, surrounded by more clothing pieces and another individual sitting down to the right, possibly waiting or trying on shoes. In the background, a person appears to be standing behind a counter, and various clothing items are displayed throughout the scene. The attention to detail in the drawing is meticulous, showing textures and patterns of the clothes, shadow and light effects, and the demeanors of the individuals engaged in shopping activities. The ambiance suggests a calm and quiet shopping experience with patrons engrossed in their task of selecting clothes. The ceiling features lighting fixtures which illuminate the space evenly. The artwork has been placed on a larger canvas or paper, creating a substantial border around it. The entire composition offers a glimpse into a moment of everyday life with a sense of realism and precise depiction.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2025-01-30

This is a black and white illustration showing the interior of what appears to be a men's clothing store. The drawing is split into two panels showing different sections of the store. The space features display racks with suits and other menswear hanging on them, and track lighting on the ceiling. Several customers are browsing the merchandise while others appear to be having conversations. The store has a clean, organized layout with clothing displayed both on hangers and on mannequins. The style of the drawing and the clothing suggests this might be from the mid-20th century. The illustration technique uses fine lines and shading to create depth and detail in the scene.

Created by claude-3-opus-20240229 on 2025-01-30

The image shows two pencil sketches or drawings side by side, depicting a group of men in what appears to be a retail clothing store or tailor's shop. The men are dressed in mid-20th century style suits and hats. Some are browsing clothing items on racks, while others seem to be engaged in conversation or getting fitted by the store staff. The sketches capture a busy, lively scene inside the clothing store, with great attention paid to the details of the interior setting and the poses and interactions of the various male customers and employees.

Created by claude-3-haiku-20240307 on 2025-01-30

The image depicts the interior of a clothing store or tailor's shop. It shows two scenes, one on the left and one on the right. In the left scene, there are several people, both men and women, standing and examining various clothing items displayed on racks and shelves. In the right scene, there appears to be a customer or group of customers interacting with a store employee or tailor, possibly discussing or trying on clothing. The overall scene conveys a sense of activity and bustling in a retail or service-oriented establishment.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-01-30

The image presents a diptych, a two-panel artwork featuring a scene of men in a clothing store. The left panel depicts a man standing with his back to the viewer, facing another man who is partially obscured by a rack of clothes. In the background, several other men are visible, some standing and others sitting. The right panel shows a man in a white shirt and dark jacket standing in front of a counter, with another man sitting behind it. The background of this panel also features several other men, as well as racks of clothes and mannequins displaying suits. The artwork is rendered in black and white, with a focus on shading and texture to create depth and dimensionality. The overall atmosphere of the piece is one of quiet contemplation, inviting the viewer to reflect on the scene before them.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-01-30

The image is a black-and-white illustration of two people standing in front of a clothing store. The people are wearing formal attire. The store has a glass door and a glass window, and the interior is dimly lit. There are several mannequins dressed in formal wear, and some clothes are hanging on the racks. The ceiling has several lights, and the walls are white. The image is framed in a white border.

Created by amazon.nova-pro-v1:0 on 2025-01-30

The image is a black-and-white drawing of a store with two parts. In the left part, there are four people standing in front of a clothing store. The man on the left is holding a cane, and the man in the middle is wearing a hat. On the right, there are three people standing in front of a clothing store. The man in the middle is wearing a belt and a watch.