Machine Generated Data

Tags

Amazon

created on 2023-10-25

| Tag | Confidence |
|---|---|
| Face | 99.5 |
| Head | 99.5 |
| Photography | 99.5 |
| Portrait | 99.5 |
| People | 98.9 |
| Person | 98.4 |
| Baby | 98.4 |
| Person | 98 |
| Adult | 98 |
| Male | 98 |
| Man | 98 |
| Person | 97.4 |
| Adult | 97.4 |
| Male | 97.4 |
| Man | 97.4 |
| Person | 95.2 |
| Clothing | 93.2 |
| Hat | 93.2 |
| Officer | 87.8 |
| Person | 86.2 |
| Adult | 86.2 |
| Male | 86.2 |
| Man | 86.2 |
| City | 85.5 |
| Formal Wear | 84.2 |
| Suit | 84.2 |
| Urban | 81.1 |
| Military | 81 |
| Captain | 77.1 |
| Outdoors | 73.9 |
| Coat | 70.5 |
| Suit | 70.2 |
| Glove | 57.9 |
| Window | 57.8 |
| Cap | 57.7 |
| Military Uniform | 57.7 |
| Transportation | 56.4 |
| Vehicle | 56.4 |
| Terminal | 56.4 |
| Sailor Suit | 56.3 |
| Nature | 56 |
| Firearm | 56 |
| Gun | 56 |
| Rifle | 56 |
| Weapon | 56 |
| Weather | 55.7 |
| Door | 55.2 |
| Baseball | 55 |
| Baseball Glove | 55 |
| Sport | 55 |

Clarifai

created on 2023-10-15

| Tag | Confidence |
|---|---|
| people | 99.2 |
| vehicle window | 96.9 |
| window | 96.6 |
| monochrome | 96.3 |
| leader | 92.6 |
| man | 92.5 |
| group | 91.1 |
| two | 86 |
| indoors | 86 |
| portrait | 83 |
| child | 81.9 |
| administration | 80.7 |
| family | 80.5 |
| woman | 78.6 |
| adult | 78.4 |
| aircraft | 78.1 |
| interaction | 76.1 |
| three | 75.5 |
| street | 74.9 |
| airplane | 74.8 |

Imagga

created on 2019-02-01

| Tag | Confidence |
|---|---|
| man | 32.2 |
| people | 30.7 |
| male | 24.9 |
| person | 22.2 |
| barbershop | 21.1 |
| adult | 20.3 |
| shop | 20 |
| couple | 18.3 |
| smiling | 18.1 |
| television | 17.4 |
| happy | 16.9 |
| senior | 16.9 |
| black | 16.8 |
| looking | 16.8 |
| home | 15.1 |
| bow tie | 15 |
| sitting | 14.6 |
| portrait | 14.2 |
| business | 14 |
| office | 13.7 |
| mercantile establishment | 13.7 |
| family | 12.4 |
| businessman | 12.4 |
| necktie | 12.1 |
| telecommunication system | 12 |
| two | 11.8 |
| old | 11.8 |
| handsome | 11.6 |
| lifestyle | 11.6 |
| indoors | 11.4 |
| face | 11.4 |
| mature | 11.2 |
| hair | 11.1 |
| happiness | 11 |
| worker | 10.5 |
| expression | 10.2 |
| employee | 10.2 |
| bartender | 10.1 |
| smile | 10 |
| child | 9.8 |
| professional | 9.8 |
| window | 9.7 |
| hairdresser | 9.7 |
| elderly | 9.6 |
| room | 9.6 |
| women | 9.5 |
| men | 9.4 |
| day | 9.4 |
| waiter | 9.3 |
| house | 9.2 |
| modern | 9.1 |
| place of business | 9.1 |
| attractive | 9.1 |
| interior | 8.8 |
| computer | 8.8 |
| love | 8.7 |
| corporate | 8.6 |
| human | 8.2 |
| girls | 8.2 |
| building | 8 |
| to | 8 |
| mother | 7.9 |
| glass | 7.8 |
| executive | 7.7 |
| desk | 7.7 |
| wife | 7.6 |
| females | 7.6 |
| fashion | 7.5 |
| dining-room attendant | 7.5 |
| shirt | 7.5 |
| silhouette | 7.4 |
| indoor | 7.3 |
| businesswoman | 7.3 |
| aged | 7.2 |
| dress | 7.2 |
| holiday | 7.2 |
| working | 7.1 |
| together | 7 |

Google

created on 2019-02-01

| Tag | Confidence |
|---|---|
| Photograph | 97 |
| Snapshot | 87.7 |
| Black-and-white | 85.4 |
| Standing | 79.6 |
| Photography | 76.4 |
| Monochrome | 75.8 |
| Monochrome photography | 57.8 |
| Window | 53.8 |
| Style | 51 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 11-19 |
| Gender | Female, 99.9% |
| Happy | 93.9% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Calm | 3.9% |
| Sad | 2.2% |
| Confused | 1.4% |
| Angry | 0.2% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 53-61 |
| Gender | Male, 99.3% |
| Calm | 99.4% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Happy | 0.3% |
| Confused | 0.1% |
| Angry | 0.1% |
| Disgusted | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Male, 99.7% |
| Calm | 77.3% |
| Sad | 9.9% |
| Surprised | 6.4% |
| Confused | 6.1% |
| Fear | 6.1% |
| Happy | 2.7% |
| Angry | 1% |
| Disgusted | 0.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 36-44 |
| Gender | Male, 89.7% |
| Happy | 40.9% |
| Calm | 36.8% |
| Sad | 10.5% |
| Surprised | 7.4% |
| Fear | 6.2% |
| Confused | 4% |
| Angry | 2.6% |
| Disgusted | 0.9% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 48 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 27 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 38.2% |
| food drinks | 31.7% |
| interior objects | 27.4% |
| text visuals | 2% |

Captions

Microsoft

created on 2019-02-01

| Caption | Confidence |
|---|---|
| a man standing in front of a window | 92.2% |
| a man standing in front of a mirror posing for the camera | 76.6% |
| a man standing in front of a bus window | 66.5% |

Clarifai

created by general-english-image-caption-blip on 2025-05-15

| Caption | Confidence |
|---|---|
| a photograph of a man in a suit and tie standing in front of a window | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-13

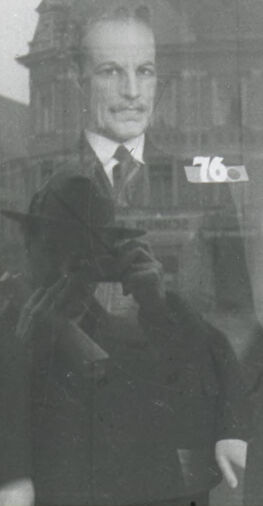

The image appears to be a black and white photograph of a storefront display with mannequins. The mannequins are dressed in period clothing.

There are four mannequins visible. The leftmost mannequin is a man in a suit, with a tag or sign that reads "76." Next to him is a young mannequin dressed in a sailor-style outfit, marked with "15." To the right and slightly behind them is another male mannequin in a suit and hat, tagged with "28." The fourth mannequin, on the right edge of the frame, is also dressed in a suit. All the mannequins have a somewhat lifelike appearance.

The reflection in the storefront window shows a building exterior. There is also an individual taking a photo, also wearing a dark suit and hat.

The overall tone is old-fashioned, suggesting the photograph was taken in the past. The contrast is moderate, allowing details to be visible.

Created by gemini-2.0-flash-lite on 2025-05-13

Here's a description of the image:

The image appears to be a black and white photograph, likely taken from a slide. It depicts a shop window displaying several mannequins. The mannequins are dressed in various outfits. One of the figures wears a formal suit with a tie, a sailor outfit, and a suit with a bow tie. The mannequins seem to be positioned in a way that suggests a fashion display. The numbers "76," "15," and "28" are visible on the mannequins' shoulders. The background behind the window shows a city scene with buildings visible. The overall impression is of an old, possibly vintage, photograph.

Text analysis

Amazon