Machine Generated Data

Tags

Amazon

created on 2021-12-15

Clarifai

created on 2023-10-15

Imagga

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| metropolitan | 28.9 |
| man | 24.9 |
| male | 20.6 |
| musical instrument | 20.1 |
| person | 20.1 |
| people | 18.4 |
| uniform | 14.9 |
| portrait | 14.9 |
| face | 14.2 |
| old | 13.9 |
| fashion | 13.6 |
| black | 13.2 |
| adult | 12.8 |
| dress | 12.6 |
| art | 12 |
| military uniform | 12 |
| ruler | 12 |
| dark | 11.7 |
| religion | 11.6 |
| wind instrument | 11.2 |
| style | 11.1 |
| clothing | 11.1 |
| sitting | 10.3 |
| percussion instrument | 10 |
| mystery | 9.6 |
| culture | 9.4 |
| room | 9.4 |
| religious | 9.4 |
| musician | 8.9 |
| interior | 8.8 |
| spiritual | 8.6 |
| statue | 8.6 |
| wall | 8.5 |
| vintage | 8.3 |
| makeup | 8.2 |
| one | 8.2 |
| retro | 8.2 |
| covering | 8.2 |
| lady | 8.1 |
| fantasy | 8.1 |
| brass | 7.9 |
| soldier | 7.8 |
| prayer | 7.7 |
| attractive | 7.7 |
| expression | 7.7 |
| sculpture | 7.7 |
| human | 7.5 |
| gold | 7.4 |
| hat | 7.4 |
| music | 7.4 |
| inside | 7.4 |
| light | 7.3 |
| sexy | 7.2 |
| looking | 7.2 |
| home | 7.2 |
| posing | 7.1 |
| love | 7.1 |

Google

created on 2021-12-15

| Tag | Confidence |
| --- | --- |
| Photograph | 94.2 |
| Furniture | 93.3 |
| Chair | 87.3 |
| Picture frame | 86.7 |
| Art | 84.5 |
| Suit | 81.5 |
| Painting | 81.3 |
| Table | 75.7 |
| Classic | 75.3 |
| Snapshot | 74.3 |
| Vintage clothing | 74.2 |
| Blazer | 72.7 |
| Tie | 70.9 |
| Illustration | 70.6 |
| Visual arts | 68.4 |
| Formal wear | 66.8 |
| Stock photography | 66.6 |
| Sitting | 63.3 |
| Room | 62.5 |
| History | 58.7 |

Color Analysis

Face analysis

AWS Rekognition

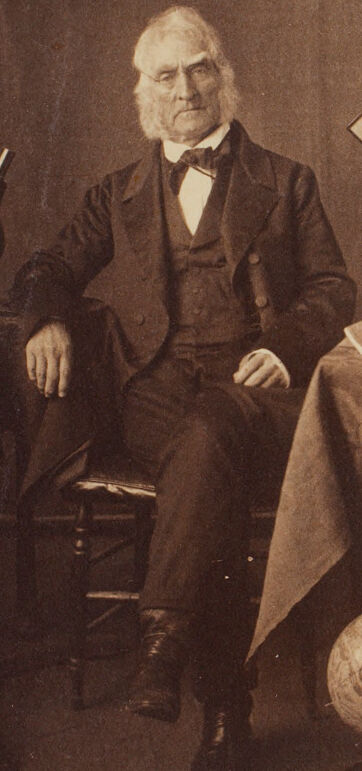

| Attribute | Value |
| --- | --- |
| Age | 51-69 |
| Gender | Male, 96.8% |
| Calm | 94.3% |
| Sad | 3.8% |
| Angry | 0.9% |
| Confused | 0.3% |
| Happy | 0.3% |
| Surprised | 0.2% |
| Disgusted | 0.1% |
| Fear | 0.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 67 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 95.4% |
| interior objects | 2.8% |

Captions

Microsoft

created on 2021-12-15

| Caption | Confidence |
| --- | --- |
| a vintage photo of a person | 84.5% |
| an old photo of a person | 84.4% |
| a vintage photo of a person in a suit and tie | 84.3% |