Machine Generated Data

Tags

Amazon

created on 2023-10-18

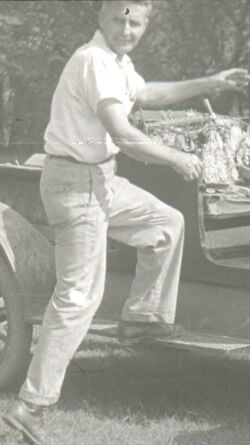

| Tag | Confidence (%) |
| --- | --- |
| Antique Car | 99.5 |
| Model T | 99.5 |
| Transportation | 99.5 |
| Vehicle | 99.5 |
| Person | 99 |
| Person | 98.8 |
| Machine | 98.7 |
| Wheel | 98.7 |
| Wheel | 98.6 |
| Car | 97.6 |
| Wheel | 95.8 |
| Spoke | 90.9 |
| Face | 83.8 |
| Head | 83.8 |
| Photography | 78.1 |
| Portrait | 78.1 |
| Outdoors | 74.7 |
| Tire | 69.1 |
| Wheel | 60.1 |
| Clothing | 57.5 |
| Footwear | 57.5 |
| Shoe | 57.5 |
| Alloy Wheel | 56.5 |
| Car Wheel | 56.5 |
| Motorcycle | 56.4 |
| Nature | 56.1 |
| Shorts | 56 |
| Buggy | 55.2 |

Clarifai

created on 2023-10-18

Imagga

created on 2019-01-31

| Tag | Confidence (%) |
| --- | --- |
| wheeled vehicle | 100 |
| tricycle | 100 |
| vehicle | 78 |
| conveyance | 50.2 |
| child | 30.5 |
| man | 20.2 |
| people | 18.4 |
| outdoors | 17.2 |
| male | 17.1 |
| black | 16.2 |
| old | 16 |
| outdoor | 15.3 |
| portrait | 14.9 |
| person | 13.9 |
| youth | 12.8 |
| adult | 12.3 |
| happy | 11.3 |
| outside | 11.1 |
| park | 10.7 |
| sitting | 10.3 |
| love | 10.3 |
| lifestyle | 10.1 |
| family | 9.8 |
| kid | 9.7 |
| rural | 9.7 |
| grass | 9.5 |
| happiness | 9.4 |
| relax | 9.3 |
| face | 9.2 |
| summer | 9 |
| transportation | 9 |
| couple | 8.7 |
| antique | 8.7 |
| bench | 8.4 |
| attractive | 8.4 |
| leisure | 8.3 |
| childhood | 8.1 |
| sexy | 8 |
| boy | 7.8 |
| color | 7.8 |
| men | 7.7 |
| head | 7.6 |
| joy | 7.5 |
| one | 7.5 |
| sport | 7.4 |
| vacation | 7.4 |
| children | 7.3 |
| looking | 7.2 |
| cart | 7.1 |
| work | 7.1 |
| day | 7.1 |
| travel | 7 |
| sky | 7 |
| together | 7 |

Google

created on 2019-01-31

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 96.8 |
| Motor vehicle | 96.3 |
| Snapshot | 85.9 |
| Vehicle | 82.5 |
| Mode of transport | 80.8 |
| Black-and-white | 74.4 |
| Photography | 70.6 |
| Automotive wheel system | 65.4 |
| Wheel | 65.2 |
| Automotive tire | 63 |
| Stock photography | 59.4 |
| Classic | 56.7 |
| Car | 56.4 |
| Square | 54.2 |
| Style | 52.5 |
| Vintage car | 50.6 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Estimate |
| --- | --- |
| Age | 37-45 |
| Gender | Male, 86.9% |
| Happy | 80.2% |
| Surprised | 7.1% |
| Fear | 6.2% |
| Sad | 5.9% |
| Calm | 5.5% |
| Disgusted | 2.1% |
| Confused | 1.3% |
| Angry | 1.2% |

Microsoft Cognitive Services

| Attribute | Estimate |
| --- | --- |
| Age | 44 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 100% |

Captions

Microsoft

created on 2019-01-31

| Caption | Confidence |
| --- | --- |
| a vintage photo of a small child in a car | 66% |
| a vintage photo of a person in a car | 65.9% |
| a little girl riding on the back of a truck | 51.2% |