Machine Generated Data

Tags

Amazon

created on 2019-11-07

Clarifai

created on 2019-11-07

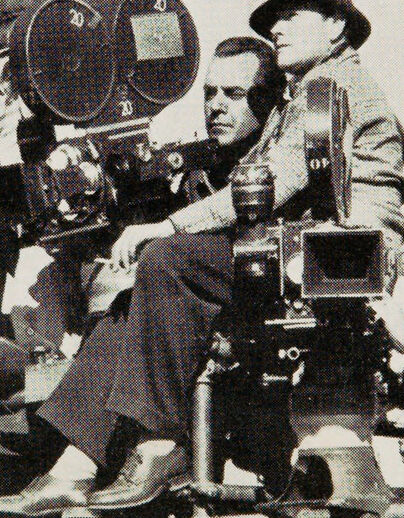

| Tag | Confidence |
|---|---|
| people | 100 |
| group together | 99.2 |
| adult | 99.2 |
| group | 99.1 |
| many | 97.9 |
| vehicle | 97.1 |
| military | 92.6 |
| man | 92.5 |
| leader | 90.9 |
| several | 90.4 |
| transportation system | 89.2 |
| outfit | 89.1 |
| watercraft | 88.7 |
| five | 88.2 |
| three | 88.1 |
| two | 87.1 |
| wear | 86.7 |
| war | 76.7 |
| administration | 76.6 |
| aircraft | 74.7 |

Imagga

created on 2019-11-07

| Tag | Confidence |
|---|---|
| projector | 100 |
| automaton | 74.8 |
| optical instrument | 50.5 |
| device | 47.7 |
| optical device | 27.8 |
| technology | 26 |
| metal | 25.7 |
| power | 21.8 |
| engine | 20.2 |
| equipment | 19.6 |
| machine | 19.1 |
| industry | 18.8 |
| transportation | 17 |
| old | 16.7 |
| part | 16.6 |
| mechanical | 14.6 |
| military | 14.5 |
| steel | 14.1 |
| vehicle | 14 |
| motor | 13.6 |
| war | 13.5 |
| vintage | 13.2 |
| futuristic | 12.6 |
| chrome | 12.2 |
| antique | 12.2 |
| car | 12 |
| safety | 12 |
| retro | 11.5 |
| weapon | 11.5 |
| film | 11.4 |
| future | 11.2 |
| gun | 11.2 |
| protection | 10.9 |
| auto | 10.5 |
| entertainment | 10.1 |
| industrial | 10 |
| metallic | 9.2 |
| black | 9 |
| soldier | 8.8 |
| man | 8.8 |
| mask | 8.6 |
| automobile | 8.6 |
| wheel | 8.5 |
| fast | 8.4 |
| speed | 8.2 |
| danger | 8.2 |
| movie | 8.2 |
| tool | 8.1 |
| new | 8.1 |
| detail | 8 |
| shiny | 7.9 |
| cannon | 7.9 |
| automotive | 7.8 |
| parts | 7.8 |
| cinema | 7.8 |
| mechanic | 7.8 |
| pipe | 7.8 |
| lens | 7.7 |
| modern | 7.7 |
| fuel | 7.7 |
| camera | 7.7 |
| engineering | 7.6 |
| light | 7.3 |
| valve | 7.3 |
| science | 7.1 |

Google

created on 2019-11-07

| Tag | Confidence |
|---|---|
| Photograph | 96 |
| Photography | 70.6 |
| Team | 67.7 |
| Room | 65.7 |
| Stock photography | 64 |
| Art | 58.1 |
| History | 57.6 |
| Crew | 54.9 |
| Illustration | 50.4 |
| Collection | 50 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 48-66 |
| Gender | Male, 97% |
| Happy | 0.7% |
| Disgusted | 1.2% |
| Surprised | 0.9% |
| Calm | 85% |
| Fear | 0.4% |
| Angry | 3.6% |
| Sad | 4.1% |
| Confused | 4.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 9-19 |
| Gender | Female, 83.7% |
| Sad | 21.7% |
| Happy | 0% |
| Fear | 0.1% |
| Confused | 3.6% |
| Surprised | 0.2% |
| Calm | 73.8% |
| Angry | 0.6% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 29-45 |
| Gender | Male, 96.5% |
| Angry | 1% |
| Calm | 97.4% |
| Disgusted | 0.2% |
| Happy | 0% |
| Fear | 0.1% |
| Sad | 0.9% |
| Surprised | 0.1% |
| Confused | 0.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-37 |
| Gender | Male, 81.9% |
| Disgusted | 0.2% |
| Fear | 1.2% |
| Happy | 0.3% |
| Confused | 0.6% |
| Angry | 82.4% |
| Calm | 8.3% |
| Sad | 0.4% |
| Surprised | 6.5% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 56 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 44 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 29 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 77.1% |
| people portraits | 20.6% |

Captions

Microsoft

created on 2019-11-07

| Caption | Confidence |
|---|---|
| a vintage photo of a group of people posing for the camera | 94.5% |
| a vintage photo of a group of people posing for a picture | 94.4% |
| a vintage photo of a group of people posing for a photo | 93.5% |