Machine Generated Data

Tags

Amazon

created on 2022-05-28

Clarifai

created on 2023-10-30

Imagga

created on 2022-05-28

| Tag | Confidence (%) |
| --- | --- |
| person | 37 |
| portrait | 36.3 |
| adult | 33.1 |
| male | 29.2 |
| people | 29 |
| attractive | 28.7 |
| man | 28.2 |
| hair | 23.8 |
| fashion | 22.6 |
| face | 22 |
| pretty | 21 |
| expression | 20.5 |
| bow tie | 20 |
| lady | 18.7 |
| sexy | 18.5 |
| black | 17.9 |
| model | 17.9 |
| comedian | 17.4 |
| human | 17.3 |
| suit | 17.2 |
| performer | 16.8 |
| lifestyle | 15.9 |
| necktie | 15.5 |
| body | 15.2 |
| handsome | 15.2 |
| happy | 15 |
| dress | 14.5 |
| smile | 13.5 |
| sensual | 12.7 |
| smiling | 12.3 |
| eyes | 12.1 |
| fun | 12 |
| entertainer | 11.9 |
| style | 11.9 |
| casual | 11.9 |
| love | 11.8 |
| posing | 11.6 |
| business | 11.5 |
| cute | 11.5 |
| serious | 11.4 |
| couple | 11.3 |
| makeup | 11.2 |
| lips | 11.1 |
| clothing | 11.1 |
| sensuality | 10.9 |
| make | 10.9 |
| device | 10.6 |
| businessman | 10.6 |
| elevator | 10.5 |
| looking | 10.4 |
| youth | 10.2 |
| dark | 10 |
| room | 9.9 |
| hand | 9.9 |
| brunette | 9.6 |
| elegant | 9.4 |
| alone | 9.1 |
| garment | 9 |
| one | 9 |
| together | 8.8 |
| urban | 8.7 |
| wig | 8.6 |
| tie | 8.5 |
| two | 8.5 |
| lifting device | 8.4 |
| guy | 8.3 |
| mother | 8.2 |
| world | 7.8 |
| erotic | 7.8 |
| old | 7.7 |
| skin | 7.6 |
| passion | 7.5 |
| relationship | 7.5 |
| call | 7.5 |
| office | 7.3 |
| women | 7.1 |
| family | 7.1 |
| interior | 7.1 |
| happiness | 7.1 |
| modern | 7 |
| look | 7 |

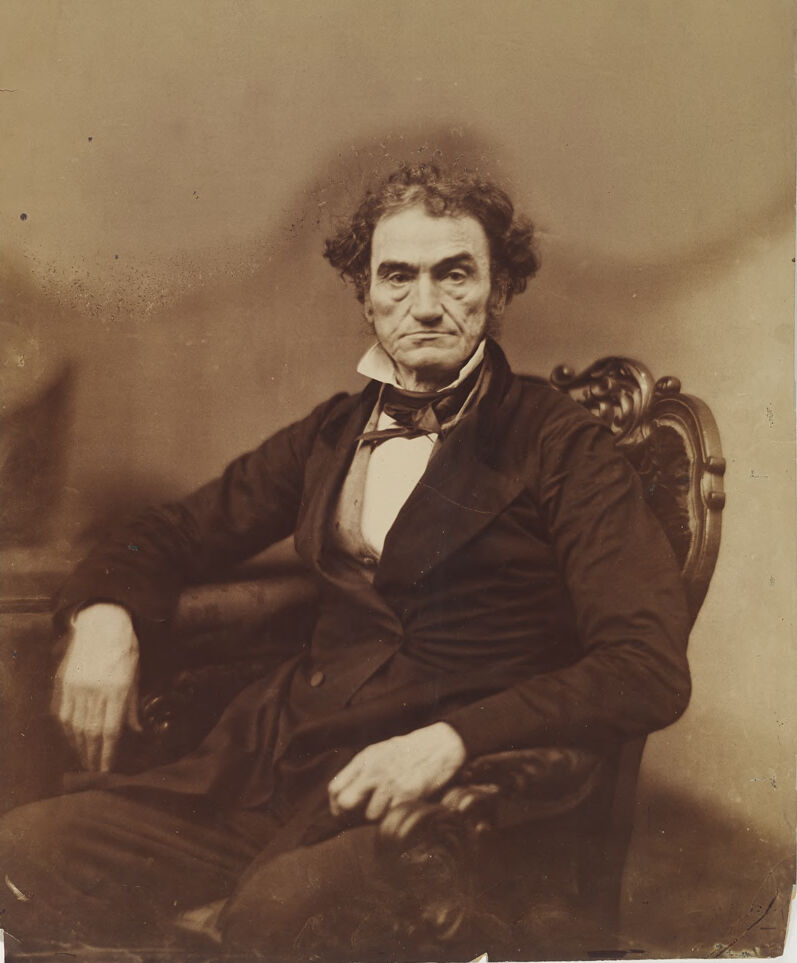

Google

created on 2022-05-28

| Tag | Confidence (%) |
| --- | --- |
| Coat | 86 |
| Sleeve | 85.8 |
| Collar | 83.4 |
| Suit | 79.8 |
| Blazer | 78.2 |
| Tints and shades | 76.1 |
| Vintage clothing | 73.6 |
| Formal wear | 72.3 |
| Art | 67.9 |
| Classic | 67.5 |
| Beard | 64.9 |
| Sitting | 64.5 |
| Stock photography | 64 |
| Moustache | 61 |
| Room | 59 |
| Facial hair | 58 |
| Portrait | 57.1 |
| Retro style | 55.8 |
| Rectangle | 53.7 |
| History | 51.3 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

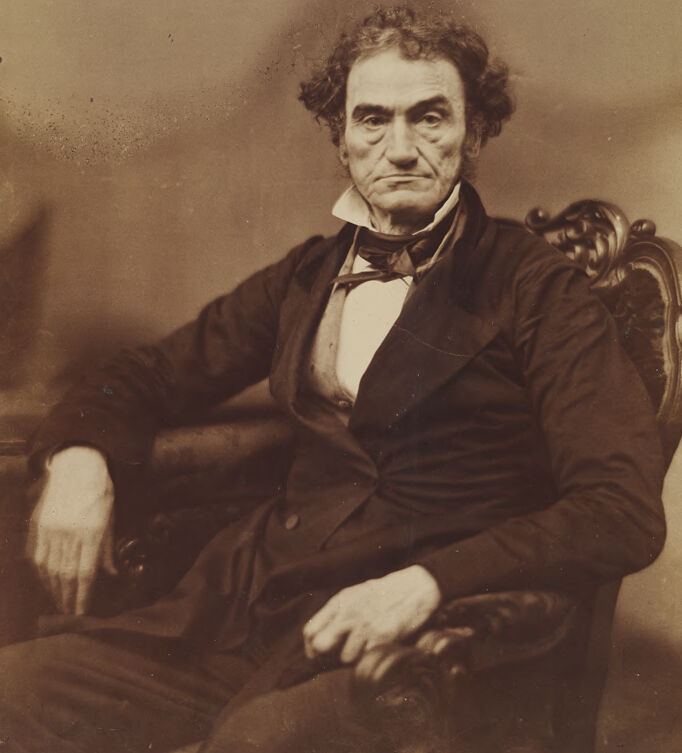

| Attribute | Value |
| --- | --- |
| Age | 56-64 |
| Gender | Male, 100% |
| Sad | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Confused | 0.2% |
| Angry | 0.1% |
| Calm | 0% |
| Disgusted | 0% |
| Happy | 0% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 66 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 77.2% |
| paintings art | 20.6% |
| food drinks | 1.4% |

Captions

Microsoft

created on 2022-05-28

| Caption | Confidence |
| --- | --- |
| a vintage photo of Rufus Choate holding a book | 72.3% |
| a vintage photo of Rufus Choate | 72.2% |
| an old photo of Rufus Choate holding a book | 72.1% |