Machine Generated Data

Tags

Amazon

created on 2022-05-27

Clarifai

created on 2023-10-30

Imagga

created on 2022-05-27

| cup | 32.1 |
| container | 29.8 |
| globe | 25 |
| earth | 23.8 |
| planet | 21.1 |
| tableware | 20.6 |
| world | 19.6 |
| money | 17 |
| map | 16.8 |
| global | 16.4 |
| currency | 16.1 |
| china | 16.1 |
| finance | 16 |
| symbol | 14.8 |
| black | 14.4 |
| ware | 13.5 |
| moon | 13.5 |
| space | 13.2 |
| coin | 12.4 |
| business | 11.5 |
| icon | 11.1 |
| shiny | 11.1 |
| sphere | 11 |
| silhouette | 10.8 |
| circle | 10.7 |
| night | 10.6 |
| device | 10.3 |
| design | 10.2 |
| dark | 10 |
| porcelain | 9.5 |
| art | 9.5 |
| light | 9.4 |
| economy | 9.3 |
| cash | 9.1 |
| sign | 9 |
| financial | 8.9 |
| universe | 8.8 |
| north | 8.6 |
| card | 8.6 |
| ceramic ware | 8.5 |
| fastener | 8.4 |
| texture | 8.3 |
| bank | 8.1 |
| holiday | 7.9 |
| continent | 7.8 |
| coins | 7.7 |
| geography | 7.7 |
| reflection | 7.5 |
| dollar | 7.4 |
| shape | 7.4 |
| banking | 7.3 |
| digital | 7.3 |
| metal | 7.2 |
| computer | 7.2 |

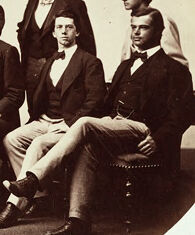

Google

created on 2022-05-27

| Font | 79.7 |
| Oval | 78.3 |
| Tints and shades | 77.3 |
| Suit | 76.7 |
| Art | 70.1 |
| Event | 69.8 |
| Metal | 68 |
| Circle | 67.4 |
| Team | 67.1 |
| Sitting | 66 |
| Vintage clothing | 61.6 |
| Monochrome | 61.6 |
| Crew | 56.4 |
| Visual arts | 52.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Age | 23-33 |

| Gender | Male, 99.8% |

| Calm | 97.5% |

| Surprised | 6.4% |

| Fear | 5.9% |

| Sad | 2.5% |

| Confused | 0.5% |

| Angry | 0.4% |

| Disgusted | 0.1% |

| Happy | 0.1% |

AWS Rekognition

| Age | 19-27 |

| Gender | Male, 100% |

| Calm | 96.8% |

| Surprised | 6.4% |

| Fear | 6% |

| Sad | 2.4% |

| Angry | 1.1% |

| Confused | 0.5% |

| Happy | 0.2% |

| Disgusted | 0.1% |

AWS Rekognition

| Age | 27-37 |

| Gender | Male, 99.8% |

| Calm | 87.6% |

| Surprised | 6.6% |

| Fear | 6% |

| Happy | 5.5% |

| Sad | 2.6% |

| Confused | 1.9% |

| Angry | 1.9% |

| Disgusted | 0.7% |

AWS Rekognition

| Age | 21-29 |

| Gender | Male, 100% |

| Calm | 98.2% |

| Surprised | 6.3% |

| Fear | 5.9% |

| Sad | 2.6% |

| Angry | 0.2% |

| Confused | 0.1% |

| Disgusted | 0.1% |

| Happy | 0% |

AWS Rekognition

| Age | 23-33 |

| Gender | Male, 100% |

| Sad | 76.1% |

| Calm | 62.6% |

| Surprised | 6.4% |

| Fear | 5.9% |

| Confused | 1% |

| Angry | 0.5% |

| Happy | 0.3% |

| Disgusted | 0.2% |

AWS Rekognition

| Age | 35-43 |

| Gender | Male, 100% |

| Angry | 91% |

| Calm | 7.2% |

| Surprised | 6.3% |

| Fear | 5.9% |

| Sad | 2.5% |

| Confused | 0.1% |

| Disgusted | 0.1% |

| Happy | 0.1% |

AWS Rekognition

| Age | 36-44 |

| Gender | Male, 99.8% |

| Calm | 99.8% |

| Surprised | 6.3% |

| Fear | 5.9% |

| Sad | 2.2% |

| Confused | 0.1% |

| Angry | 0% |

| Disgusted | 0% |

| Happy | 0% |

AWS Rekognition

| Age | 20-28 |

| Gender | Male, 99.9% |

| Calm | 99.1% |

| Surprised | 6.3% |

| Fear | 5.9% |

| Sad | 2.3% |

| Happy | 0.1% |

| Angry | 0.1% |

| Confused | 0.1% |

| Disgusted | 0% |

AWS Rekognition

| Age | 26-36 |

| Gender | Male, 100% |

| Calm | 63.4% |

| Sad | 14.7% |

| Angry | 11.5% |

| Surprised | 6.8% |

| Fear | 6.3% |

| Confused | 5.6% |

| Disgusted | 1.9% |

| Happy | 1% |

AWS Rekognition

| Age | 19-27 |

| Gender | Male, 100% |

| Calm | 98.3% |

| Surprised | 6.3% |

| Fear | 5.9% |

| Sad | 2.3% |

| Confused | 0.5% |

| Happy | 0.2% |

| Angry | 0.1% |

| Disgusted | 0.1% |

AWS Rekognition

| Age | 33-41 |

| Gender | Male, 99.9% |

| Calm | 97.1% |

| Surprised | 6.4% |

| Fear | 5.9% |

| Sad | 2.4% |

| Angry | 0.8% |

| Confused | 0.3% |

| Happy | 0.2% |

| Disgusted | 0.1% |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| paintings art | 79.9% |
| food drinks | 17.8% |
| interior objects | 1.3% |

Captions

Microsoft

created on 2022-05-27

| an old photo of a person | 77.4% |
| a group of people posing for a photo | 74.1% |
| old photo of a person | 74% |