Machine Generated Data

Tags

Amazon

created on 2019-06-05

Clarifai

created on 2019-06-05

Imagga

created on 2019-06-05

| Tag | Score |
|---|---|
| child | 19.7 |
| people | 17.9 |
| building | 17 |
| old | 16.7 |
| person | 15.8 |
| statue | 15.7 |
| architecture | 15.6 |
| ancient | 15.6 |
| man | 15.5 |
| wall | 14.7 |
| sculpture | 14.6 |
| world | 14.1 |
| human | 13.5 |
| adult | 12.4 |
| mother | 12.2 |
| male | 11.7 |
| city | 11.6 |
| history | 11.6 |
| historical | 11.3 |
| monument | 11.2 |
| parent | 11.1 |
| kin | 11.1 |
| stone | 11 |
| tourism | 10.7 |
| portrait | 10.4 |
| model | 10.1 |
| travel | 9.9 |
| art | 9.2 |
| historic | 9.2 |
| brick | 8.9 |
| couple | 8.7 |
| love | 8.7 |
| black | 8.4 |
| house | 8.4 |
| street | 8.3 |
| fountain | 8.1 |
| lady | 8.1 |
| standing | 7.8 |
| juvenile | 7.8 |
| attractive | 7.7 |
| culture | 7.7 |
| grunge | 7.7 |
| head | 7.6 |
| fashion | 7.5 |
| light | 7.4 |
| tourist | 7.3 |
| life | 7.3 |
| aged | 7.2 |
| dirty | 7.2 |
| sexy | 7.2 |
| landmark | 7.2 |
| religion | 7.2 |
| family | 7.1 |

Google

created on 2019-06-05

| Tag | Score |
|---|---|
| Photograph | 97.4 |
| People | 95.9 |
| Standing | 89 |
| Snapshot | 88.9 |
| Vintage clothing | 76.3 |
| Family | 68.8 |
| Photography | 67.8 |
| Room | 65.7 |
| Picture frame | 62.5 |
| Stock photography | 62.1 |
| History | 54.1 |

Microsoft

created on 2019-06-05

| Tag | Score |
|---|---|
| building | 99.3 |
| clothing | 99 |
| person | 98.2 |
| outdoor | 94.4 |
| old | 89.3 |
| posing | 88.2 |
| standing | 84.9 |
| man | 82.3 |
| human face | 78.5 |
| child | 75.2 |
| boy | 74.4 |
| black | 70.8 |
| photograph | 63.7 |
| vintage clothing | 61.8 |
| smile | 59.6 |
| vintage | 25.5 |
| stone | 14.6 |
| picture frame | 11.2 |

Color Analysis

Face analysis


AWS Rekognition

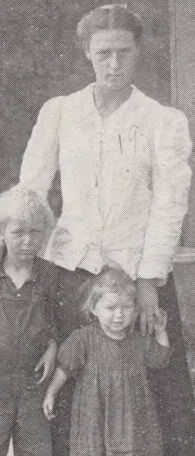

| Attribute | Value |
|---|---|
| Age | 35-52 |
| Gender | Male, 50.1% |
| Angry | 45.6% |
| Calm | 46.8% |
| Sad | 52% |
| Surprised | 45.1% |
| Disgusted | 45.1% |
| Happy | 45.1% |
| Confused | 45.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 6-13 |
| Gender | Female, 54.9% |
| Sad | 54.8% |
| Happy | 45% |
| Surprised | 45% |
| Disgusted | 45% |
| Confused | 45% |
| Calm | 45.1% |
| Angry | 45.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 38-57 |
| Gender | Male, 50% |
| Calm | 45.4% |
| Sad | 53.4% |
| Surprised | 45.2% |
| Angry | 45.3% |
| Happy | 45.3% |
| Confused | 45.3% |
| Disgusted | 45.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 11-18 |
| Gender | Female, 54.4% |
| Angry | 45.1% |
| Calm | 45.1% |
| Confused | 45.1% |
| Happy | 45.1% |
| Sad | 54.4% |
| Disgusted | 45.1% |
| Surprised | 45.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 10-15 |
| Gender | Female, 53.8% |
| Happy | 45.5% |
| Sad | 49.8% |
| Angry | 48.5% |
| Surprised | 45.3% |
| Calm | 45.3% |
| Confused | 45.3% |
| Disgusted | 45.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 52.8% |
| Happy | 45.3% |
| Sad | 49% |
| Surprised | 45.3% |
| Angry | 47.2% |
| Calm | 46.9% |
| Disgusted | 46% |
| Confused | 45.3% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 28 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 39 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 69 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 76.1% |
| paintings art | 12.8% |
| streetview architecture | 9.3% |