Machine Generated Data

Tags

Amazon

created on 2022-01-09

Clarifai

created on 2023-10-25

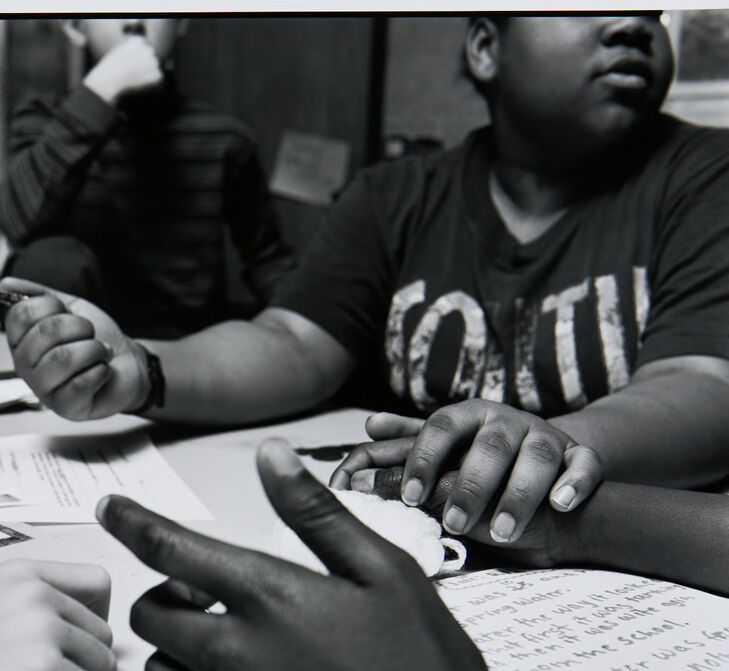

| Tag | Confidence |
|---|---|
| people | 99.2 |
| monochrome | 97.9 |
| woman | 96.3 |
| man | 94.3 |
| adult | 94 |
| child | 93.6 |
| desk | 91.1 |
| education | 90.3 |
| two | 89.6 |
| boy | 88.4 |
| hand | 88 |
| group | 87.3 |
| composition | 87.2 |
| school | 87 |
| concentration | 86.2 |
| table | 85 |
| book series | 82.6 |
| writing | 82.3 |
| portrait | 81.7 |
| girl | 81.7 |

Imagga

created on 2022-01-09

Google

created on 2022-01-09

| Tag | Confidence |
|---|---|
| Hand | 95.8 |
| Black | 89.5 |
| Black-and-white | 87.1 |
| Table | 86.1 |
| Finger | 85.7 |
| Writing implement | 85.3 |
| Gesture | 85.3 |
| Font | 84.5 |
| Style | 84.1 |
| Adaptation | 79.4 |
| Nail | 79.3 |
| Art | 78.6 |
| Office instrument | 78.2 |
| Monochrome photography | 77.4 |
| Writing instrument accessory | 76.8 |
| Monochrome | 76.6 |
| Beauty | 75 |
| Snapshot | 74.3 |
| T-shirt | 74.1 |
| Office supplies | 72.7 |

Microsoft

created on 2022-01-09

| Tag | Confidence |
|---|---|
| person | 99.3 |
| text | 97.9 |
| sitting | 97 |
| indoor | 93 |
| black and white | 90.4 |
| drawing | 86.8 |
| handwriting | 86.8 |
| watch | 78.7 |
| people | 58.1 |
| clothing | 57.9 |
| human face | 55.9 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 7-17 |
| Gender | Male, 57.2% |
| Calm | 98.4% |
| Happy | 0.5% |
| Surprised | 0.3% |
| Fear | 0.2% |
| Confused | 0.1% |
| Sad | 0.1% |
| Angry | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-27 |
| Gender | Male, 59.1% |
| Calm | 77.8% |
| Sad | 17.8% |
| Disgusted | 1.2% |
| Angry | 1.2% |
| Fear | 1.1% |
| Happy | 0.6% |
| Surprised | 0.2% |
| Confused | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 5 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 50.5% |
| people portraits | 38.8% |
| food drinks | 8.4% |

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
|---|---|
| a group of people sitting at a table | 86.7% |
| a group of people sitting around a table | 86.1% |
| a group of people sitting at a desk | 86% |

Clarifai

created by general-english-image-caption-blip on 2025-05-18

| Caption | Confidence |
|---|---|
| a photograph of a group of people sitting at a table with papers and papers | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

Here is a description of the image:

The black and white photograph shows three children at a table, likely engaged in a school activity. The main focus is on their hands and the papers spread out on the table in front of them.

In the foreground, a hand with dark skin is pointing, drawing attention to something on the paper. Another hand with lighter skin is resting on the table next to it. The hands are placed on top of paper that appears to have text, possibly a worksheet or assignment. The text on the page is barely discernible, though a phrase with the word "Water" can be made out. There is also a sketch with dark shading.

To the right, a child wearing a t-shirt with a large graphic or text is sitting. Their hands are resting on top of the first pair of hands. One hand is clutching a white object that appears to be made out of yarn or string. To the left, another child is holding a pencil.

In the background, there are two other children, blurred and out of focus, sitting at the same table. The one in the front is resting his chin.

Created by gemini-2.0-flash-lite on 2025-05-17

Here is a description of the image:

Overall Impression:

The image is a black and white photograph depicting a group of children engaged in what appears to be a collaborative learning activity. The focus is primarily on the hands and the interaction between them, highlighting the essence of teamwork and shared effort.

Composition and Perspective:

The shot is taken from a slightly elevated perspective, looking down onto a table where the children are seated. The close-up framing draws attention to the hands, with their varying skin tones, and the documents scattered on the table, creating a sense of intimacy and involvement.

Details:

- Hands: The most prominent feature of the image is the children's hands. Their positions suggest that they are working together, possibly pointing, holding a pencil, or touching a shared object.

- Children: The image features the torsos and hands of several children, with the faces of a few partially visible.

- Documents: Scattered on the table are several pages with writing on them. The text might be part of the exercise.

Mood and Atmosphere:

The black and white format and the concentration on hands and collaboration give the photo a serious but encouraging tone. It could evoke themes of learning, cooperation, and diversity. The image suggests a moment of shared effort and the collaborative spirit of the children.

Text analysis

Amazon