Machine Generated Data

Tags

Amazon

created on 2022-01-09

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| portrait | 43.4 |
| adult | 37.5 |
| face | 34.1 |
| person | 34.1 |
| attractive | 31.5 |
| black | 31.4 |
| sexy | 26.5 |
| people | 26.2 |
| pretty | 25.9 |
| fashion | 24.9 |
| hair | 23.8 |
| model | 23.4 |
| women | 21.4 |
| happy | 21.3 |
| brunette | 20.1 |
| skin | 18 |
| cute | 18 |
| smile | 17.8 |
| looking | 17.6 |
| lips | 17.6 |
| sensual | 17.3 |
| sensuality | 17.3 |
| world | 16.7 |
| lifestyle | 16.6 |
| eyes | 16.4 |
| expression | 16.2 |
| human | 15.8 |
| child | 15.1 |
| lady | 14.6 |
| elegance | 14.3 |
| love | 14.2 |
| man | 14.1 |
| smiling | 13.8 |
| close | 13.7 |
| make | 13.6 |
| head | 13.4 |
| eye | 13.4 |
| style | 13.4 |
| look | 13.2 |
| male | 13.1 |
| makeup | 12.9 |
| youth | 12.8 |
| two | 12.7 |
| couple | 12.2 |
| dress | 11.8 |
| dark | 11.7 |
| posing | 11.6 |
| one | 11.2 |
| elegant | 11.1 |
| happiness | 11 |
| girls | 10.9 |
| 20s | 10.1 |
| seat belt | 10 |
| studio | 9.9 |
| monochrome | 9.8 |
| adolescent | 9.8 |
| juvenile | 9.7 |
| body | 9.6 |
| hands | 9.6 |
| car | 9.2 |
| long | 9.2 |
| cover girl | 9.1 |
| teenager | 9.1 |
| hand | 9.1 |
| cheerful | 8.9 |
| blond | 8.9 |
| closeup | 8.8 |
| bride | 8.6 |
| bow tie | 8.5 |
| casual | 8.5 |
| mouth | 8.5 |
| cosmetics | 8.4 |
| joy | 8.4 |
| emotion | 8.3 |
| nice | 8.3 |
| safety belt | 8 |
| erotic | 7.9 |
| together | 7.9 |
| clothing | 7.8 |
| married | 7.7 |
| gorgeous | 7.3 |
| lovely | 7.1 |
| restraint | 7.1 |

Google

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| White | 92.2 |
| Mouth | 90.7 |
| Black | 89.8 |
| Flash photography | 88.5 |
| Happy | 85.7 |
| Black-and-white | 85.7 |
| Gesture | 85.3 |
| Style | 84.1 |
| Eyelash | 82.9 |
| Cool | 81.5 |
| Adaptation | 79.3 |
| Monochrome photography | 75.5 |
| Beauty | 75.3 |
| Monochrome | 74.6 |
| Font | 68.1 |
| Event | 67.7 |
| Child | 65.6 |
| Stock photography | 64.2 |
| Portrait photography | 61.2 |
| Photo caption | 61.2 |

Microsoft

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| human face | 98.9 |
| text | 97.4 |
| person | 93.9 |
| clothing | 86.3 |
| black and white | 76 |
| fashion accessory | 59.4 |
| woman | 56.7 |
| portrait | 55.2 |
| hair | 48.1 |

Color Analysis

Face analysis


AWS Rekognition

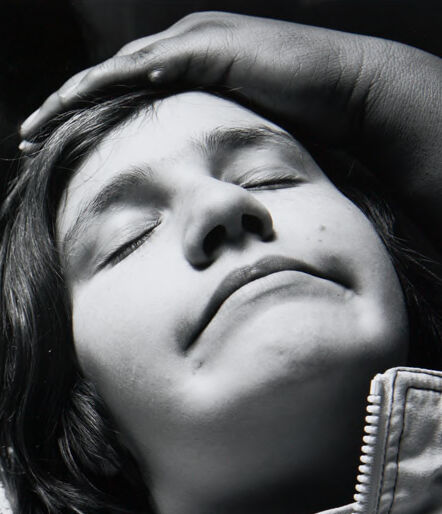

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Female, 81.1% |
| Calm | 53.1% |
| Sad | 25.3% |
| Happy | 9.8% |
| Fear | 3% |
| Confused | 3% |
| Surprised | 2.2% |
| Disgusted | 2.1% |
| Angry | 1.6% |

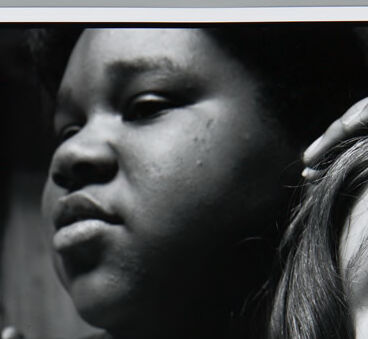

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Female, 85.9% |
| Angry | 33.3% |
| Sad | 28.7% |
| Calm | 24% |
| Disgusted | 7.5% |
| Fear | 3.2% |
| Surprised | 1.4% |
| Confused | 1.2% |
| Happy | 0.7% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 19 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 72.4% |
| people portraits | 26.9% |

Captions

Microsoft

created by unknown on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a man and a woman taking a selfie | 28.7% |

Clarifai

created by general-english-image-caption-blip on 2025-05-15

| Caption | Confidence |
| --- | --- |
| a photograph of a woman is sitting on a couch and a man is holding a fan | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-09

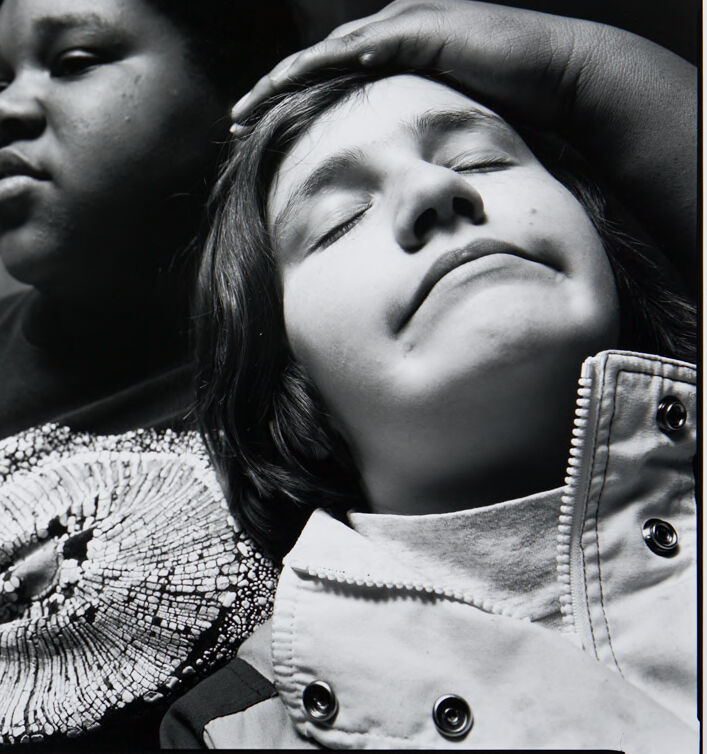

Here's a description of the image:

Overall Impression:

The image is a black and white close-up photograph that evokes a feeling of intimacy and tenderness. It features two individuals, likely in a moment of connection.

Subject and Composition:

- Two people are the main subjects, positioned close together.

- One person is looking up with their eyes closed.

- The other person has their hand on the first individual's head.

- The shot appears to be taken indoors.

Details and Atmosphere:

- The black and white format enhances the emotional impact, emphasizing the textures and contrasts in the scene.

- The light is focused on the faces, casting shadows and bringing the subjects forward.

- The overall composition and the gesture of the hand suggest a sense of comfort and care.

Possible interpretations:

The image could be interpreted as a moment of closeness, perhaps involving a relationship between the individuals. It may evoke feelings of care, empathy, and support.

Created by gemini-2.0-flash on 2025-05-09

The black and white photograph captures an intimate moment between two individuals. On the left, a person with dark skin is visible from the chest up. They are wearing a t-shirt with a distinctive design that looks like an abstract floral or organic pattern. They look off to the left side.

The individual on the right is positioned closer to the camera and slightly tilted, with their eyes closed. Their fair-toned face is illuminated, and their chin is tilted upwards. A hand, presumably belonging to the first person, rests gently on their forehead. They are wearing a collared jacket, and the details of the collar, zipper, and snaps are clearly visible.

The background is somewhat blurred and indistinct, drawing the viewer's focus to the subjects and their interaction. The photograph is a monochromatic study of textures, emotions, and the interplay of light and shadow.