Microsoft

Created by unknown on 2022-01-09

Clarifai

Created by general-english-image-caption-blip on 2025-05-03

Google Gemini

Created by gemini-2.0-flash on 2025-05-17

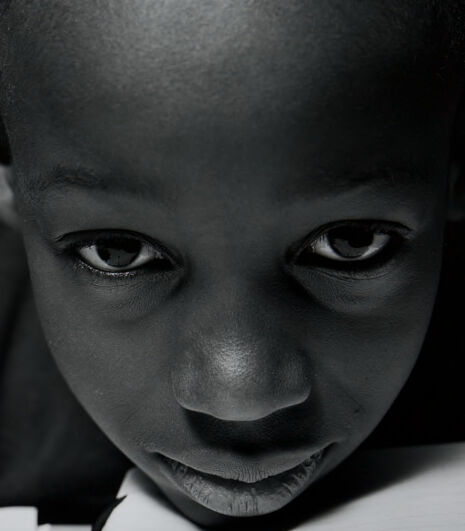

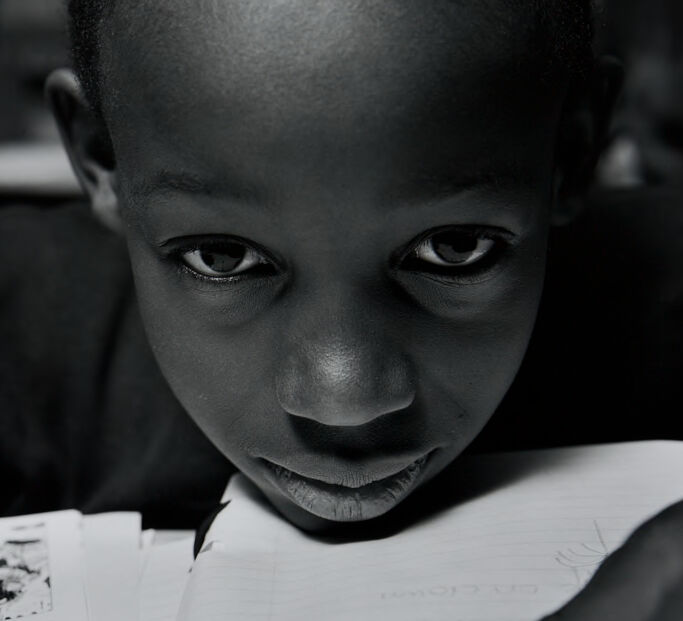

The image is a close-up, black-and-white portrait of a young boy. He is looking directly at the camera with a somewhat intense yet gentle gaze. The camera angle is slightly above him, giving a sense of vulnerability. The boy has short, dark hair and smooth skin. His face is well lit, emphasizing his features.

He is leaning over a notebook, suggesting that he is either writing or studying. Beside the notebook is a book with a picture and some text, along with what appears to be a crayon. The overall composition is simple and direct, with the focus solely on the boy and his expression. The black-and-white tones add a timeless, classic feel to the image.

Created by gemini-2.0-flash-lite on 2025-05-17

Here's a description of the image:

The image is a close-up, black-and-white photograph of a young Black boy. He is looking directly at the camera with a serious, focused expression. His face is the primary focus, filling most of the frame. His dark eyes have a subtle glint, and his lips are slightly parted. He is leaning over a notebook, possibly doing schoolwork. A few pages of what appears to be text and a dark crayon rest on the table. The lighting appears soft, creating subtle contrast on his face and highlighting the details of his features. There is a white border around the image.