Machine Generated Data

Tags

Amazon

created on 2019-06-05

Clarifai

created on 2019-06-05

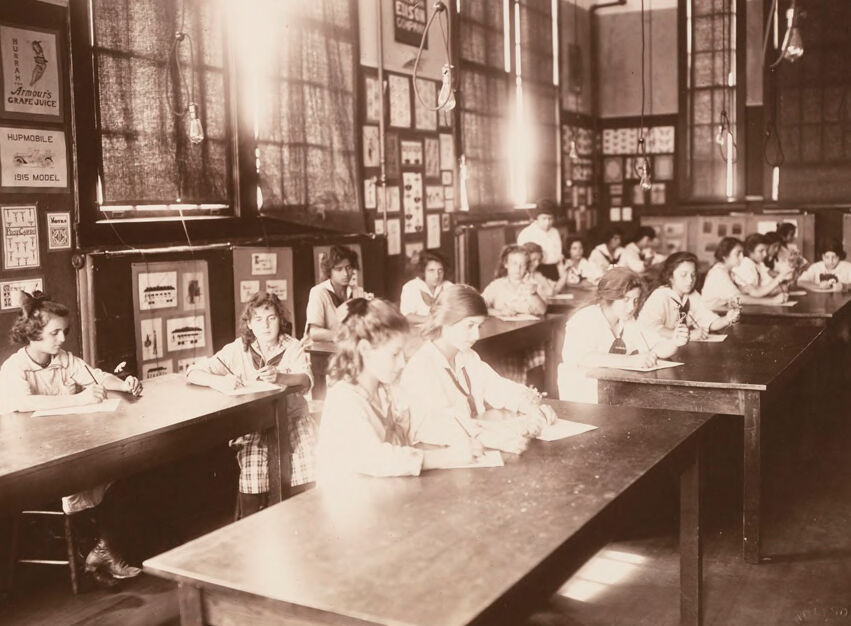

| Tag | Score |
|---|---|
| people | 99.9 |
| furniture | 99.8 |
| room | 99.3 |
| group | 99.2 |
| many | 99.1 |
| desk | 98.9 |
| adult | 98.7 |
| group together | 97.5 |
| table | 96.8 |
| chair | 95.9 |
| man | 95.5 |
| education | 94.8 |
| woman | 93.8 |
| seat | 93.6 |
| indoors | 91.5 |
| administration | 90.9 |
| several | 90.4 |
| dining room | 89 |
| employee | 88 |
| monochrome | 87.4 |

Imagga

created on 2019-06-05

Google

created on 2019-06-05

| Tag | Score |
|---|---|
| Photograph | 96.2 |
| Snapshot | 87.2 |
| Room | 86.3 |
| Table | 71.7 |
| Furniture | 69.1 |
| Classroom | 64.6 |
| Stock photography | 64 |
| Photography | 62.4 |
| Class | 60.4 |
| History | 54.1 |
| Building | 53.4 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Female, 50.9% |
| Disgusted | 45.4% |
| Happy | 45.4% |
| Angry | 46.5% |
| Surprised | 45.7% |
| Sad | 48.1% |
| Confused | 46.9% |
| Calm | 46.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 14-25 |
| Gender | Male, 51.4% |
| Confused | 45.7% |
| Surprised | 45% |
| Angry | 46.1% |
| Happy | 45.1% |
| Sad | 47.1% |
| Calm | 50.9% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 9-14 |
| Gender | Male, 53.9% |
| Happy | 46.3% |
| Angry | 47.3% |
| Calm | 46.4% |
| Confused | 46.2% |
| Disgusted | 46% |
| Surprised | 46% |
| Sad | 46.9% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-27 |
| Gender | Female, 54.6% |
| Happy | 46.1% |
| Sad | 45.7% |
| Confused | 45.5% |
| Calm | 50.7% |
| Angry | 46% |
| Disgusted | 45.3% |
| Surprised | 45.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 19-36 |
| Gender | Female, 52.5% |
| Confused | 45.4% |
| Angry | 45.9% |
| Disgusted | 45.2% |
| Calm | 46.5% |
| Happy | 45.3% |
| Surprised | 45.3% |
| Sad | 51.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-38 |
| Gender | Female, 50.5% |
| Disgusted | 49.5% |
| Sad | 50% |
| Calm | 50% |
| Confused | 49.5% |
| Happy | 49.5% |
| Surprised | 49.5% |
| Angry | 49.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Female, 53.7% |
| Calm | 46.3% |
| Happy | 45.1% |
| Sad | 52.6% |
| Angry | 45.4% |
| Confused | 45.2% |
| Disgusted | 45.1% |
| Surprised | 45.2% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-27 |
| Gender | Female, 50.3% |
| Disgusted | 49.6% |
| Confused | 49.5% |
| Happy | 49.5% |
| Sad | 49.5% |
| Surprised | 49.5% |
| Calm | 49.5% |
| Angry | 50.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 17-27 |
| Gender | Female, 50.2% |
| Happy | 49.6% |
| Surprised | 49.6% |
| Confused | 49.5% |
| Disgusted | 49.7% |
| Calm | 49.8% |
| Angry | 49.7% |
| Sad | 49.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-38 |
| Gender | Female, 50.2% |
| Angry | 49.6% |
| Disgusted | 49.6% |
| Sad | 49.6% |
| Surprised | 49.6% |
| Happy | 49.6% |
| Calm | 49.7% |
| Confused | 49.7% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Female, 50.5% |
| Angry | 49.6% |
| Calm | 49.7% |
| Sad | 50% |
| Surprised | 49.5% |
| Disgusted | 49.5% |
| Happy | 49.6% |
| Confused | 49.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 15-25 |
| Gender | Female, 53.9% |
| Angry | 46.3% |
| Disgusted | 46.5% |
| Sad | 49.9% |
| Calm | 45.5% |
| Happy | 45.5% |
| Surprised | 45.7% |
| Confused | 45.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 17-27 |
| Gender | Female, 50.1% |
| Calm | 49.7% |
| Sad | 50.1% |
| Surprised | 49.5% |
| Angry | 49.6% |
| Happy | 49.5% |
| Confused | 49.5% |
| Disgusted | 49.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 35-52 |
| Gender | Female, 50.2% |
| Angry | 49.6% |
| Happy | 49.6% |
| Confused | 49.5% |
| Surprised | 49.6% |
| Sad | 49.6% |
| Disgusted | 50% |
| Calm | 49.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 50.5% |
| Happy | 49.8% |
| Angry | 49.6% |
| Calm | 49.7% |
| Sad | 49.7% |
| Surprised | 49.6% |
| Confused | 49.6% |
| Disgusted | 49.6% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 50.3% |
| Sad | 49.8% |
| Calm | 49.8% |
| Happy | 49.7% |
| Surprised | 49.5% |
| Angry | 49.6% |
| Disgusted | 49.5% |
| Confused | 49.6% |

Feature analysis

Categories

Imagga

| Category | Score |
|---|---|
| interior objects | 99.9% |

Captions

Microsoft

created on 2019-06-05

| Caption | Score |
|---|---|
| a dining room table | 96% |
| a dining room table in front of a window | 93.1% |
| a kitchen with a dining table | 89.9% |

Text analysis

Amazon

Armour's

MODEL

EISO

ERSON

CoP

Armours

GRAPE JUICE

HUPMOBILE

191S MODEL

TT

TT

ALESO

ERSON

CoP

JUICE

191S

ALESO

Armours

GRAPE

HUPMOBILE

MODEL

TT