Machine Generated Data

Tags

Amazon

created on 2019-06-05

Clarifai

created on 2019-06-05

Imagga

created on 2019-06-05

| Tag | Confidence |
|---|---|
| automaton | 34.5 |
| old | 19.5 |
| shop | 17 |
| art | 15.2 |
| home appliance | 14.1 |
| machine | 14.1 |
| sculpture | 14 |
| antique | 13.7 |
| sewing machine | 13.6 |
| vintage | 13.2 |
| retro | 13.1 |
| decoration | 13 |
| table | 12.7 |
| history | 12.5 |
| ancient | 12.1 |
| mercantile establishment | 12.1 |
| statue | 11.3 |
| textile machine | 11 |
| interior | 10.6 |
| man | 10.5 |
| historical | 10.3 |
| architecture | 10.1 |
| case | 10 |
| appliance | 9.9 |
| device | 9.8 |
| glass | 9.5 |
| party | 9.5 |
| monument | 9.3 |
| barbershop | 9.2 |
| historic | 9.2 |
| travel | 9.2 |
| city | 9.1 |
| room | 8.9 |
| life | 8.8 |
| home | 8.8 |
| celebration | 8.8 |
| house | 8.4 |
| traditional | 8.3 |
| tourism | 8.2 |
| place of business | 8 |
| detail | 8 |
| medical | 7.9 |
| holiday | 7.9 |
| people | 7.8 |
| cup | 7.7 |
| men | 7.7 |
| laboratory | 7.7 |
| culture | 7.7 |
| building | 7.6 |
| famous | 7.4 |
| kitchen | 7.3 |
| metal | 7.2 |
| day | 7.1 |

Google

created on 2019-06-05

| Tag | Confidence |
|---|---|
| Photograph | 96.1 |
| Snapshot | 84.1 |
| Stock photography | 71.8 |
| Art | 67.7 |
| Room | 65.7 |
| Visual arts | 64.9 |
| Classic | 56.7 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 78.6% |
| Sad | 50.3% |
| Angry | 38.6% |
| Calm | 5.2% |
| Confused | 1.1% |
| Happy | 0.9% |
| Disgusted | 2.7% |
| Surprised | 1.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 17-27 |
| Gender | Female, 50.4% |
| Calm | 54.4% |
| Disgusted | 45% |
| Surprised | 45% |
| Confused | 45.1% |
| Angry | 45.2% |
| Sad | 45.2% |
| Happy | 45.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-38 |
| Gender | Female, 51.6% |
| Confused | 45.2% |
| Happy | 45.4% |
| Sad | 46.7% |
| Disgusted | 45.9% |
| Angry | 45.7% |
| Calm | 50.8% |
| Surprised | 45.4% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-38 |
| Gender | Female, 54.3% |
| Disgusted | 45.2% |
| Sad | 46.5% |
| Calm | 51.8% |
| Surprised | 45.1% |
| Angry | 45.6% |
| Happy | 45.3% |
| Confused | 45.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 14-23 |
| Gender | Male, 54% |
| Surprised | 46.1% |
| Happy | 45.2% |
| Calm | 49.6% |
| Disgusted | 45.3% |
| Confused | 46.5% |
| Sad | 46.4% |
| Angry | 46% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 17-27 |
| Gender | Female, 54.6% |
| Angry | 45.6% |
| Disgusted | 45.3% |
| Surprised | 45.8% |
| Happy | 46% |
| Calm | 50.6% |
| Sad | 45.7% |
| Confused | 46.1% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 23 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 24 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 97.3% |
| paintings art | 1.6% |
| food drinks | 1% |

Captions

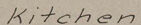

Microsoft

created on 2019-06-05

| Caption | Confidence |
|---|---|
| a vintage photo of a person holding a book | 62% |
| a vintage photo of a person | 61.9% |
| a vintage photo of a person in a box | 61.8% |

Text analysis

Amazon

the

In the Kitchen

In

Kitchen

ThE

DAANCER

24

1n the Ki tchen.

The

24

1n

the

Ki

tchen.

The