Machine Generated Data

Tags

Amazon

created on 2022-05-28

Clarifai

created on 2023-10-30

Imagga

created on 2022-05-28

| Tag | Confidence |
| --- | --- |
| military uniform | 36.3 |
| uniform | 35.5 |
| man | 28.2 |
| clothing | 26.2 |
| person | 26 |
| male | 24.1 |
| people | 19 |
| vehicle | 17.1 |
| covering | 17.1 |
| wheeled vehicle | 17.1 |
| adult | 15.6 |
| consumer goods | 14.6 |
| motor vehicle | 14 |
| black | 12.6 |
| military | 12.6 |
| golf equipment | 12.4 |
| world | 12.3 |
| portrait | 11.6 |
| equipment | 11.6 |
| fashion | 11.3 |
| old | 11.1 |
| weapon | 10.5 |
| human | 10.5 |
| danger | 10 |
| gun | 10 |
| attractive | 9.8 |
| soldier | 9.8 |
| art | 9.8 |
| war | 9.6 |
| couple | 9.6 |
| sports equipment | 9.3 |
| protection | 9.1 |
| holding | 9.1 |
| industrial | 9.1 |
| camouflage | 9 |
| sexy | 8.8 |
| army | 8.8 |
| mask | 8.8 |
| lifestyle | 8.7 |
| men | 8.6 |
| commodity | 8.2 |
| one | 8.2 |
| music | 8.2 |
| machine | 8.1 |
| conveyance | 8 |
| handsome | 8 |
| worker | 8 |
| work | 7.8 |
| model | 7.8 |
| device | 7.7 |
| wall | 7.7 |
| industry | 7.7 |
| youth | 7.7 |
| hand | 7.6 |
| leisure | 7.5 |
| sport | 7.4 |
| safety | 7.4 |
| light | 7.3 |
| dress | 7.2 |
| looking | 7.2 |
| body | 7.2 |
| religion | 7.2 |
| women | 7.1 |

Google

created on 2022-05-28

| Tag | Confidence |
| --- | --- |
| Wheel | 96.9 |
| Tire | 93.5 |
| Motor vehicle | 92.3 |
| Vehicle | 91.3 |
| Hat | 89.9 |
| Automotive design | 78.1 |
| Art | 78 |
| Tints and shades | 76.3 |
| Fender | 75.5 |
| Classic | 75.1 |
| Vintage clothing | 74.8 |
| Sitting | 71.1 |
| Classic car | 69.7 |
| Family car | 63.5 |
| Antique car | 62.5 |
| Stock photography | 62 |
| History | 62 |
| Vintage advertisement | 61.2 |
| Car | 61 |
| Vintage car | 60 |

Microsoft

created on 2022-05-28

| Tag | Confidence |
| --- | --- |
| man | 98.1 |
| person | 96.9 |
| clothing | 95.6 |
| text | 92.1 |
| old | 87.1 |
| outdoor | 85.5 |
| military vehicle | 63.6 |
| photograph | 54.1 |

Color Analysis

Face analysis

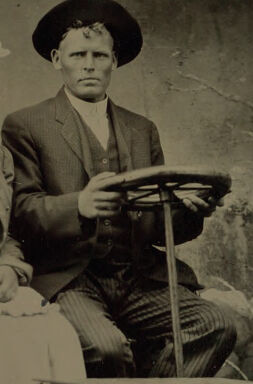

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-33 |
| Gender | Male, 100% |
| Calm | 99.6% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.1% |
| Angry | 0.1% |
| Disgusted | 0% |
| Happy | 0% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 27-37 |
| Gender | Male, 100% |
| Calm | 99.6% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.1% |
| Confused | 0.1% |
| Happy | 0% |
| Disgusted | 0% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 34 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| events parties | 94.3% |
| streetview architecture | 2% |
| text visuals | 1.9% |
| paintings art | 1% |

Captions

Microsoft

created on 2022-05-28

| Caption | Confidence |
| --- | --- |
| an old photo of a man riding on the back of a truck | 72% |
| an old photo of a man riding on the back of a vehicle | 71.5% |
| an old photo of a man | 71.4% |