Machine Generated Data

Tags

Amazon

created on 2022-01-09

Clarifai

created on 2023-10-25

Imagga

created on 2022-01-09

| Tag | Confidence |
| --- | --- |
| sand | 35.1 |
| tree | 30.2 |
| beach | 27.2 |
| landscape | 26.8 |
| sky | 23.6 |
| travel | 21.1 |
| sun | 20.9 |
| sea | 19.6 |
| trees | 18.7 |
| vacation | 17.2 |
| sunset | 17.1 |
| summer | 16.7 |
| ocean | 16.6 |
| snow | 16.1 |
| resort | 15 |
| park | 14.9 |
| tourism | 14.9 |
| water | 14 |
| forest | 13.9 |
| holiday | 13.6 |
| cattle | 13.4 |
| ox | 13.1 |
| outdoors | 12.8 |
| horse | 12.7 |
| field | 12.6 |
| rural | 12.3 |
| tropical | 11.9 |
| winter | 11.9 |
| grass | 11.9 |
| coast | 11.7 |
| country | 11.4 |
| shore | 11.4 |
| tourist | 11.2 |
| earth | 10.7 |
| soil | 10.7 |
| desert | 10.4 |
| sunrise | 10.3 |
| weather | 10.3 |
| ranch | 10.1 |
| island | 10.1 |
| outdoor | 9.9 |
| farm | 9.8 |
| swimsuit | 9.8 |
| scenic | 9.7 |
| light | 9.4 |
| old | 9.1 |
| scenery | 9 |
| people | 8.9 |
| camel | 8.9 |
| man | 8.7 |
| bovine | 8.7 |
| walking | 8.5 |
| clouds | 8.5 |
| palm | 8.4 |
| lake | 8.2 |
| peaceful | 8.2 |
| countryside | 8.2 |
| garment | 8.1 |
| male | 7.9 |
| wild | 7.8 |
| rock | 7.8 |
| sunny | 7.8 |
| sport | 7.7 |
| bikini | 7.7 |
| paradise | 7.5 |
| dark | 7.5 |
| city | 7.5 |
| silhouette | 7.5 |
| environment | 7.4 |
| morning | 7.2 |
| mountain | 7.1 |
| season | 7 |

Google

created on 2022-01-09

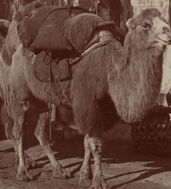

| Tag | Confidence |
| --- | --- |
| Tree | 88.9 |
| Working animal | 85.6 |
| Landscape | 77 |
| Tints and shades | 75.7 |
| Vintage clothing | 71.2 |
| Terrestrial animal | 67.2 |
| History | 66.2 |
| Pack animal | 66.1 |
| Monochrome | 65.1 |
| Visual arts | 62.2 |
| Livestock | 62 |
| Uniform | 61.8 |
| Herd | 58.5 |
| Military organization | 57.7 |
| Paper product | 57.6 |
| Monochrome photography | 56.1 |
| Crowd | 55.6 |
| Team | 54.7 |
| Bovine | 53.6 |
| House | 53 |

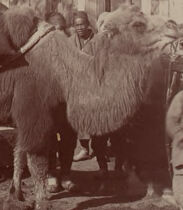

Color analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 99.7% |
| Sad | 49.9% |
| Calm | 28.5% |
| Happy | 7.8% |
| Confused | 3.6% |
| Disgusted | 3.5% |
| Angry | 3.1% |
| Fear | 2.3% |
| Surprised | 1.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-33 |
| Gender | Male, 97.7% |
| Sad | 42.8% |
| Calm | 15.9% |
| Fear | 14.4% |
| Happy | 9.5% |
| Disgusted | 6.8% |
| Angry | 4.4% |
| Surprised | 4.3% |
| Confused | 1.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 22-30 |
| Gender | Male, 98.7% |
| Calm | 36.6% |
| Sad | 33.3% |
| Disgusted | 17.8% |
| Fear | 6% |
| Angry | 2.4% |
| Happy | 2.3% |
| Confused | 1% |
| Surprised | 0.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-36 |
| Gender | Male, 99.1% |
| Disgusted | 88.6% |
| Calm | 5.6% |
| Happy | 1.5% |
| Angry | 1.2% |
| Sad | 0.9% |
| Surprised | 0.9% |
| Fear | 0.8% |
| Confused | 0.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-28 |
| Gender | Male, 97.5% |
| Sad | 98.4% |
| Fear | 1.3% |
| Calm | 0.1% |
| Angry | 0.1% |
| Disgusted | 0% |
| Confused | 0% |
| Happy | 0% |
| Surprised | 0% |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very likely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Likely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| nature landscape | 70% |
| paintings art | 21.9% |
| pets animals | 4.6% |

Captions

Microsoft

created on 2022-01-09

| Caption | Confidence |
| --- | --- |
| a group of people in an old photo of a horse | 96.6% |
| a vintage photo of a group of people standing next to a horse | 95.4% |
| a group of people standing in an old photo of a horse | 95.3% |

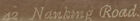

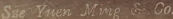

Text analysis

Amazon

Ming

Co.

Nanking

Road.

42 Nanking Road.

&

42

Sue Yuen Ming & Co.

58.

Sue

Yuen

52 Sse Yen Ming & Co.

42. Nankng Road.

52

Sse

Yen

Ming

&

Co.

42.

Nankng

Road.