Machine Generated Data

Tags

Amazon

created on 2019-05-31

Clarifai

created on 2019-05-31

Imagga

created on 2019-05-31

| Tag | Confidence |
|---|---|
| furniture | 29.7 |
| toaster | 24.9 |
| box | 24.8 |
| kitchen appliance | 24.1 |
| car | 23.3 |
| home appliance | 22.6 |
| vehicle | 17.9 |
| room | 17.6 |
| appliance | 16.5 |
| business | 16.4 |
| equipment | 16.2 |
| office | 15.6 |
| furnishing | 15.2 |
| container | 13.7 |
| man | 13.4 |
| work | 13.3 |
| 3d | 13.2 |
| floor | 13 |
| apparatus | 12.8 |
| home | 12.7 |
| crib | 12.6 |
| transportation | 12.5 |
| people | 12.3 |
| baby bed | 12 |
| bedroom | 11.9 |
| desk | 11.4 |
| travel | 11.3 |
| incubator | 10.9 |
| house | 10.9 |
| computer | 10.6 |
| table | 10.5 |
| device | 10.3 |
| modern | 9.8 |
| old | 9.7 |
| interior | 9.7 |
| technology | 9.6 |
| render | 9.5 |
| hospital | 9.4 |
| inside | 9.2 |
| transport | 9.1 |
| crate | 9 |
| chest | 8.9 |
| metal | 8.8 |
| auto | 8.6 |
| luxury | 8.6 |
| person | 8.5 |
| professional | 8.4 |
| machine | 8.4 |
| wood | 8.3 |
| window | 8.2 |
| truck | 8 |
| working | 7.9 |
| lifestyle | 7.9 |
| wooden | 7.9 |
| male | 7.8 |
| adult | 7.8 |
| chair | 7.7 |
| estate | 7.6 |
| wheel | 7.5 |
| seat | 7.4 |
| monitor | 7.3 |
| durables | 7.3 |
| file | 7.2 |
| black | 7.2 |
| worker | 7.1 |

Google

created on 2019-05-31

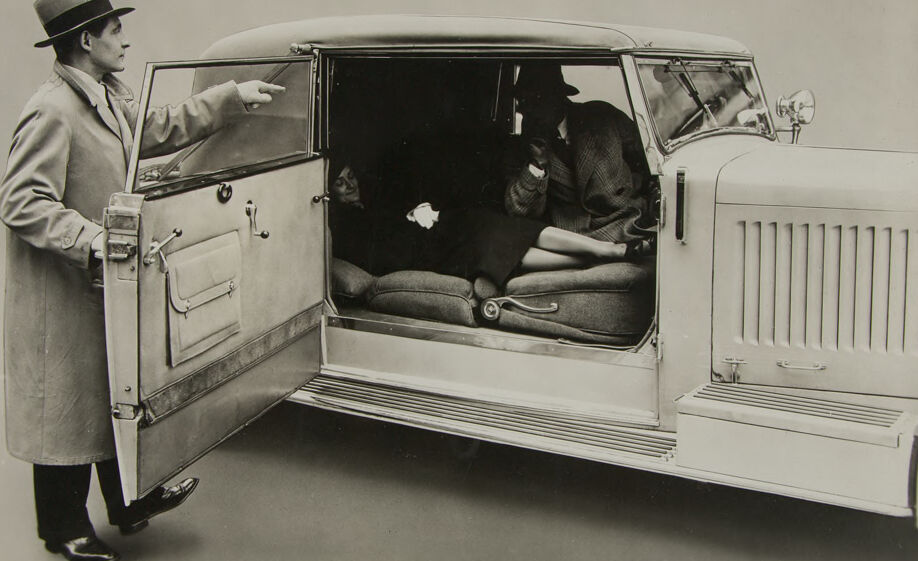

| Tag | Confidence |
|---|---|
| Classic | 91.5 |
| Motor vehicle | 90.1 |
| Car | 88.4 |
| Vehicle | 87.7 |
| Vintage car | 62.5 |
| Family car | 51.5 |

Microsoft

created on 2019-05-31

| Tag | Confidence |
|---|---|
| land vehicle | 92.8 |
| vehicle | 92.7 |
| old | 81.4 |
| car | 59 |
| wheel | 52.5 |

Color Analysis

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 52.1% |
| Angry | 45.2% |
| Sad | 45.4% |
| Surprised | 45.2% |
| Happy | 45.2% |
| Calm | 53.8% |
| Disgusted | 45.1% |
| Confused | 45.1% |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 99.2% |

Captions

Microsoft

created on 2019-05-31

| Caption | Confidence |
|---|---|
| a vintage photo of a truck | 69.9% |
| a vintage photo of a person in a car | 54.9% |
| a vintage photo of a person | 54.8% |