Machine Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 51.9% |
| Confused | 45.1% |
| Surprised | 45.2% |
| Sad | 45.3% |
| Angry | 45.2% |
| Happy | 45.1% |
| Calm | 54% |
| Disgusted | 45.1% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 96.7% |
| food drinks | 1.2% |
| paintings art | 1.1% |

Captions

Microsoft

Created by unknown on 2019-05-31

| Caption | Confidence |
| --- | --- |
| a vintage photo of a truck | 80.8% |
| an old photo of a truck | 80.7% |
| old photo of a truck | 75.4% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-06

| Caption | Confidence |
| --- | --- |
| a photograph of a man in a hat and coat is standing in front of a car | -100% |

Google Gemini

Created by gemini-2.0-flash on 2025-05-07

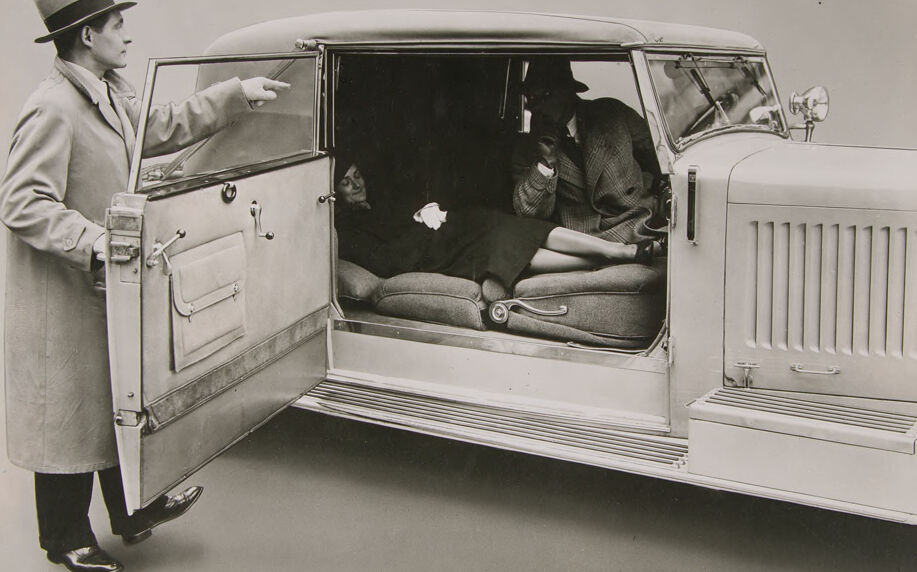

The image shows a 1930s car with a man standing in front of the open driver's side door. Inside the car, a woman is reclined on a pillow with a man sitting next to her.

The man standing in front of the car is wearing a fedora, a long trench coat, dark pants, and dress shoes. He is pointing into the car with his right arm outstretched. The car is light-colored, with a large vertical grille. The interior is fitted with a long seat that looks like a bed, on which the woman is reclined. The man sitting next to her is wearing a fedora and a checkered suit jacket.

Created by gemini-2.0-flash-lite on 2025-05-07

Overall Scene:

The image is a vintage, black-and-white photograph. It appears to be a studio shot or a carefully staged photograph. The focal point is a luxury car with a large open door.

The Car:

- The car is a classic, likely from the early to mid-20th century.

- It has a distinctive design, with a streamlined body and a prominent radiator grille.

- The car has a spacious interior, likely designed for passenger comfort.

The People:

- Man at the Door: A man in a hat, trench coat, and dark slacks is standing near the open door. He is pointing towards the interior, as if to draw attention to something inside.

- Inside the Car: There appear to be two other people inside the car. One is a woman resting, the other a man, perhaps in conversation or involved in an activity.

Atmosphere:

- The scene has a sense of luxury and exclusivity.

- The positioning of the individuals implies a story or a moment in time.