Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 10-15 |
| Gender | Female, 78.1% |
| Confused | 14.6% |
| Happy | 3.8% |
| Surprised | 7.2% |
| Calm | 17.9% |
| Sad | 31.4% |
| Angry | 23.5% |
| Disgusted | 1.7% |

Feature analysis

Amazon

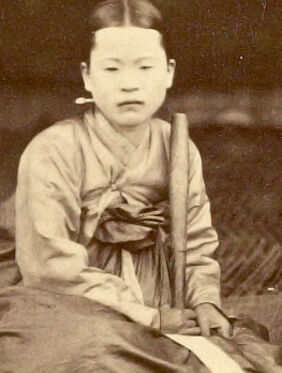

| Label | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| people portraits | 50.7% |
| paintings art | 48.6% |

Captions

Microsoft

created by unknown on 2019-07-07

| Caption | Confidence |
| --- | --- |
| a vintage photo of a group of people posing for the camera | 95.5% |
| a vintage photo of a group of people posing for a picture | 95.4% |
| a vintage photo of a group of people sitting in chairs | 95.3% |

Clarifai

created by general-english-image-caption-blip on 2025-05-04

| Caption | Confidence |
| --- | --- |
| a photograph of a group of people sitting on a blanket | -100% |

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-02

Certainly! Here's a description of the image:

Overall Impression:

The image is a sepia-toned photograph depicting three young women in traditional Korean clothing. The scene seems to be a moment captured from everyday life, possibly from the late 19th or early 20th century, given the style of photography and clothing.

Details:

Clothing: All three women are wearing Hanbok, the traditional Korean dress, which includes a jacket-like top and a full skirt. Their hair is pulled back, in some cases parted and tied at the back, and they are wearing small earrings.

Pose and Activities: The women are seated on a platform or a woven mat. In the center is a piece of what looks like equipment used for pounding or grinding. The woman in the center appears to be resting a leg, covered in white cloth, on the equipment. The other two women sit on either side, holding what appear to be long wooden sticks.

Expression: They all have solemn or serious expressions.

Setting: The background is simple, hinting at an indoor or outdoor setting. There are some tools or items on the woven mat.

Overall Mood:

The photograph carries a sense of history and offers a glimpse into the past. It conveys the simplicity of everyday life in that era.

Created by gemini-2.0-flash on 2025-05-02

Here is a description of the image:

The image is a vintage, sepia-toned photograph featuring three young women, possibly teenagers, dressed in traditional Korean attire called Hanbok. They are seated close together, arranged horizontally across the frame.

The woman on the left is wearing a Hanbok with a dark top and a light-colored skirt. She holds a wooden stick in her hand, and a pair of wooden shoes is visible on the mat in front of her. The woman in the middle is seated on a small wooden stool with a light-colored fabric draped over it. She is also wearing a Hanbok and has a slightly somber expression. The woman on the right is similarly dressed in a light-colored Hanbok and is also holding a wooden stick. All three women have their hair neatly styled, and they appear to be of East Asian descent.

In the foreground, on the woven mat, lie several items, including the aforementioned wooden shoes and what looks like a traditional tool or apparatus. The background is a soft, out-of-focus blend of tones that do not reveal any specific details about the setting.

The overall impression is one of historical documentation, capturing a moment in time with subjects posed in a studio or outdoor setting. The photograph's style and tone suggest it was taken in the late 19th or early 20th century.