Machine Generated Data

Tags

Amazon

created on 2019-11-19

Clarifai

created on 2019-11-19

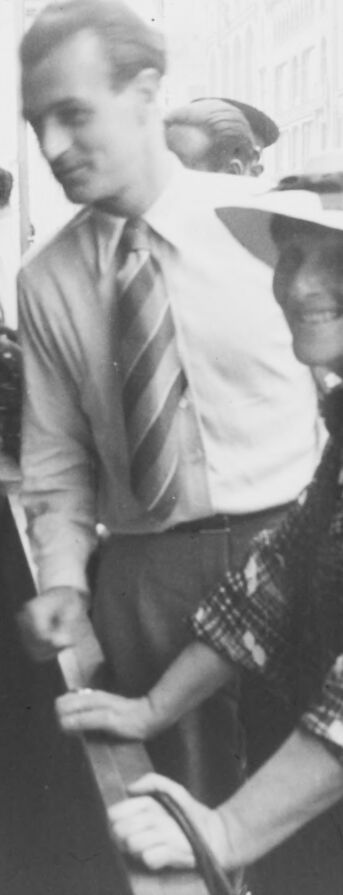

| Tag | Confidence |
|---|---|
| people | 99.8 |
| adult | 98 |
| music | 97.6 |
| musician | 96 |
| group together | 95.9 |
| two | 95.5 |
| man | 95.4 |
| group | 95.4 |
| one | 94.6 |
| wear | 93.7 |
| singer | 92.4 |
| administration | 92.4 |
| vehicle | 91.9 |
| microphone | 91.3 |
| woman | 90.7 |
| three | 90.3 |
| several | 89.1 |
| leader | 88 |
| stringed instrument | 86 |
| instrument | 85.3 |

Imagga

created on 2019-11-19

Google

created on 2019-11-19

| Tag | Confidence |
|---|---|
| Photograph | 97.3 |
| Snapshot | 88.7 |
| Gentleman | 77.6 |
| Photography | 70.6 |
| Black-and-white | 68.3 |
| Stock photography | 59.4 |

Microsoft

created on 2019-11-19

| Tag | Confidence |
|---|---|
| person | 99.8 |
| clothing | 97.6 |
| human face | 93.5 |
| man | 91.8 |
| text | 80.9 |
| black and white | 76.4 |
| standing | 75.1 |
| posing | 70.7 |
| fashion accessory | 64.9 |
| hat | 60.4 |
| crowd | 0.9 |

Color Analysis

Face analysis


AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-42 |
| Gender | Male, 56.7% |
| Angry | 0.8% |
| Surprised | 1.1% |
| Fear | 0.5% |
| Happy | 59.6% |
| Sad | 1.9% |
| Confused | 1.1% |
| Calm | 34.5% |
| Disgusted | 0.5% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 8-18 |
| Gender | Female, 75.1% |
| Disgusted | 0.8% |
| Confused | 1.1% |
| Fear | 0.5% |
| Happy | 3.4% |
| Calm | 82% |
| Angry | 4.7% |
| Surprised | 1.4% |
| Sad | 6.1% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 48-66 |
| Gender | Female, 53.8% |
| Happy | 45.1% |
| Calm | 46.3% |
| Angry | 49.7% |
| Surprised | 45.1% |
| Disgusted | 45.3% |
| Confused | 45.6% |
| Fear | 45.2% |
| Sad | 47.7% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 37 |
| Gender | Male |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 68.9% |
| events parties | 27.1% |
| food drinks | 1.7% |

Captions

Microsoft

created on 2019-11-19

| Caption | Confidence |
|---|---|
| a group of people posing for a photo in front of a window | 88.1% |
| a group of people standing in front of a window | 88% |
| a group of people posing for a photo | 87.9% |