Machine Generated Data

Tags

Amazon

created on 2023-01-09

| Tag | Confidence |
| --- | --- |
| Clothing | 100 |
| Path | 99.5 |
| Sidewalk | 99.5 |
| Person | 99.3 |
| Man | 99.3 |
| Adult | 99.3 |
| Male | 99.3 |
| Shoe | 99 |
| Footwear | 99 |
| Person | 98.9 |
| Man | 98.9 |
| Adult | 98.9 |
| Male | 98.9 |
| Handbag | 98.8 |
| Bag | 98.8 |
| Accessories | 98.8 |
| Person | 98.6 |
| Man | 98.6 |
| Adult | 98.6 |
| Male | 98.6 |
| Person | 98.6 |
| Male | 98.6 |
| Child | 98.6 |
| Boy | 98.6 |
| Person | 98.3 |
| Person | 98.1 |
| Person | 98.1 |
| Man | 98.1 |
| Adult | 98.1 |
| Male | 98.1 |
| Shoe | 98 |
| Person | 97.7 |
| Person | 97.7 |
| Shoe | 97.6 |
| Person | 97.6 |
| Adult | 97.6 |
| Woman | 97.6 |
| Female | 97.6 |
| Shoe | 97.6 |
| Handbag | 97.5 |
| Person | 97.2 |
| Person | 97.1 |
| Glasses | 96.6 |
| Shoe | 95.9 |
| Shoe | 95.7 |
| City | 95.7 |
| Shoe | 95.6 |
| Shoe | 93 |
| Shoe | 92.1 |
| Shoe | 91.6 |
| Shoe | 91.5 |
| Hat | 91.4 |
| Shoe | 91.2 |
| Coat | 90.9 |
| Shoe | 90.8 |
| Shoe | 87.8 |
| Handbag | 84.8 |
| Shoe | 84.3 |
| Glasses | 82.5 |
| Overcoat | 81.9 |
| Wheel | 80.7 |
| Machine | 80.7 |
| Car | 77.9 |
| Vehicle | 77.9 |
| Transportation | 77.9 |
| Hat | 77.6 |
| Street | 73.9 |
| Urban | 73.9 |
| Road | 73.9 |
| Handbag | 70.3 |
| Hat | 68.8 |
| Glasses | 65.9 |
| Hat | 65 |
| Car | 63.7 |
| Person | 60.4 |
| Glasses | 60.2 |
| Traffic Light | 58.9 |
| Light | 58.9 |
| Walking | 57.5 |
| Pants | 57.1 |
| Person | 56.7 |
| Dress | 55.7 |
| People | 55.5 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-01-09

| Tag | Confidence |
| --- | --- |
| people | 27.9 |
| sword | 24.8 |
| weapon | 22.8 |
| world | 22 |
| man | 21.5 |
| person | 20.5 |
| city | 19.9 |
| adult | 19.7 |
| sport | 18.8 |
| male | 16.4 |
| urban | 14.9 |
| clothing | 14.6 |
| women | 14.2 |
| active | 14.1 |
| shop | 13.9 |
| men | 13.7 |
| group | 12.9 |
| street | 12.9 |
| team | 12.5 |
| business | 11.5 |
| holiday | 11.5 |
| athlete | 11.4 |
| performer | 11.3 |
| legs | 11.3 |
| fashion | 11.3 |
| happy | 11.3 |
| lifestyle | 10.8 |
| player | 10.4 |
| portrait | 10.4 |
| black | 10.3 |
| play | 10.3 |
| action | 10.2 |
| model | 10.1 |
| shopping | 10.1 |
| girls | 10 |
| dress | 9.9 |
| costume | 9.9 |
| family | 9.8 |
| bags | 9.7 |
| interior | 9.7 |
| standing | 9.6 |
| walking | 9.5 |
| child | 9.4 |
| tradition | 9.2 |
| traditional | 9.1 |
| suit | 9 |
| gift | 8.6 |
| walk | 8.6 |
| stand | 8.5 |
| bag | 8.5 |
| ball | 8.5 |
| travel | 8.4 |
| clothes | 8.4 |
| hand | 8.4 |
| mother | 8.3 |
| indoor | 8.2 |
| dancer | 8.2 |
| style | 8.2 |
| game | 8 |
| celebration | 8 |
| happiness | 7.8 |
| mall | 7.8 |
| motion | 7.7 |
| attractive | 7.7 |
| winter | 7.7 |
| two | 7.6 |
| hat | 7.6 |
| human | 7.5 |
| fun | 7.5 |
| leisure | 7.5 |
| silhouette | 7.4 |
| dance | 7.3 |
| window | 7.3 |
| competition | 7.3 |
| children | 7.3 |
| present | 7.3 |
| success | 7.2 |
| smile | 7.1 |
| uniform | 7.1 |

Google

created on 2023-01-09

| Tag | Confidence |
| --- | --- |
| Black-and-white | 84.8 |
| Style | 83.9 |
| Plant | 78.2 |
| Monochrome photography | 76.7 |
| Monochrome | 72.8 |
| Window | 70.9 |
| Boot | 70.4 |
| Vintage clothing | 66.6 |
| Luggage and bags | 65.8 |
| Trench coat | 63.4 |
| Stock photography | 61.9 |
| Overcoat | 60 |
| Bag | 59.4 |
| Street | 58.9 |
| Art | 56.2 |
| Road | 52 |
| Retro style | 50.2 |

Color Analysis

Face analysis

Amazon

Microsoft

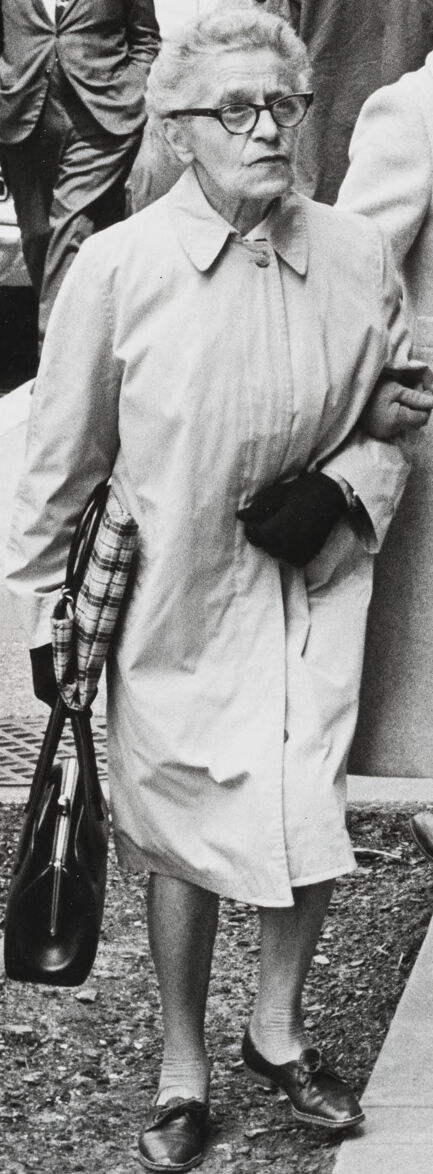

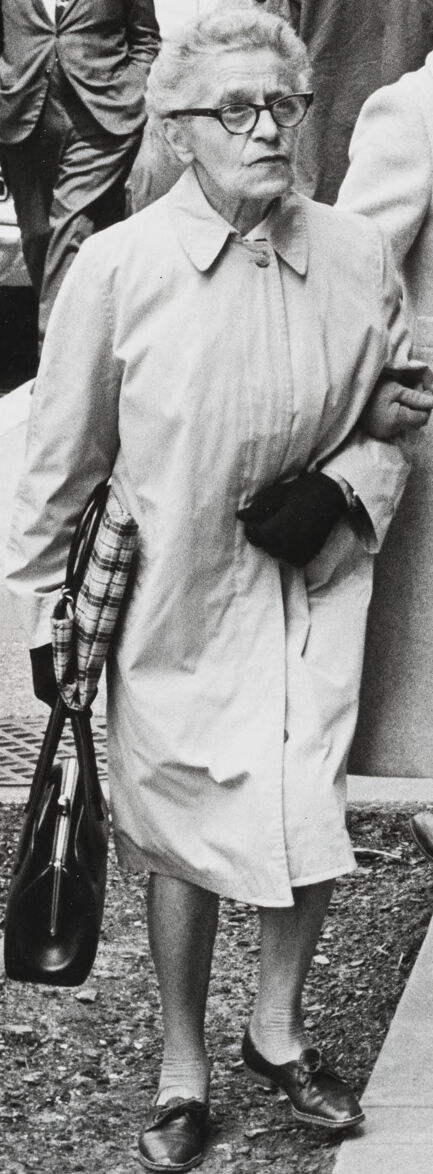

AWS Rekognition

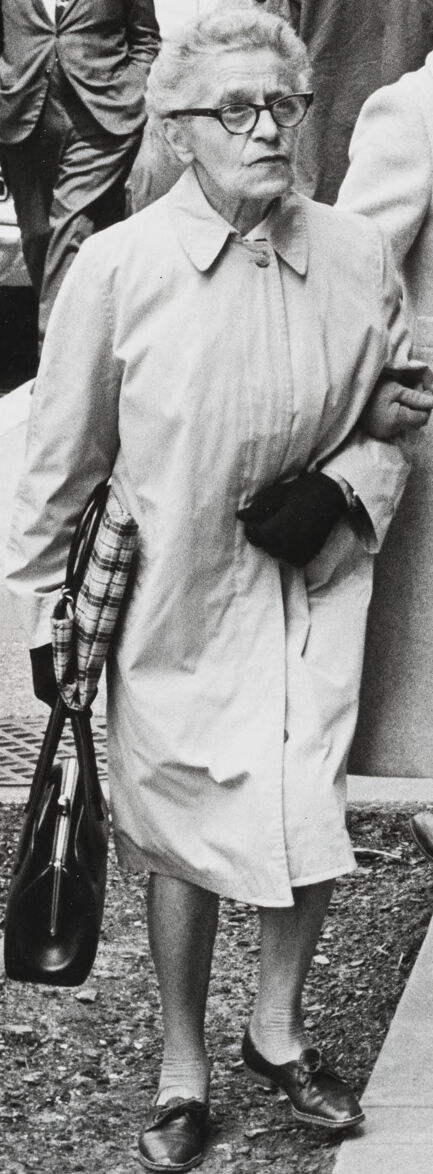

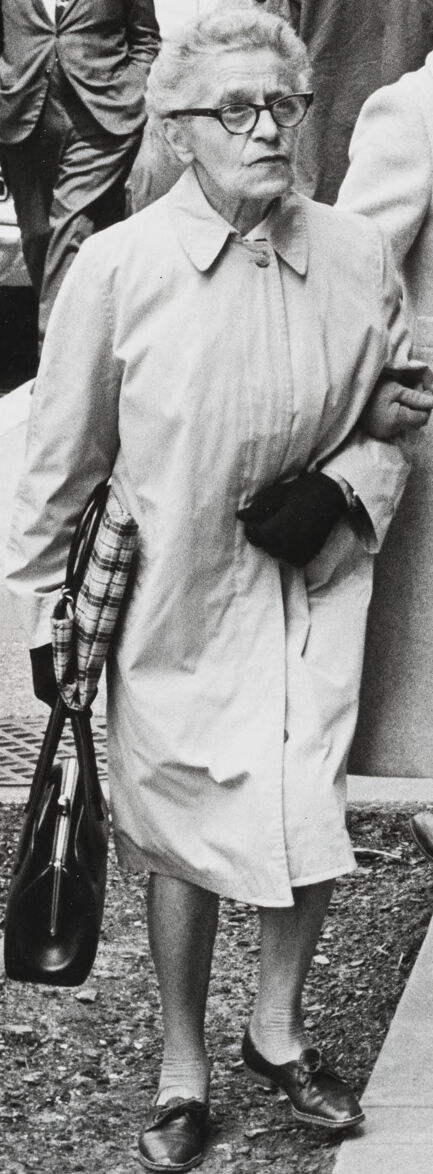

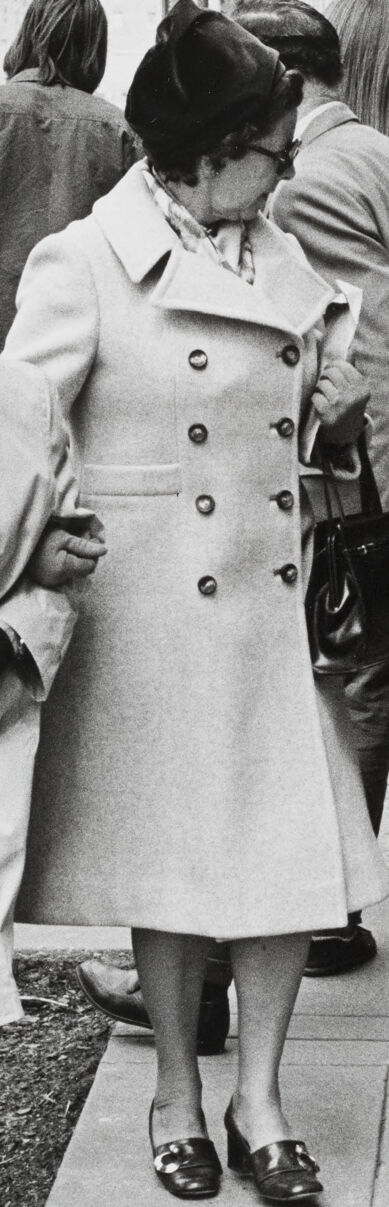

| Attribute | Value |
| --- | --- |
| Age | 60-70 |
| Gender | Female, 99.7% |
| Confused | 71.2% |
| Calm | 27.2% |
| Surprised | 6.6% |
| Fear | 5.9% |
| Sad | 2.2% |
| Angry | 0.3% |
| Disgusted | 0.1% |
| Happy | 0.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 71-81 |
| Gender | Male, 100% |
| Calm | 80.1% |
| Confused | 18.4% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Happy | 0.5% |
| Angry | 0.2% |
| Disgusted | 0.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 16-24 |
| Gender | Male, 50.2% |
| Calm | 76.4% |
| Happy | 9.7% |
| Sad | 8.1% |
| Fear | 6.6% |
| Surprised | 6.5% |
| Disgusted | 0.9% |
| Angry | 0.7% |
| Confused | 0.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Male, 100% |
| Calm | 54.1% |
| Confused | 35.3% |
| Surprised | 6.7% |
| Fear | 6% |
| Sad | 5.5% |
| Angry | 1.8% |
| Disgusted | 0.6% |
| Happy | 0.2% |

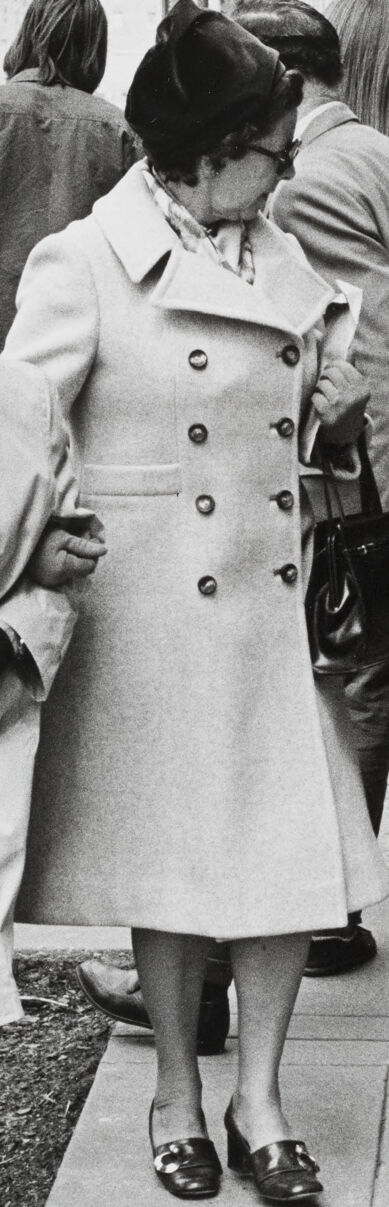

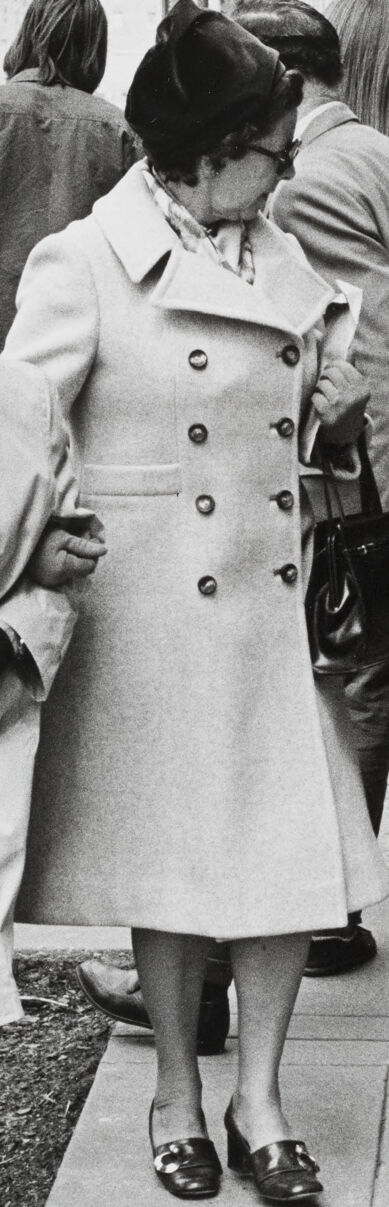

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 21-29 |
| Gender | Female, 80.5% |
| Calm | 66.8% |
| Sad | 63.7% |
| Surprised | 6.5% |
| Fear | 6% |
| Angry | 1.1% |
| Disgusted | 0.2% |
| Confused | 0.2% |
| Happy | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 67 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 29 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 60 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 99.3% |
| Person | 98.9% |
| Person | 98.6% |
| Person | 98.6% |
| Person | 98.3% |
| Person | 98.1% |
| Person | 98.1% |
| Person | 97.7% |
| Person | 97.7% |
| Person | 97.6% |
| Person | 97.2% |
| Person | 97.1% |
| Person | 60.4% |
| Person | 56.7% |
| Man | 99.3% |
| Man | 98.9% |
| Man | 98.6% |
| Man | 98.1% |
| Adult | 99.3% |
| Adult | 98.9% |
| Adult | 98.6% |
| Adult | 98.1% |
| Adult | 97.6% |
| Male | 99.3% |
| Male | 98.9% |
| Male | 98.6% |
| Male | 98.6% |
| Male | 98.1% |
| Shoe | 99% |
| Shoe | 98% |
| Shoe | 97.6% |
| Shoe | 97.6% |
| Shoe | 95.9% |
| Shoe | 95.7% |
| Shoe | 95.6% |
| Shoe | 93% |
| Shoe | 92.1% |
| Shoe | 91.6% |
| Shoe | 91.5% |
| Shoe | 91.2% |
| Shoe | 90.8% |
| Shoe | 87.8% |
| Shoe | 84.3% |
| Handbag | 98.8% |
| Handbag | 97.5% |
| Handbag | 84.8% |
| Handbag | 70.3% |
| Child | 98.6% |
| Boy | 98.6% |
| Woman | 97.6% |
| Female | 97.6% |
| Glasses | 96.6% |
| Glasses | 82.5% |
| Glasses | 65.9% |
| Glasses | 60.2% |
| Hat | 91.4% |
| Hat | 77.6% |
| Hat | 68.8% |
| Hat | 65% |
| Coat | 90.9% |
| Wheel | 80.7% |
| Car | 77.9% |
| Car | 63.7% |
| Traffic Light | 58.9% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 59.6% |
| people portraits | 32.9% |
| pets animals | 5.2% |
| nature landscape | 1% |

Captions

Microsoft

created by unknown on 2023-01-09

| Caption | Confidence |
| --- | --- |
| a group of people on a sidewalk | 92.9% |
| a group of people walking on a sidewalk | 92.8% |
| a group of people standing on a sidewalk | 91.6% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image depicts a busy urban scene on a city street. The photograph is in black and white, capturing a group of people in the foreground, some standing and others sitting or lying on the sidewalk. There appear to be several individuals assisting or interacting with the people on the ground. In the background, there are buildings, trees, and other pedestrians walking along the street. The overall atmosphere suggests a moment of activity or potential emergency on a city street.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is a black and white street photograph that appears to be from the 1960s or 1970s. The scene shows a contrast between different generations and social groups on a city sidewalk. In the foreground, there are two women in light-colored trench coats, appearing to be of an older generation, walking past. In the background, there appears to be some youth culture activity, with people in casual clothing and jeans. One person is lying on the sidewalk while another crouches nearby, perhaps taking a photograph or engaging in some street activity. The setting looks like a downtown urban area with buildings visible in the background. The photograph captures an interesting moment of social contrast and changing times in what appears to be an American city.

Text analysis

Amazon