Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 72-82 |
| Gender | Male, 98.8% |
| Calm | 98.5% |
| Surprised | 6.5% |
| Fear | 6% |
| Sad | 2.2% |
| Disgusted | 0.2% |
| Confused | 0.2% |
| Angry | 0.2% |
| Happy | 0.1% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.6% |

Categories

Imagga

created on 2022-12-12

| Category | Confidence |
| --- | --- |
| paintings art | 90.9% |
| people portraits | 7.8% |
| food drinks | 1% |

Captions

Microsoft

created by unknown on 2022-12-12

| Caption | Confidence |
| --- | --- |
| Elizabeth Catlett sitting at a table looking at a book | 65.9% |
| Elizabeth Catlett sitting at a table in front of a book | 64.7% |
| Elizabeth Catlett sitting in front of a book | 50.7% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip on 2025-05-13

a photograph of a woman sitting at a table with a painting of a woman

Created by general-english-image-caption-blip-2 on 2025-07-01

an older woman sitting at a table with a drawing

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-17

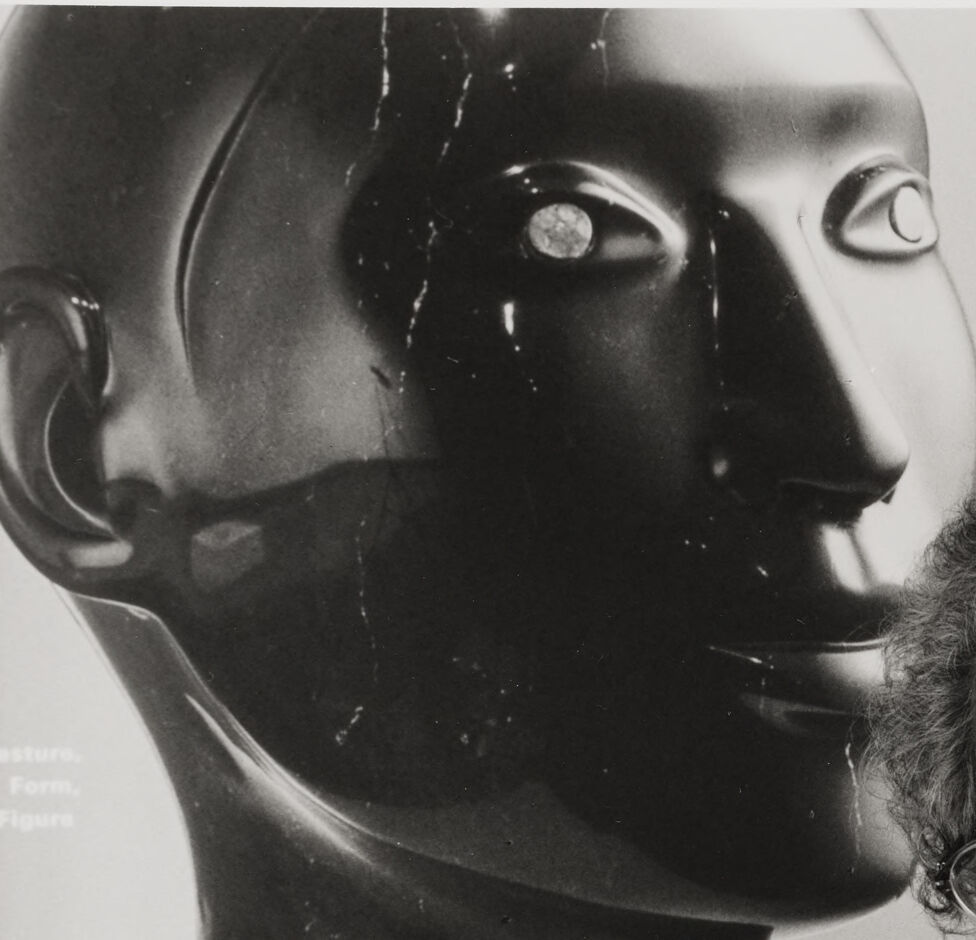

The image shows an individual seated at a table, wearing a light-colored shirt. The table features two striking black-and-white art pieces, which appear to be linocut or woodcut prints. One depicts a detailed portrait of a man, possibly in formal attire, while the other showcases a figure with draped attire in a contemplative pose. In the background, there is a sculpted mannequin head positioned on a shelf or surface. The setting suggests an artistic environment.

Created by gpt-4o-2024-08-06 on 2025-06-17

The image shows an elderly person wearing a light-colored blouse with subtle embroidered patterns, seated at a table with two black-and-white prints laid out in front of them. The prints appear to be artistic depictions of people. The first print on the left shows a portrait of a person with a prominent facial expression and an afro hairstyle, dressed in formal attire. The second print on the right depicts a person wearing a headscarf or hat, looking away from the viewer. In the background, on the left side, there is a mannequin head with a glossy surface resting on a shelf or table. The overall setting appears to be indoors, possibly an artist's studio or a gallery space.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-27

This black and white photograph shows an elderly artist wearing glasses and a white blouse, seated at what appears to be a work table. In front of them is a striking black and white woodcut or linocut print. Behind them is what appears to be a sculptural bust or head form in dark material. The image captures the artist in what seems to be their studio or workspace, examining or working on their bold, high-contrast printmaking artwork. The composition suggests this is a documentary-style photograph capturing an artist at work, likely from the mid to late 20th century.

Created by claude-3-haiku-20240307 on 2025-01-01

The image shows an older woman sitting at a table, examining some artwork or prints that are visible on the table in front of her. She appears to be an artist or someone with an interest in art, as suggested by the large sculpture or mask visible in the background behind her. The woman has curly grey hair and is wearing glasses, and she has a serious, contemplative expression on her face as she examines the artwork. Overall, the image conveys a sense of the woman's artistic sensibilities and creative focus.

Created by claude-3-opus-20240229 on 2025-01-01

The black and white photograph shows an elderly African American woman with glasses sitting at a table, looking thoughtfully at some artwork in front of her. Behind her is a sculpture or bust of a black male figure. The artwork on the table appears to be prints or drawings with bold, expressive lines depicting faces and figures. The composition suggests the woman is an artist, carefully examining and considering her creative works. The overall mood is contemplative and quietly powerful, capturing a moment of artistic reflection.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This black and white photograph shows an elderly artist sitting at a table with her artwork. She is wearing glasses and a light-colored embroidered top, and appears to be examining some black and white prints or woodcuts laid out on the table before her. In the background, there is a striking black mannequin head or sculptural bust with dramatic features. The artist appears to be in her later years, with gray curly hair, and the image suggests a lifetime dedicated to her artistic craft. The artwork visible on the table appears to be high-contrast prints or woodcuts showing portraits and figures rendered in bold black and white.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-10

The image is a black-and-white photograph of Elizabeth Catlett, an American artist, sitting at a table with her artwork.

Elizabeth Catlett is seated at a table, wearing a light-colored short-sleeved shirt and holding a piece of paper with her artwork on it. The artwork appears to be a print of a woman's face. She has short curly hair and is wearing glasses. Behind her, there is a large black sculpture of a human head on a shelf. The background of the image is a plain wall.

The overall atmosphere of the image suggests that Elizabeth Catlett is in her studio or workspace, surrounded by her art and tools. The presence of the sculpture behind her may indicate that she is working on a new project or experimenting with different mediums.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-10

The image depicts an elderly woman seated at a table, surrounded by artistic elements. She is dressed in a white short-sleeved shirt and has short, curly hair. The woman is intently focused on a piece of paper featuring a black-and-white drawing of a man's face, which she holds with both hands.

Behind her, a large black sculpture of a human head is visible, positioned on a table or shelf. The background of the image consists of a plain wall, suggesting that the scene may be set in an art studio or gallery. The overall atmosphere of the image conveys a sense of creativity and contemplation, as the woman appears to be deeply engaged with the artwork in front of her.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-05

A black and white photo of an old woman with glasses and a white shirt is sitting on a chair in front of a table. She is holding a piece of paper with a drawing of a man. She is looking at the drawing. Behind her is a big sculpture of a face with a blurry background. The sculpture is placed on a wooden shelf.

Created by amazon.nova-pro-v1:0 on 2025-06-05

The image is a black-and-white photograph of an elderly woman sitting at a desk. She is wearing glasses and a white shirt. Her hands are resting on two pieces of paper that appear to be artwork, possibly prints or drawings. Behind her, there is a large sculpture of a head, which seems to be made of a smooth, shiny material, possibly metal or polished stone. The sculpture has a modern, abstract style.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-06-01

Here's a description of the image:

This black and white photograph features an elderly woman seated at a table, surrounded by artwork. She is the clear focus of the image.

Here's a breakdown of the key elements:

- The Woman: She is an older woman with short, curly grey hair. She wears glasses and a simple white blouse. She's looking directly at the camera with a serious, thoughtful expression. She has her hands resting on the table in front of her.

- Artwork: On the table are two prints, black on white. One appears to be a portrait of a man, with a bold, graphic style. The other is a stylized depiction of a person in the act of some activity.

- Background: Behind the woman, there's a partial view of a smooth black sculpture of a human face and head. Above the sculpture, there's a poster with the words "Head, Form, Figure." The background is a simple, plain wall.

The overall composition suggests a portrait of an artist or someone involved in the creation of art. The lighting is soft and diffused, creating a sense of intimacy.

Created by gemini-2.0-flash on 2025-06-01

Here is a description of the image:

The image is a black and white portrait of an older African American woman, possibly an artist, seated at a table with her artwork. The woman is wearing glasses and a light-colored, loose-fitting shirt with subtle patterns. Her hair is short and curly. She is looking directly at the camera with a serious expression.

On the table in front of her, there are two distinct black and white prints. One print depicts a portrait of a man with an afro, while the other shows a figure wearing a head covering and apron.

Behind the woman, there is a dark bust on a stand and the bust also appears on a paper behind the stand. The bust has stylized features, and the stand has rungs or slots. The background is plain and light-colored. The overall composition suggests an artist in her studio, surrounded by her work.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-03

The image is a black-and-white photograph of an elderly woman who appears to be an artist. She is seated at a table with several pieces of artwork laid out in front of her. The artwork consists of black and white prints, likely woodcuts or linocuts, depicting faces and figures. The woman is wearing glasses and a light-colored blouse with short sleeves. She has short, curly hair and is looking directly at the camera with a composed expression.

Behind her, there is a large sculpture of a head, which seems to be made of a dark material. The sculpture has a stylized, abstract form with prominent facial features. The background is plain, emphasizing the focus on the woman and her artwork. The overall setting suggests that the photograph was taken in a studio or workspace. The image conveys a sense of creativity and artistic dedication.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

The image is a black-and-white photograph featuring an elderly woman in the foreground, sitting at a table and holding a piece of artwork. The artwork consists of a print or drawing depicting two figures, one with a headscarf, against a black background with intricate white details. The woman appears to be examining the artwork closely, with a pensive or thoughtful expression on her face.

In the background, there is a large, prominent sculpture of a human head with a reflective, polished surface, showcasing a stark contrast in style and subject matter compared to the artwork the woman is holding. The setting appears to be indoors, possibly a studio or gallery space, given the presence of the artwork and the sculpture. The overall mood of the photograph is reflective and artistic.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This black-and-white image features an elderly woman sitting at a table, holding a piece of artwork. The artwork is a black-and-white print depicting a woman holding a baby. The woman in the photograph has short, curly hair, wears glasses, and is dressed in a light-colored shirt. Behind her, there is a large sculpture of a person's head and neck, which appears to be glossy and reflective. The setting seems to be indoors, possibly in an art studio or gallery. The overall mood of the image is contemplative and focused.