Machine Generated Data

Tags

Amazon

created on 2023-06-26

| Tag | Confidence |
| --- | --- |
| Clothing | 100 |
| Coat | 100 |
| Furniture | 100 |
| Architecture | 100 |
| Building | 100 |
| Indoors | 100 |
| Living Room | 100 |
| Room | 100 |
| Jacket | 100 |
| Person | 99.3 |
| Potted Plant | 98.9 |
| Couch | 93.9 |
| Face | 84.6 |
| Head | 84.6 |
| Body Part | 73 |
| Finger | 73 |
| Hand | 73 |
| Fashion | 71.7 |
| Plant | 71.5 |
| Flower | 70.2 |
| Flower Arrangement | 70.2 |
| Wood | 64.5 |
| Long Sleeve | 57.6 |
| Sleeve | 57.6 |
| Accessories | 56.8 |
| Jewelry | 56.8 |
| Ring | 56.8 |
| Chair | 56.1 |
| Fireplace | 56.1 |
| Pants | 56.1 |
| Flower Bouquet | 55.9 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-06-26

Google

created on 2023-06-26

| Tag | Confidence |
| --- | --- |
| Couch | 88.4 |
| Fashion | 88.1 |
| Flash photography | 84.8 |
| Plant | 83.3 |
| Adaptation | 79.2 |
| Happy | 77.3 |
| Tints and shades | 76.9 |
| Drawer | 75.8 |
| Event | 71.3 |
| Font | 71.1 |
| Room | 67.6 |
| Living room | 66.3 |
| Sitting | 65.5 |
| Stock photography | 65.4 |
| Formal wear | 63 |
| Chest of drawers | 62.6 |
| Vintage clothing | 57.4 |
| Visual arts | 55.7 |
| Portrait photography | 54.9 |
| Photographic paper | 50.3 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 41-49 |
| Gender | Female, 100% |
| Sad | 99.7% |
| Confused | 23.9% |
| Surprised | 6.4% |
| Fear | 6.1% |
| Angry | 4.1% |
| Disgusted | 2.3% |
| Happy | 0.5% |
| Calm | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 47 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| food drinks | 64.9% |
| people portraits | 22.4% |
| interior objects | 7.7% |
| pets animals | 2.9% |
| events parties | 1.3% |

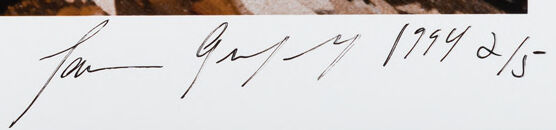

Captions

Microsoft

created on 2023-06-26

| Caption | Confidence |
| --- | --- |
| a man and a woman standing in a room | 77.1% |
| a person standing in a room | 77% |
| a man and a woman taking a selfie in a room | 53.8% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image shows a woman standing in what appears to be a damaged or cluttered living space. The woman has curly reddish-brown hair and is wearing a black leather jacket. Behind her, there are palm trees visible outside, suggesting an outdoor environment. The room is filled with debris and scattered items, creating a chaotic and unsettled atmosphere. The overall scene conveys a sense of disorder or disarray.

Created by claude-3-opus-20240229 on 2024-12-31

The image shows a woman with blonde curly hair standing in what appears to be a living room with a view of palm trees and a city skyline outside the window behind her. The living room is in disarray, with overturned furniture, papers scattered on the floor, and interior plants visible. The woman has her hand on her chest and a distressed or anxious expression on her face as she surveys the messy scene in the room.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This image appears to be taken in the aftermath of what might be the 1994 Northridge earthquake in Los Angeles, given the visible damage and palm trees in the background. The scene shows a damaged interior of what appears to be an apartment or home, with debris scattered around. Someone wearing a black leather jacket is standing among the destruction, with fallen walls, scattered belongings, and general disarray visible throughout the room. There's a couch visible on the left side, some houseplants, and what appears to be books or media scattered on the floor. Through the damaged walls, tall palm trees can be seen against a blue sky, typical of Southern California's landscape.

Text analysis

Amazon