Machine Generated Data

Tags

Amazon

created on 2023-01-12

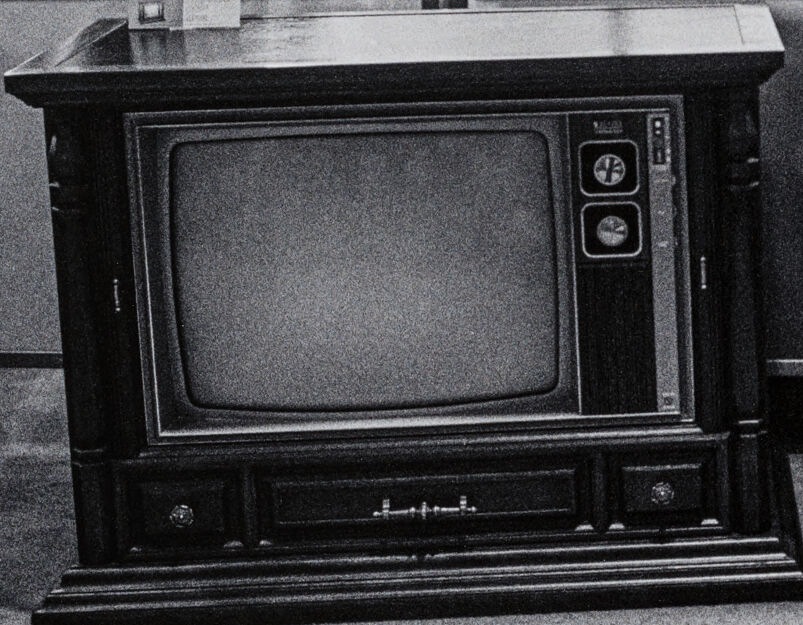

| Tag | Confidence (%) |
| --- | --- |
| Screen | 100 |
| Computer Hardware | 100 |
| Hardware | 100 |
| Electronics | 100 |
| TV | 100 |
| Person | 98.9 |
| Baby | 98.9 |
| Person | 98.8 |
| Person | 98.5 |
| Person | 96.9 |
| Person | 96.6 |
| Car | 95.7 |
| Vehicle | 95.7 |
| Transportation | 95.7 |
| Handbag | 95.3 |
| Bag | 95.3 |
| Accessories | 95.3 |
| Plant | 94.4 |
| Person | 91.4 |
| Person | 89.5 |
| Car | 88.7 |
| Shoe | 84.7 |
| Footwear | 84.7 |
| Clothing | 84.7 |
| Face | 83 |
| Head | 83 |
| Person | 82.5 |
| Plant | 79.7 |
| Person | 78.6 |
| Person | 76.6 |
| Wheel | 75.5 |
| Machine | 75.5 |
| Monitor | 75.5 |
| Bicycle | 74.2 |
| Person | 73.6 |
| Wheel | 68.9 |
| Handbag | 67.6 |
| Handbag | 64.3 |
| Monitor | 63.3 |
| Person | 61.6 |
| Entertainment Center | 56.5 |
| Indoors | 55.9 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-01-12

Google

created on 2023-01-12

| Tag | Confidence (%) |
| --- | --- |
| Black | 89.5 |
| Plant | 86.9 |
| Rectangle | 85.5 |
| Interior design | 84.9 |
| Black-and-white | 84.9 |
| Style | 83.8 |
| Television set | 83 |
| Monochrome | 76.9 |
| Monochrome photography | 76.1 |
| Houseplant | 74.8 |
| Building | 74.6 |
| Classic | 70 |
| Room | 68.5 |
| Audio equipment | 68.1 |
| Display device | 65.2 |
| Symmetry | 63.8 |
| Club chair | 63 |
| Font | 57.8 |
| Window | 56.8 |
| Multimedia | 55.6 |

Microsoft

created on 2023-01-12

| Tag | Confidence (%) |
| --- | --- |
| black and white | 93 |
| old | 83 |
| text | 81.5 |
| window | 81 |
| white | 78.9 |
| christmas tree | 70.8 |
| television | 68.2 |
| black | 68 |
| vintage | 31.3 |
| furniture | 25.4 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-33 |
| Gender | Female, 99.9% |
| Calm | 63.9% |
| Confused | 12.1% |
| Happy | 11.4% |
| Surprised | 7.6% |
| Fear | 6.4% |
| Sad | 4.3% |
| Angry | 2.2% |
| Disgusted | 1.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-33 |
| Gender | Male, 97.6% |
| Calm | 66% |
| Angry | 22.2% |
| Surprised | 7.3% |
| Fear | 7% |
| Disgusted | 3.2% |
| Sad | 2.5% |
| Confused | 2.3% |
| Happy | 0.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 18-26 |
| Gender | Female, 100% |
| Sad | 89.1% |
| Calm | 38.9% |
| Surprised | 9.7% |
| Fear | 6.6% |
| Confused | 6.4% |
| Angry | 3.9% |
| Disgusted | 1.3% |
| Happy | 0.6% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 36 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 68 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Possible |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 98.9% |
| Person | 98.8% |
| Person | 98.5% |
| Person | 96.9% |
| Person | 96.6% |
| Person | 91.4% |
| Person | 89.5% |
| Person | 82.5% |
| Person | 78.6% |
| Person | 76.6% |
| Person | 73.6% |
| Person | 61.6% |
| Baby | 98.9% |
| Car | 95.7% |
| Car | 88.7% |
| Handbag | 95.3% |
| Handbag | 67.6% |
| Handbag | 64.3% |
| Plant | 94.4% |
| Plant | 79.7% |
| Shoe | 84.7% |
| Wheel | 75.5% |
| Wheel | 68.9% |
| Monitor | 75.5% |
| Monitor | 63.3% |
| Bicycle | 74.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| cars vehicles | 99.3% |

Captions

Microsoft

created on 2023-01-12

| Caption | Confidence |
| --- | --- |
| a vintage photo of a living room | 88% |
| a vintage photo of an old building | 87.9% |
| a vintage photo of a living room next to a window | 79.7% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-01-01

The image shows a display of old television sets in what appears to be a retail or showroom setting. The televisions are displaying black and white images, likely from a historic television program or advertisement. In the background, there is a Christmas tree and decorations, suggesting this is a holiday-themed display. The overall atmosphere evokes a nostalgic, vintage feel, transporting the viewer to a bygone era of television technology and entertainment.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This black and white photograph appears to be taken in what looks like a television showroom or retail store, likely from the 1970s based on the style of the TVs. In the foreground are several console-style television sets, which were popular during that era. These are large wooden cabinet TVs, with one showing active programming while the other appears to be off. There are decorative plants placed on top of the TV sets. Through the store's window in the background, you can see two people standing outside looking in, and there's also a small evergreen tree visible. The image captures a moment in retail and consumer electronics history, showing how televisions were displayed and sold during that period.

Text analysis

Amazon