Machine Generated Data

Tags

Amazon

created on 2023-07-06

| Tag | Confidence (%) |
| --- | --- |
| Clothing | 100 |
| Hat | 99.9 |
| Cap | 99.9 |
| Adult | 99.4 |
| Male | 99.4 |
| Man | 99.4 |
| Person | 99.4 |
| Adult | 98.3 |
| Male | 98.3 |
| Man | 98.3 |
| Person | 98.3 |
| Glove | 97.7 |
| Person | 97.7 |
| Adult | 96.6 |
| Male | 96.6 |
| Man | 96.6 |
| Person | 96.6 |
| Person | 96.1 |
| Footwear | 95.9 |
| Shoe | 95.9 |
| Overcoat | 95.8 |
| Person | 95.5 |
| Glove | 94.2 |
| Shoe | 90.9 |
| Face | 89 |
| Head | 89 |
| Accessories | 85.3 |
| Bag | 85.3 |
| Handbag | 85.3 |
| Jacket | 73.3 |
| Jacket | 69.7 |
| Coat | 62.4 |
| Photography | 57.9 |
| Portrait | 57.9 |
| Beanie | 56.2 |
| Lady | 56 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-07-06

| Tag | Confidence (%) |
| --- | --- |
| statue | 56.4 |
| person | 26.7 |
| man | 23.6 |
| adult | 21.8 |
| people | 20.1 |
| male | 20 |
| city | 19.9 |
| mask | 17.1 |
| clothing | 16.4 |
| street | 14.7 |
| building | 14.3 |
| covering | 14.2 |
| portrait | 13.6 |
| dress | 13.5 |
| travel | 13.4 |
| black | 13.3 |
| face | 12.8 |
| sculpture | 12.5 |
| business | 12.1 |
| suit | 12 |
| women | 11.9 |
| urban | 11.4 |
| fashion | 11.3 |
| human | 11.2 |
| men | 11.2 |
| culture | 11.1 |
| architecture | 10.9 |
| bag | 10.7 |
| outdoor | 10.7 |
| pretty | 10.5 |
| wind instrument | 10.2 |
| model | 10.1 |
| happy | 10 |
| one | 9.7 |
| walking | 9.5 |
| smile | 9.3 |
| holding | 9.1 |
| businessman | 8.8 |
| world | 8.7 |
| tourist | 8.7 |
| musical instrument | 8.7 |
| corporate | 8.6 |
| walk | 8.6 |
| attractive | 8.4 |
| old | 8.4 |
| outdoors | 8.3 |
| costume | 8.2 |
| lady | 8.1 |
| active | 8.1 |
| hat | 8.1 |
| religion | 8.1 |
| posing | 8 |
| performer | 8 |
| job | 8 |
| disguise | 7.9 |
| standing | 7.8 |
| sax | 7.8 |
| jeans | 7.6 |
| sport | 7.6 |
| clothes | 7.5 |
| alone | 7.3 |
| landmark | 7.2 |
| looking | 7.2 |
| love | 7.1 |

Google

created on 2023-07-06

| Tag | Confidence (%) |
| --- | --- |
| Photograph | 94.2 |
| Smile | 92.6 |
| White | 92.2 |
| Black | 89.8 |
| Infrastructure | 89.2 |
| Standing | 86.4 |
| Black-and-white | 84.8 |
| Style | 84.1 |
| People | 78.7 |
| Snapshot | 74.3 |
| Monochrome | 73.3 |
| Monochrome photography | 72.7 |
| Bag | 69.2 |
| Road | 69 |
| Street | 66.7 |
| Eyewear | 64.7 |
| Sitting | 64.5 |
| Human leg | 63 |
| Academic dress | 61.4 |
| Luggage and bags | 60.1 |

Microsoft

created on 2023-07-06

| Tag | Confidence (%) |
| --- | --- |
| outdoor | 99.3 |
| person | 98.8 |
| black and white | 97.2 |
| jacket | 95.7 |
| street | 95.3 |
| clothing | 92.2 |
| coat | 88.8 |
| monochrome | 88.2 |
| human face | 74.6 |
| smile | 69.9 |
| way | 43.5 |
| sidewalk | 27.4 |

Color Analysis

Face analysis

AWS Rekognition

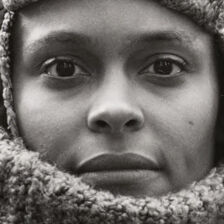

| Attribute | Value |
| --- | --- |
| Age | 24-34 |
| Gender | Female, 100% |
| Calm | 70.8% |
| Surprised | 23.2% |
| Fear | 6.7% |
| Sad | 3.2% |
| Happy | 2.1% |
| Confused | 2% |
| Angry | 1.7% |
| Disgusted | 1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 26 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 73.3% |
| streetview architecture | 17.9% |
| events parties | 3.7% |
| cars vehicles | 1.6% |
| food drinks | 1.4% |

Captions

Microsoft

created on 2023-07-06

| Caption | Confidence |
| --- | --- |
| a person sitting on a bench talking on a cell phone | 59.1% |
| a person sitting on a bench | 59% |
| a man and a woman sitting on a bench | 58.9% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-01-01

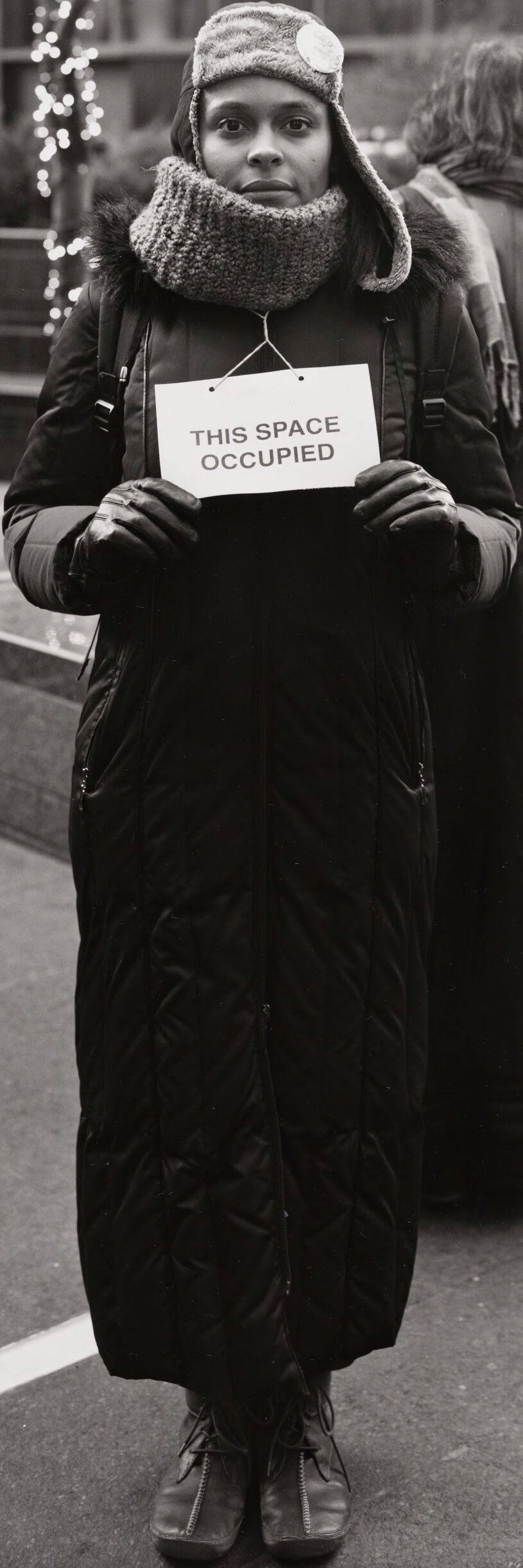

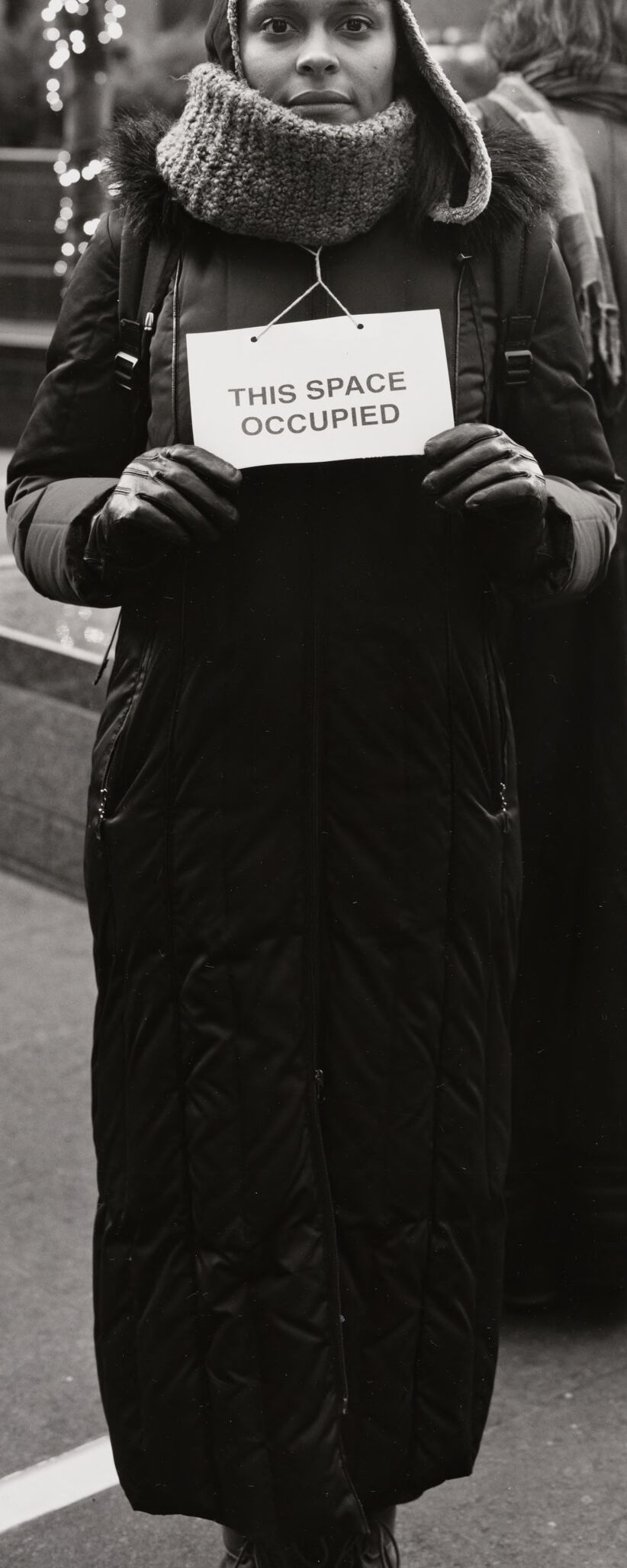

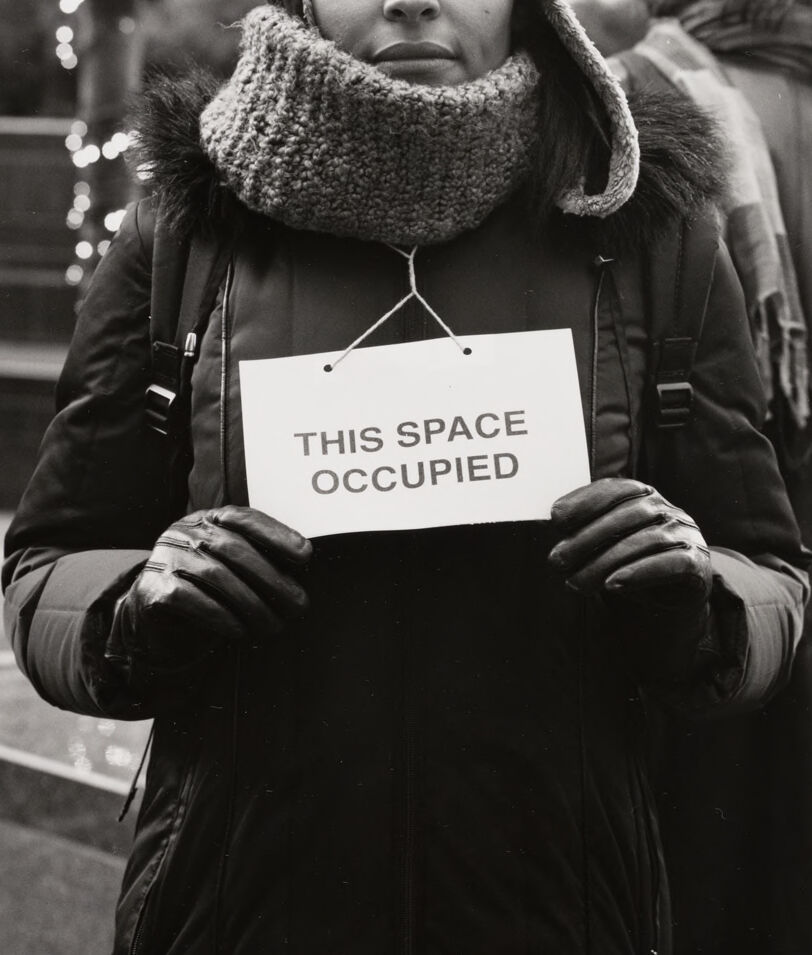

The image shows a person standing in an outdoor urban setting, holding a sign that says "THIS SPACE OCCUPIED". The person is wearing a heavy jacket, a scarf, and a hat, and appears to be in a public area. The background includes a city plaza with Christmas decorations and other people walking by. The overall image conveys a sense of protest or occupation of public space.

Created by claude-3-opus-20240229 on 2025-01-01

The black and white image shows a person standing on a city sidewalk holding a sign that reads "THIS SPACE OCCUPIED". The person is wearing a long winter coat, knit hat, scarf, and boots. They have a serious, determined expression as they face the camera directly, holding the simple handwritten sign in front of them. Other people are sitting on benches or steps in the background, with city buildings and holiday light decorations visible. The image seems to capture a moment of protest or activism, with the person boldly occupying the public space to make a statement.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This is a black and white photograph showing someone standing outdoors in winter clothing. They're wearing a long black puffy coat, a thick knit scarf, and a winter hat with ear flaps. The person is holding a sign that reads "THIS SPACE OCCUPIED." In the background, there appears to be a plaza or public space with some holiday lights visible, and other people can be seen sitting on what looks like stone benches. The image has a protest or demonstration feel to it, while also capturing the cold winter atmosphere through the subject's bundled-up appearance.

Text analysis

Amazon