Machine Generated Data

Tags

Amazon

created on 2023-07-07

| Tag | Score |
| --- | --- |
| Body Part | 100 |
| Finger | 100 |
| Hand | 100 |
| Cap | 99.9 |
| Clothing | 99.9 |
| Person | 99.6 |
| Adult | 99.6 |
| Male | 99.6 |
| Man | 99.6 |
| Photography | 99.6 |
| Face | 99.4 |
| Head | 99.4 |
| Portrait | 99.4 |
| Footwear | 98.8 |
| Shoe | 98.8 |
| Musical Instrument | 96.3 |
| Guitar | 94 |
| Guitarist | 93.4 |
| Leisure Activities | 93.4 |
| Music | 93.4 |
| Musician | 93.4 |
| Performer | 93.4 |
| Hat | 88.1 |
| Machine | 84 |
| Wheel | 84 |
| Beanie | 57.7 |
| Electrical Device | 56.7 |
| Microphone | 56.7 |
| Sitting | 55.3 |

Clarifai

created on 2023-10-13

| Tag | Score |
| --- | --- |
| people | 99.8 |
| one | 99.5 |
| portrait | 99.4 |
| man | 98.7 |
| music | 98.3 |
| guitar | 98.3 |
| adult | 98.2 |
| monochrome | 98.2 |
| stringed instrument | 95.9 |
| musician | 94.8 |
| street | 93.2 |
| two | 92.3 |
| sit | 91.3 |
| instrument | 91 |
| guitarist | 89.6 |
| indoors | 89.1 |
| wear | 86.9 |
| recreation | 86.1 |
| sitting | 85.1 |
| singer | 82.8 |

Imagga

created on 2023-07-07

Google

created on 2023-07-07

| Tag | Score |
| --- | --- |
| Flash photography | 87.6 |
| Black-and-white | 83.9 |
| Style | 83.8 |
| Monochrome | 71.3 |
| Monochrome photography | 71.1 |
| Human leg | 69.3 |
| Knee | 68.1 |
| Sitting | 67.9 |
| Elbow | 67.7 |
| Comfort | 65.2 |
| Room | 65 |
| Street | 58.2 |
| Cap | 57 |
| Bag | 55.7 |
| Luggage and bags | 55.5 |
| Motor vehicle | 53.6 |
| Chair | 51.4 |
| T-shirt | 50.5 |

Microsoft

created on 2023-07-07

| Tag | Score |
| --- | --- |
| person | 98.8 |
| sitting | 98.7 |
| clothing | 96.9 |
| man | 88.2 |
| black and white | 82 |
| music | 81.9 |
| human face | 79.8 |

Color Analysis

Face analysis

Amazon

Microsoft

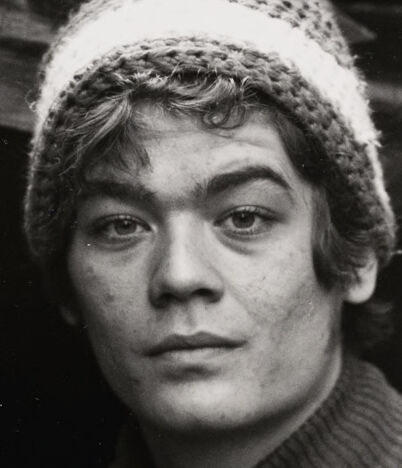

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 23-31 |
| Gender | Male, 87.8% |
| Calm | 91.7% |
| Surprised | 7.6% |
| Fear | 6.1% |
| Sad | 2.9% |
| Happy | 2.4% |
| Angry | 0.4% |
| Confused | 0.3% |
| Disgusted | 0.2% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 31 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Possible |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Score |
| --- | --- |
| people portraits | 64.3% |
| food drinks | 17.5% |
| paintings art | 8.8% |
| events parties | 8.4% |

Captions

Microsoft

created on 2023-07-07

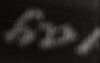

| Caption | Score |
| --- | --- |
| a man sitting on a bench | 78.9% |
| a man sitting on a table | 78.8% |
| a man sitting in a chair | 78.7% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-01-01

The image shows a young man sitting on the floor in what appears to be a cluttered room or studio. He is wearing a warm, woolen sweater and a knit hat, and is holding a musical instrument, likely a ukulele or small guitar. His expression is focused and pensive, suggesting he may be practicing or composing music. The image has a noir-like, black and white aesthetic, creating a moody and introspective atmosphere.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This is a black and white photograph that appears to be from an earlier era, showing someone sitting cross-legged while holding a musical instrument that appears to be a ukulele or small guitar. The person is wearing a knit winter hat, a ribbed sweater, and what looks like jeans. The setting appears to be indoors, possibly in a cramped or makeshift space, with some items and clutter visible in the background. The image has a casual, intimate quality typical of documentary-style photography.

Text analysis

Amazon