Machine Generated Data

Tags

Amazon

created on 2023-07-06

| Tag | Confidence |
|---|---|
| Adult | 99 |
| Male | 99 |
| Man | 99 |
| Person | 99 |
| Person | 98.9 |
| Person | 98.7 |
| Wristwatch | 98.4 |
| Accessories | 98.3 |
| Glasses | 98.3 |
| Adult | 96 |
| Male | 96 |
| Man | 96 |
| Person | 96 |
| Bag | 95.6 |
| Handbag | 95.6 |
| Clothing | 95.3 |
| Footwear | 95.3 |
| Shoe | 95.3 |
| Person | 94.9 |
| Person | 94 |
| Person | 93.8 |
| Person | 92.7 |
| Face | 86.1 |
| Head | 86.1 |
| Body Part | 85.9 |
| Finger | 85.9 |
| Hand | 85.9 |
| Shoe | 84.7 |
| Adult | 83.4 |
| Male | 83.4 |
| Man | 83.4 |
| Person | 83.4 |
| Coat | 77.8 |
| Person | 76.9 |
| Fashion | 72.1 |
| Shoe | 71.1 |
| Person | 70.5 |
| Handbag | 65.9 |
| Person | 61.7 |
| Bracelet | 61.5 |
| Jewelry | 61.5 |
| Cup | 58.3 |
| Handbag | 58.2 |
| Person | 58.1 |
| Scarf | 57.8 |
| Hat | 56.4 |
| Turban | 56.1 |
| Overcoat | 55.8 |
| Bazaar | 55.7 |
| Market | 55.7 |
| Shop | 55.7 |
| Lady | 55.3 |
| People | 55.2 |
| Text | 55.1 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-07-06

| Tag | Confidence |
|---|---|
| mask | 77.2 |
| man | 41 |
| helmet | 39.1 |
| covering | 35.9 |
| male | 32.6 |
| protection | 30.9 |
| brass | 29.8 |
| protective covering | 29.1 |
| clothing | 28.9 |
| military | 27 |
| danger | 26.4 |
| soldier | 25.4 |
| wind instrument | 22.6 |
| football helmet | 22.4 |
| war | 21.2 |
| person | 21 |
| weapon | 20.3 |
| people | 19.5 |
| gun | 19.3 |
| adult | 17.6 |
| uniform | 17.5 |
| headdress | 17.2 |
| toxic | 16.6 |
| safety | 16.6 |
| protective | 16.6 |
| gas | 16.4 |
| sport | 15.7 |
| radiation | 15.7 |
| cornet | 15.5 |
| musical instrument | 15.4 |
| bass | 15 |
| black | 14.9 |
| equipment | 14.7 |
| disaster | 14.6 |
| chemical | 13.5 |
| portrait | 12.9 |
| radioactive | 12.8 |
| army | 12.7 |
| nuclear | 12.6 |
| human | 12 |
| security | 11.9 |
| industrial | 11.8 |
| camouflage | 11.8 |
| warrior | 11.7 |
| men | 11.2 |
| training | 11.1 |
| suit | 10.8 |
| disguise | 10.3 |
| smoke | 10.2 |
| competition | 10.1 |
| outdoor | 9.9 |
| environment | 9.9 |
| battle | 9.8 |
| metal | 9.7 |
| pollution | 9.6 |
| photographer | 9.4 |
| industry | 9.4 |
| holding | 9.1 |
| dirty | 9 |
| rifle | 9 |
| fun | 9 |
| armed | 8.8 |
| consumer goods | 8.7 |
| urban | 8.7 |
| fight | 8.7 |
| power | 8.4 |
| respirator | 8.2 |
| trombone | 8.1 |
| armor | 8 |
| gas mask | 7.9 |
| bobsled | 7.8 |
| destruction | 7.8 |
| player | 7.5 |
| dark | 7.5 |
| looking | 7.2 |
| active | 7.2 |
| team | 7.2 |
| game | 7.1 |
| handsome | 7.1 |

Google

created on 2023-07-06

| Tag | Confidence |
|---|---|
| Black | 89.6 |
| Black-and-white | 84.7 |
| Style | 84 |
| Hat | 82.1 |
| Monochrome | 72.5 |
| Monochrome photography | 72.1 |
| Event | 71.9 |
| Street | 65.9 |
| History | 64.7 |
| Tradition | 61.1 |
| Costume | 59.8 |
| Road | 59.6 |
| Public event | 54.8 |
| City | 54.4 |
| Vintage clothing | 53.4 |
| Motor vehicle | 52.7 |
| Masque | 51 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

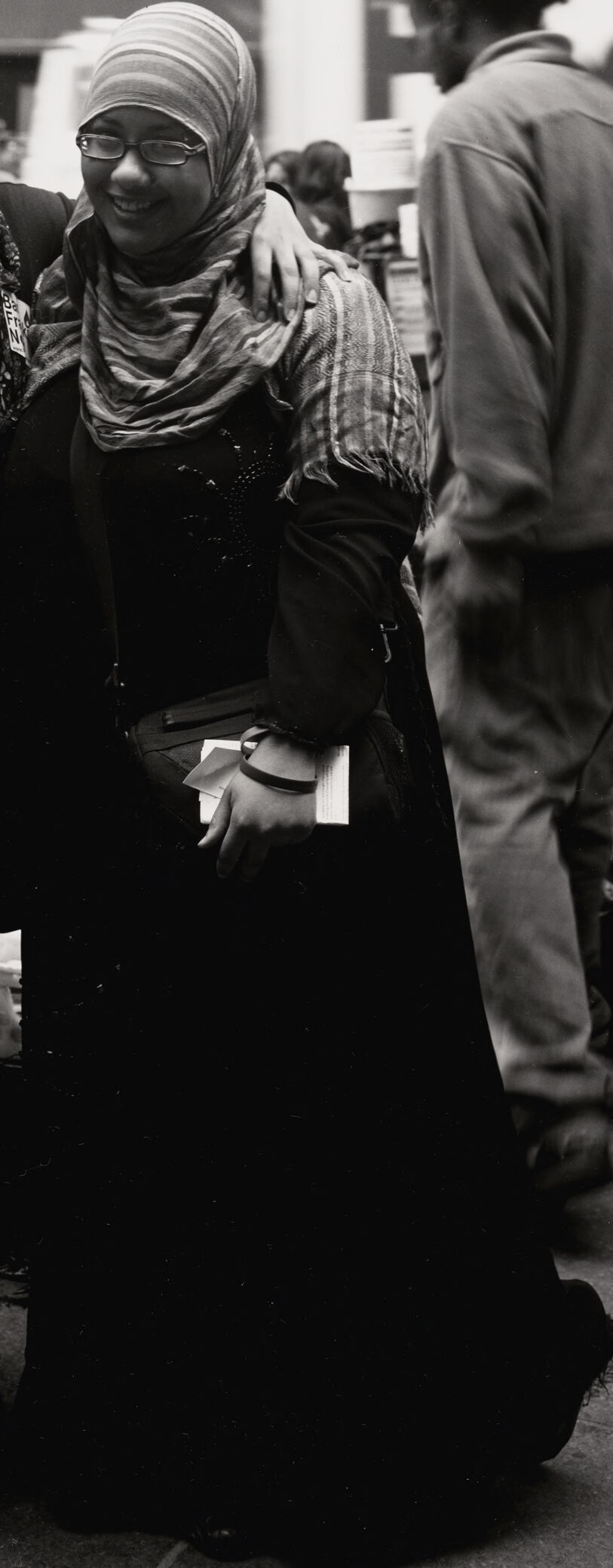

| Attribute | Value |
|---|---|
| Age | 21-29 |
| Gender | Female, 100% |
| Happy | 83.3% |
| Surprised | 7.8% |
| Fear | 7.5% |
| Calm | 3.8% |
| Confused | 2.6% |
| Sad | 2.5% |
| Disgusted | 1.4% |
| Angry | 1.2% |

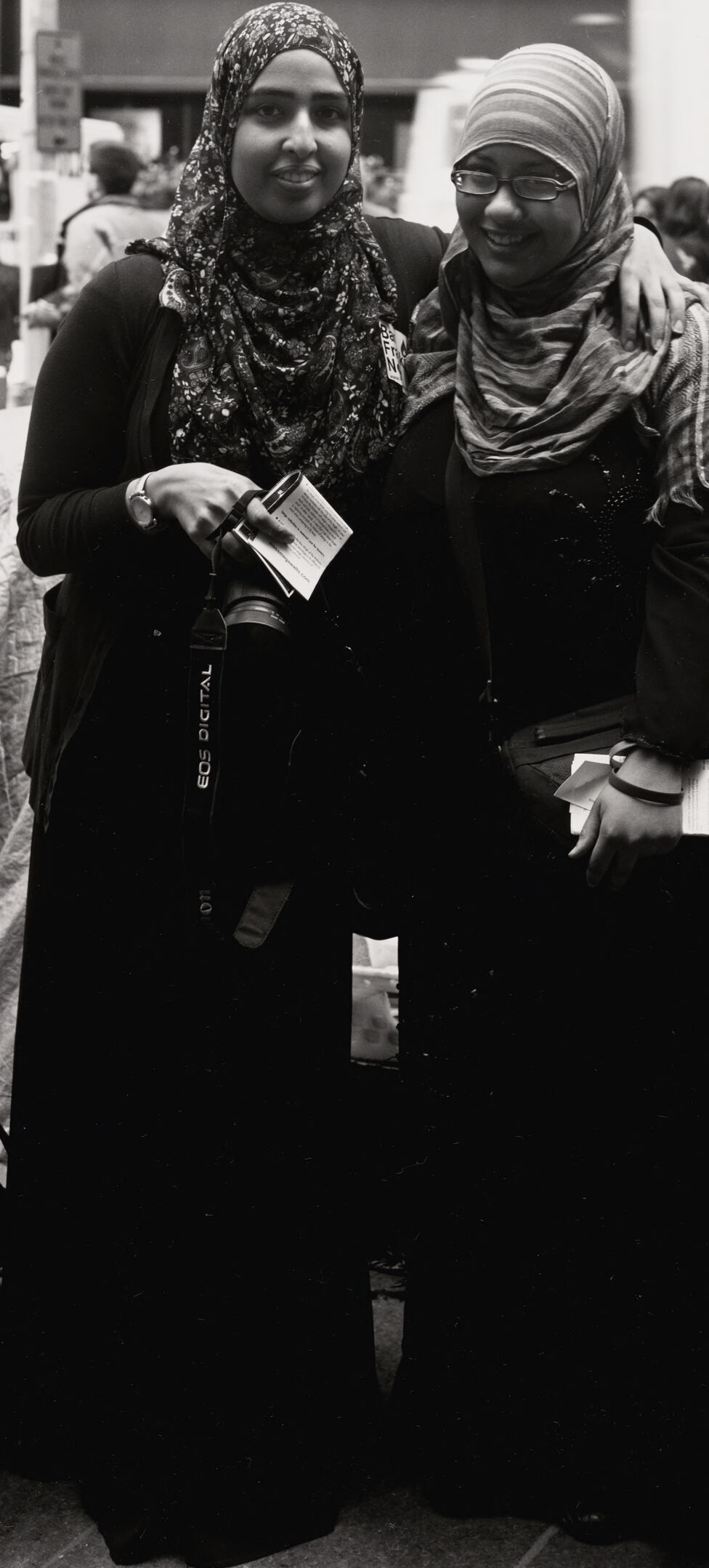

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 20-28 |
| Gender | Female, 100% |
| Happy | 99% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.3% |
| Disgusted | 0.2% |
| Angry | 0.1% |
| Calm | 0% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 22-30 |
| Gender | Female, 56.5% |
| Angry | 47.3% |
| Disgusted | 20% |
| Calm | 17.4% |
| Fear | 6.8% |
| Surprised | 6.8% |
| Happy | 5.3% |
| Confused | 3.7% |
| Sad | 3.3% |

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 16-22 |
| Gender | Male, 71.8% |
| Angry | 51.9% |
| Calm | 33.3% |
| Sad | 8.7% |
| Surprised | 6.4% |
| Fear | 6.2% |
| Confused | 1.4% |
| Happy | 1% |
| Disgusted | 0.8% |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 23 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
|---|---|
| Age | 21 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Adult | 99% |
| Adult | 96% |
| Adult | 83.4% |
| Male | 99% |
| Male | 96% |
| Male | 83.4% |
| Man | 99% |
| Man | 96% |
| Man | 83.4% |
| Person | 99% |
| Person | 98.9% |
| Person | 98.7% |
| Person | 96% |
| Person | 94.9% |
| Person | 94% |
| Person | 93.8% |
| Person | 92.7% |
| Person | 83.4% |
| Person | 76.9% |
| Person | 70.5% |
| Person | 61.7% |
| Person | 58.1% |
| Wristwatch | 98.4% |
| Glasses | 98.3% |
| Handbag | 95.6% |
| Handbag | 65.9% |
| Handbag | 58.2% |
| Shoe | 95.3% |
| Shoe | 84.7% |
| Shoe | 71.1% |
| Bracelet | 61.5% |
| Cup | 58.3% |

Categories

Imagga

| Category | Confidence |
|---|---|
| paintings art | 52.2% |
| people portraits | 25.6% |
| interior objects | 9% |
| food drinks | 3.7% |
| events parties | 2.9% |
| streetview architecture | 2.7% |
| cars vehicles | 1.9% |

Captions

Microsoft

created on 2023-07-06

| Caption | Confidence |
|---|---|
| a group of people standing next to a suitcase | 93.3% |
| a group of people standing on top of a suitcase | 89.7% |
| a group of people standing next to luggage | 89.6% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image shows two women dressed in traditional Islamic clothing, wearing black abayas and hijabs. They appear to be in a crowded public space, with other individuals visible in the background. The women are standing close together and have a friendly demeanor, suggesting they may be conversing or spending time together. The image has a black and white tone, contributing to the sense of an urban setting.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This black and white photograph shows two women wearing hijabs and long black dresses or abayas. One is wearing a patterned hijab with a floral design, while the other has on a simpler, solid-colored head covering and is wearing glasses. They appear to be at some kind of public gathering or event, as there are other people visible in the background. One of the women is holding what appears to be a camera or device. They both have warm, friendly expressions and seem to be sharing a moment of companionship. The image captures a sense of friendship and community.

Text analysis

Amazon