Machine Generated Data

Tags

Amazon

created on 2023-07-06

| Tag | Confidence |
| --- | --- |
| Person | 99.5 |
| Clothing | 99.4 |
| Footwear | 99.4 |
| Shoe | 99.4 |
| Shoe | 99.2 |
| Accessories | 98.1 |
| Bag | 98.1 |
| Photography | 97.7 |
| Person | 97.7 |
| Adult | 97.7 |
| Male | 97.7 |
| Man | 97.7 |
| Shoe | 97.4 |
| Face | 96.4 |
| Head | 96.4 |
| Portrait | 96.4 |
| Shoe | 95.9 |
| Person | 95.8 |
| Adult | 95.8 |
| Male | 95.8 |
| Man | 95.8 |
| Person | 95 |
| Adult | 95 |
| Male | 95 |
| Man | 95 |
| Handbag | 90 |
| Person | 87.3 |
| Person | 82.3 |
| Coat | 81.9 |
| Handbag | 81.6 |
| Person | 75.2 |
| People | 72 |
| Handbag | 63.4 |
| Text | 62 |
| Boot | 57.4 |
| Purse | 57.3 |
| Blackboard | 56.7 |
| Sneaker | 56.6 |
| City | 56.2 |
| Road | 56.2 |
| Street | 56.2 |
| Urban | 56.2 |
| Box | 56.1 |
| Flag | 55.4 |

Clarifai

created on 2023-10-13

Imagga

created on 2023-07-06

| Tag | Confidence |
| --- | --- |
| robe | 51.9 |
| garment | 49.9 |
| clothing | 47.6 |
| black | 36.6 |
| covering | 28.1 |
| people | 27.9 |
| person | 26.2 |
| man | 24.2 |
| adult | 21.4 |
| male | 21.3 |
| mask | 21.1 |
| fashion | 19.6 |
| portrait | 19.4 |
| dress | 19 |
| model | 18.7 |
| clothes | 16.9 |
| dark | 16.7 |
| attractive | 16.1 |
| consumer goods | 15.9 |
| face | 15.6 |
| pretty | 14.7 |
| lady | 13.8 |
| sexy | 13.6 |
| looking | 13.6 |
| style | 12.6 |
| posing | 11.5 |
| women | 11.1 |
| business | 10.9 |
| lifestyle | 10.8 |
| night | 10.7 |
| elegant | 10.3 |
| elegance | 10.1 |
| gorgeous | 10 |
| holding | 9.9 |
| hair | 9.5 |
| indoors | 8.8 |
| brunette | 8.7 |
| standing | 8.7 |
| party | 8.6 |
| adults | 8.5 |
| costume | 8.4 |
| hand | 8.4 |
| pose | 8.2 |
| wet suit | 8 |
| nightlife | 7.8 |
| happy | 7.5 |
| city | 7.5 |
| one | 7.5 |
| silhouette | 7.4 |
| 20s | 7.3 |
| make | 7.3 |
| body | 7.2 |
| love | 7.1 |
| disguise | 7 |

Google

created on 2023-07-06

| Tag | Confidence |
| --- | --- |
| Photograph | 94.2 |
| White | 92.2 |
| Black | 90.2 |
| Infrastructure | 88.7 |
| Black-and-white | 87.8 |
| Standing | 86.4 |
| Style | 84.2 |
| Grey | 84.2 |
| Headgear | 81.8 |
| Monochrome | 79.7 |
| Monochrome photography | 78.8 |
| Road | 78.2 |
| City | 78 |
| Luggage and bags | 74.7 |
| Fashion design | 74.6 |
| Snapshot | 74.3 |
| Event | 71.8 |
| Street | 70.1 |
| Pedestrian | 69.4 |
| Human leg | 68.3 |

Microsoft

created on 2023-07-06

| Tag | Confidence |
| --- | --- |
| clothing | 98.6 |
| person | 96.8 |
| black and white | 94.1 |
| street | 91.7 |
| footwear | 84.7 |
| woman | 83.2 |
| human face | 78.2 |
| monochrome | 70.3 |
| girl | 63.3 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

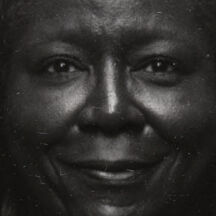

| Attribute | Value |
| --- | --- |
| Age | 49-57 |
| Gender | Female, 100% |
| Happy | 85.4% |
| Surprised | 6.7% |
| Fear | 6.7% |
| Calm | 4.7% |
| Sad | 3.1% |
| Confused | 1.8% |
| Disgusted | 1.6% |
| Angry | 1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 4-10 |
| Gender | Female, 78.6% |
| Calm | 79.2% |
| Fear | 8% |
| Happy | 7.3% |
| Surprised | 6.6% |
| Sad | 3.1% |
| Disgusted | 2.6% |
| Angry | 2% |
| Confused | 0.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 6-16 |
| Gender | Female, 99.3% |
| Calm | 56.7% |
| Sad | 28.6% |
| Fear | 7% |
| Surprised | 6.7% |
| Happy | 6% |
| Confused | 5.3% |
| Disgusted | 4.8% |
| Angry | 3.1% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 54 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Amazon

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 84.4% |
| streetview architecture | 7.2% |
| food drinks | 6.1% |
| paintings art | 1.3% |

Captions

Microsoft

created on 2023-07-06

| Caption | Confidence |
| --- | --- |
| a person is walking down the street | 86.6% |
| a person walking down a street | 85.9% |
| a couple of people that are standing in the street | 74.1% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2025-01-01

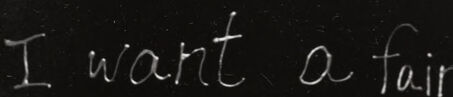

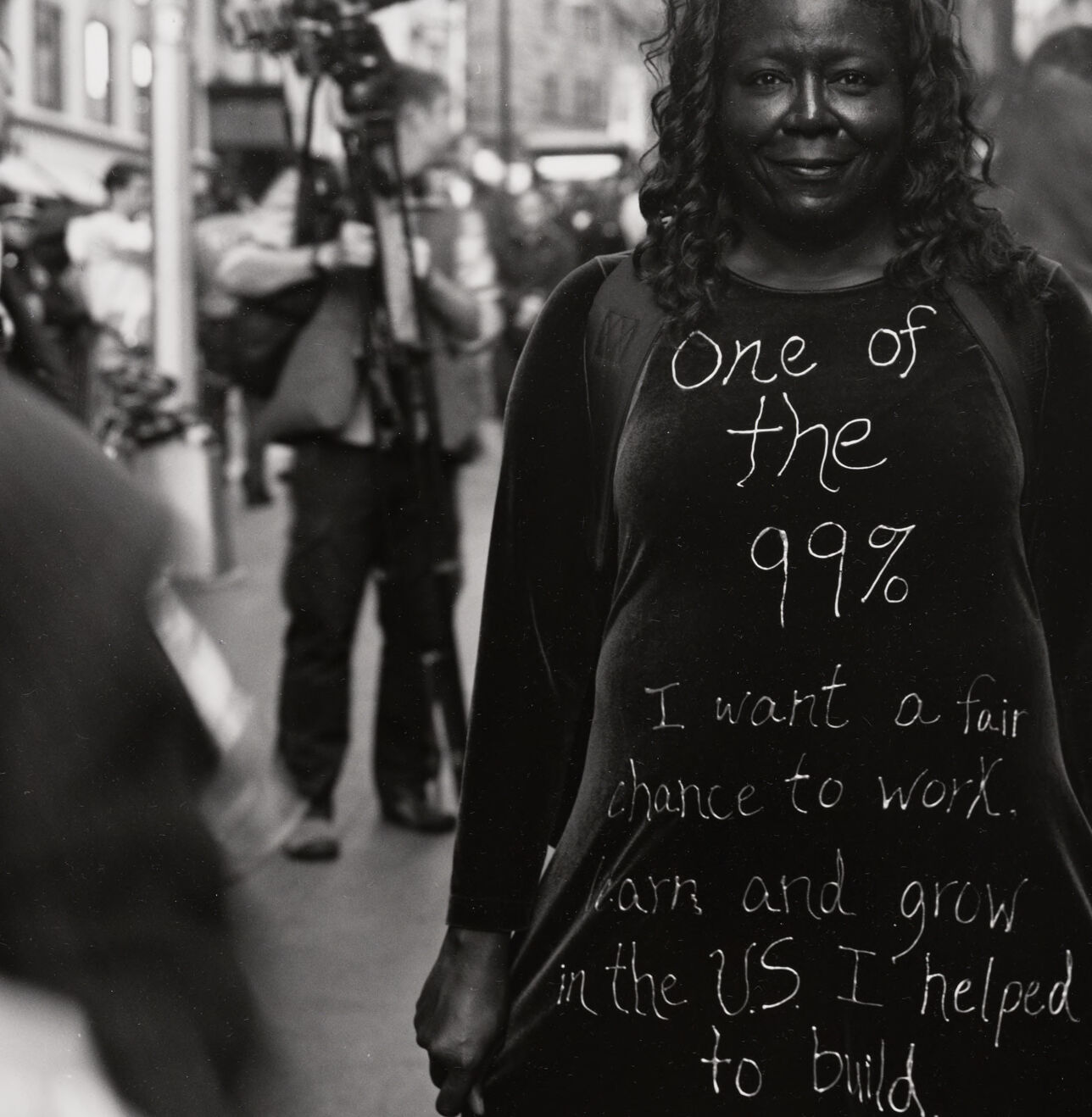

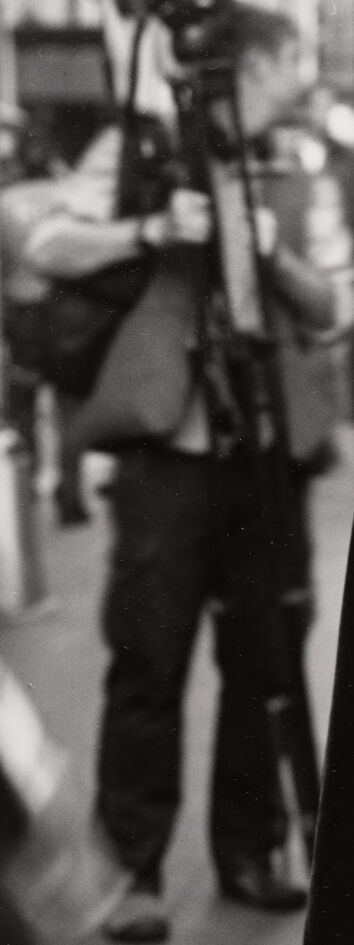

The image shows a person wearing a black shirt with white text that says "One of the 99% I want a fair chance to work, earn and grow in the US I helped to build." The person appears to be standing on a city street with flags and other people visible in the background. The person has curly hair and a warm, friendly expression on their face. The image seems to be conveying a social or political message related to inequality and the desire for equal opportunities.

Created by claude-3-opus-20240229 on 2025-01-01

The black-and-white photograph depicts a young Black woman standing on a city sidewalk, smiling at the camera. She is wearing a long-sleeved black shirt with white handwritten text on it that reads:

"One of

the

99%

I want a fair

chance to work,

learn and grow

in the US I helped

to build."

The woman has curly dark hair and appears to be wearing boots. In the background, other people can be seen walking on the sidewalk near some storefronts and parked vehicles. The photo seems to have been taken during some kind of protest or demonstration, based on the message written on the woman's shirt expressing a desire for fairness, opportunity and recognition of her contributions to the country. The "99%" reference suggests this may be related to the Occupy Wall Street movement which highlighted wealth and income inequality.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This is a powerful black and white photograph taken at what appears to be a protest or demonstration. The subject is wearing a black dress with white text written on it that reads "One of the 99%" and "I want a fair chance to work, learn and grow in the US I helped to build." They are wearing tall boots and standing on what appears to be a city street. There are other people and camera equipment visible in the background, suggesting this was taken at a media-covered event. The image appears to be from the Occupy Wall Street movement, which popularized the concept of "the 99%" versus "the 1%" in discussions about economic inequality.

Text analysis

Amazon