Machine Generated Data

Face analysis

AWS Rekognition

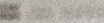

| Attribute | Value |
| --- | --- |
| Age | 1-7 |
| Gender | Female, 100% |
| Sad | 96.9% |
| Calm | 44.5% |
| Fear | 6.9% |
| Surprised | 6.4% |
| Angry | 0.7% |
| Disgusted | 0.6% |
| Confused | 0.2% |
| Happy | 0.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
| --- | --- |
| Person | 99.4% |

Categories

Imagga

Created on 2023-01-11

| Category | Confidence |
| --- | --- |
| paintings art | 58.2% |
| people portraits | 41.3% |

Captions

Microsoft

Created by unknown on 2023-01-11

| Caption | Confidence |
| --- | --- |
| a person sitting in front of a computer | 74.8% |
| a girl sitting in front of a computer | 58.9% |
| a person sitting in front of a computer | 58.8% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip-2 on 2025-06-30

two children sitting at a table with a television in the background

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a couple of children sitting at a table

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

The image depicts an indoor scene with a vintage aesthetic. The foreground features a patterned tablecloth on a table, and behind it is a cabinet displaying assorted crockery and decorative items. On top of the cabinet, there is an old-fashioned television and a radio alongside other objects, including a small doll. A curtain with a floral design is visible on the right side, suggesting a window nearby. The background includes intricate wall decor that adds texture and depth to the room.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image is a black-and-white photograph depicting an indoor setting with a vintage aesthetic. In the background, there is a cabinet with glass doors that displays various dishware and decorative items, including cups, saucers, and figurines. Atop the cabinet sits an old-fashioned television with knobs, placed on a crocheted doily. To the side of the television, there is a radio and a small doll with outstretched arms. The foreground shows a checkered tablecloth draped over a table, and there are two children sitting at the table. One of the children is wearing a patterned shirt and rests their hand on the table. The setting suggests a cozy, domestic atmosphere.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-27

This appears to be a vintage black and white photograph taken in what looks like a home dining area in the 1960s or 1970s. In the background, there's a china cabinet displaying dishes and decorative items, with a vintage television set and a doll placed on top. The TV appears to be an old box-style model with an antenna. The foreground shows a table with a checkered tablecloth. The scene has a distinctly nostalgic, mid-century domestic feel, complete with lace curtains visible at the edge of the frame. The composition captures a moment of everyday life from this era, with the mix of modern technology (the TV) and traditional home furnishings creating an interesting snapshot of the period.

Created by claude-3-haiku-20240307 on 2025-01-01

The image shows a black and white photograph of two young children sitting at a table in what appears to be a home setting. In the background, there is a television set and various household items and toys. The children seem to be engaged in some activity, with one child thoughtfully resting their chin on their hand. The image has a nostalgic and evocative quality, capturing a moment in time from what seems to be a family home.

Created by claude-3-opus-20240229 on 2025-01-01

The black and white photograph shows two young children sitting at a table in what appears to be a living room. The table is covered with a checkered tablecloth and there are various items on shelves behind the TV set, including decorative plates and figurines.

The older child, a girl, is looking straight at the camera with a thoughtful expression. The younger child, a boy, has his chin resting on his hand and seems to be more distracted, looking off to the side.

Overall, the image has a nostalgic, vintage feel capturing a candid moment from the children's daily life at home in an earlier era, based on details like the older television set and home decor visible.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This is a black and white photograph that appears to be from the 1970s or 1980s, showing a domestic interior scene. In the background, there's a china cabinet displaying dishes and decorative items, with a vintage television set and what appears to be a radio sitting on top. There's also a doll displayed on top of the TV. The foreground shows a table with a checkered tablecloth. The setting appears to be a typical middle-class home of that era, complete with lace curtains visible at the side of the frame. The decor and furnishings reflect the domestic style common in that time period.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-09

The image is a black-and-white photograph of two children sitting at a table in a living room.

The child on the left has dark, curly hair and is wearing a short-sleeved shirt with a pattern. The child on the right has short, dark hair and is wearing a short-sleeved shirt with a pattern. They are both sitting at a table with a checkered tablecloth.

In the background, there is a television on a cabinet, and a doll is standing on top of the cabinet. There are also various dishes and other items on the shelves below the television.

The overall atmosphere of the image suggests that it was taken in the 1950s or 1960s, based on the style of clothing and the furniture. The image appears to be a candid shot of the children, possibly taken by a family member or friend.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-09

This image is a black-and-white photograph of two children sitting at a table in front of a television. The child on the left has dark, curly hair and is wearing a shirt with a pattern of various objects, including what appears to be a camera. The child on the right has short, dark hair and is wearing a shirt with stripes.

The table they are sitting at is covered with a checkered tablecloth. Behind them, there is a television on top of a cabinet, with a doll standing next to it. The background of the image shows a wall with a window, and a shelf with various objects on it.

The overall atmosphere of the image suggests that it was taken in the 1950s or 1960s, based on the style of the clothing and the technology depicted. The image appears to be a candid shot of two children enjoying some time together, perhaps watching TV or playing games.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-06-09

The image is a black-and-white photograph of two children sitting at a table in a room. The room is well-lit, and the children are the main focus of the image. They are sitting at a table with a checkered tablecloth, and they appear to be eating something. The boy on the left is holding a piece of food in his mouth, while the boy on the right is holding a bag of chips. Behind them, there is a cabinet with various items on top, including a television, a doll, and other objects. The image has a nostalgic and old-fashioned feel to it.

Created by amazon.nova-pro-v1:0 on 2025-06-09

The black-and-white photo shows two kids sitting at a table in a room. The boy on the left has curly hair and is eating something. He is wearing a shirt with a pattern. Next to him is a boy with short hair, who is also eating something. In front of them is a table with a checkered tablecloth. Behind them is a cabinet with a TV on top and a shelf with cups and plates.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-04

Here's a description of the image:

The black-and-white photograph captures a domestic scene, likely from a past era. Two young boys are the central focus, positioned at a table covered with a checkered tablecloth. The boy on the left has curly hair and is looking directly at the camera, with his finger up to his mouth. The boy on the right is partially obscured, peeking from behind a curtain, also with his hand near his mouth.

Behind them, a wooden cabinet dominates the background. On top of the cabinet sits an old television set, with a doll standing next to it. A small radio sits next to the doll. The cabinet below is glass-fronted, displaying various objects, including dishware, figurines, and other decorative items. The backdrop is a patterned wall covering. A patterned curtain frames the right side of the scene. The overall impression is one of a family scene from a bygone era.

Created by gemini-2.0-flash on 2025-05-04

This black and white photograph depicts two young children at a table in what appears to be a home interior. The child on the left has curly hair and wears a patterned shirt with what might be suspenders. They are looking directly at the camera with their fingers to their mouth, seemingly in a thoughtful or contemplative manner. The other child, who has straight hair, is leaning against a curtain to the right, also with fingers near their mouth, perhaps eating something.

The table in front of them is covered with a checkered tablecloth. In the background, there's a wooden cabinet topped with a vintage television covered with a cloth. A doll and a radio are also on top of the cabinet. The cabinet has glass doors, behind which are visible various dishes and decorative items.

The setting looks like a modest home, possibly dating back to the mid-20th century, based on the style of the furniture and television. The image's grainy texture and composition contribute to a nostalgic or documentary feel.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-06-01

This black-and-white photograph depicts a domestic scene with two children sitting at a table. The child on the left, with curly hair, is seated at the table with their hand resting on their chin, appearing thoughtful or contemplative. The child on the right is leaning on the table, partially obscured by a curtain, and looking towards the camera with a more relaxed posture.

The table is covered with a checkered tablecloth, and there are some items on it, including what appears to be a small toy or figurine. Behind the children, there is a wooden cabinet with glass doors, displaying various dishes and decorative items. On top of the cabinet, there is a television set with a cloth draped over it, a small doll, and a plant. The room has a vintage feel, suggesting the photo might be from an earlier era. The background includes a window with lace curtains, adding to the homely atmosphere of the scene.

Qwen

Created by Qwen/Qwen2.5-VL-7B-Instruct on 2025-07-14

The image is a black-and-white photograph that appears to be a candid, domestic scene. It captures two children sitting at a table with a checkered tablecloth. The child on the left has curly hair and is holding their hand to their mouth, while the child on the right is leaning on the table with their hand resting on their cheek. The background features a piece of furniture with glass doors displaying decorative items such as plates, bowls, and small figurines. On top of the furniture sits a vintage television set, a doll, and other small objects. The room is partially framed by a curtain with a floral pattern, and there is a window with a view of a bridge in the distance. The overall atmosphere of the photograph suggests a quiet, domestic moment, possibly from the mid-20th century.

Created by Qwen/Qwen2.5-VL-72B-Instruct on 2025-07-14

This black-and-white photograph captures a domestic scene featuring two children sitting at a table covered with a checkered tablecloth. The child on the left has curly hair and appears to be eating something with their hand, while the child on the right is partially hidden behind a curtain and is also eating.

In the background, there is a cabinet with a glass door displaying various items, including dishes and decorative objects. On top of the cabinet, there is an old-fashioned television set with a cloth draped over it, and a doll standing next to the TV. The setting suggests a cozy, lived-in home environment, with a mix of everyday items and personal touches. The overall mood of the image is nostalgic and intimate.