Machine Generated Data

Tags

Amazon

created on 2022-08-13

| Tag | Confidence (%) |
|---|---|
| Person | 99.6 |
| Human | 99.6 |
| Interior Design | 99.6 |
| Indoors | 99.6 |
| Person | 99.3 |
| Person | 99.3 |
| Person | 99.2 |
| Art | 89.5 |
| Metropolis | 78.3 |
| Building | 78.3 |
| Urban | 78.3 |
| City | 78.3 |
| Town | 78.3 |
| Screen | 64.8 |
| Electronics | 64.8 |
| Art Gallery | 61.4 |
| Photography | 60.3 |
| Photo | 60.3 |
| Home Decor | 57.9 |
| Monitor | 57.2 |
| Display | 57.2 |
| Collage | 56 |
| Poster | 56 |
| Advertisement | 56 |
| Flooring | 55.1 |

Clarifai

created on 2023-10-31

| Tag | Confidence (%) |
|---|---|
| indoors | 98.7 |
| family | 97 |
| people | 95.8 |
| room | 95.3 |
| exhibition | 94.6 |
| television | 93.5 |
| man | 92 |
| art | 91.5 |
| museum | 90.7 |
| painting | 89.8 |
| technology | 89 |
| mirror | 85.4 |
| wall | 85.2 |
| contemporary | 85.2 |
| light | 83.7 |
| woman | 82.7 |
| furniture | 80.7 |
| bedroom | 80.2 |
| interior design | 80 |
| window | 79.9 |

Imagga

created on 2022-08-13

Google

created on 2022-08-13

| Tag | Confidence (%) |
|---|---|
| Art | 82.8 |
| Rectangle | 76.6 |
| Space | 74.4 |
| Event | 73.7 |
| Electronic device | 73.6 |
| Automotive design | 73.5 |
| Display device | 68.9 |
| Visual arts | 68.3 |
| Font | 65.2 |
| Multimedia | 64.4 |
| Projector accessory | 64 |
| Room | 63.9 |
| Exhibition | 60.8 |
| Painting | 60 |
| Flooring | 59.4 |
| Tourist attraction | 54.5 |
| Modern art | 54 |
| Graphics | 53.5 |
| Art exhibition | 53.3 |
| Projection screen | 52.9 |

Microsoft

created on 2022-08-13

| Tag | Confidence (%) |
|---|---|
| wall | 97.3 |
| computer | 95.5 |
| person | 93.1 |
| television | 93 |
| art | 90 |
| human face | 85.5 |
| screenshot | 81.6 |
| gallery | 72.6 |
| laptop | 71.7 |
| screen | 71.5 |
| man | 69.6 |
| text | 66.4 |
| design | 66.3 |
| room | 65.5 |
| clothing | 64.7 |

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 23-31 |
| Gender | Female, 100% |
| Calm | 100% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0% |
| Angry | 0% |
| Happy | 0% |
| Disgusted | 0% |

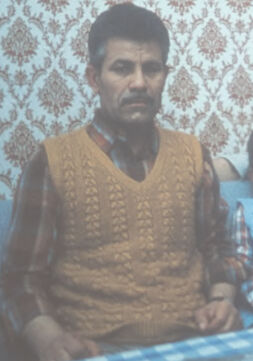

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 48-54 |
| Gender | Male, 99.9% |
| Calm | 66.4% |
| Sad | 47.5% |
| Surprised | 6.8% |
| Fear | 6.4% |
| Confused | 1.9% |
| Disgusted | 1.8% |
| Angry | 1.2% |
| Happy | 0.5% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 45-51 |
| Gender | Male, 100% |
| Calm | 98.7% |
| Surprised | 6.4% |
| Fear | 5.9% |
| Sad | 2.2% |
| Confused | 0.6% |
| Angry | 0.1% |
| Happy | 0.1% |
| Disgusted | 0.1% |

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 2-10 |
| Gender | Female, 97.9% |
| Calm | 97.1% |
| Surprised | 6.4% |
| Fear | 6.2% |
| Sad | 2.3% |
| Confused | 0.7% |
| Happy | 0.2% |
| Disgusted | 0.1% |
| Angry | 0.1% |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 42 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 42 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 28 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Estimate |
|---|---|
| Age | 7 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
|---|---|
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 97.8% |
| food drinks | 1.6% |

Captions

Microsoft

created on 2022-08-13

| Caption | Confidence |
|---|---|
| a flat screen television | 55.2% |
| a flat screen television on the wall | 55.1% |
| a flat screen tv sitting on top of a television | 37.8% |