Azure OpenAI

Created by gpt-4 on 2024-11-17

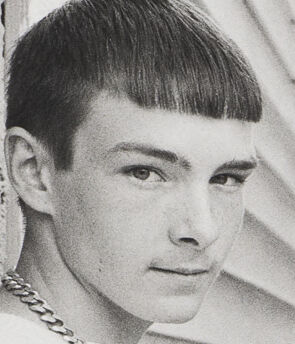

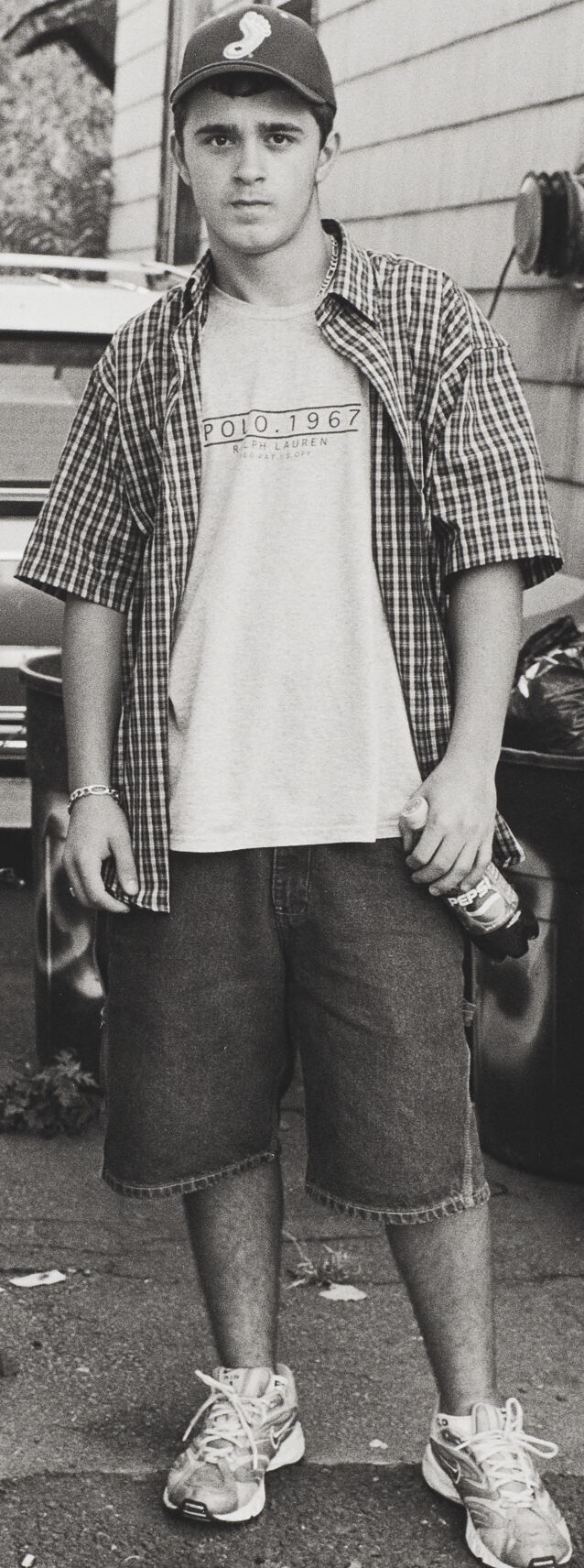

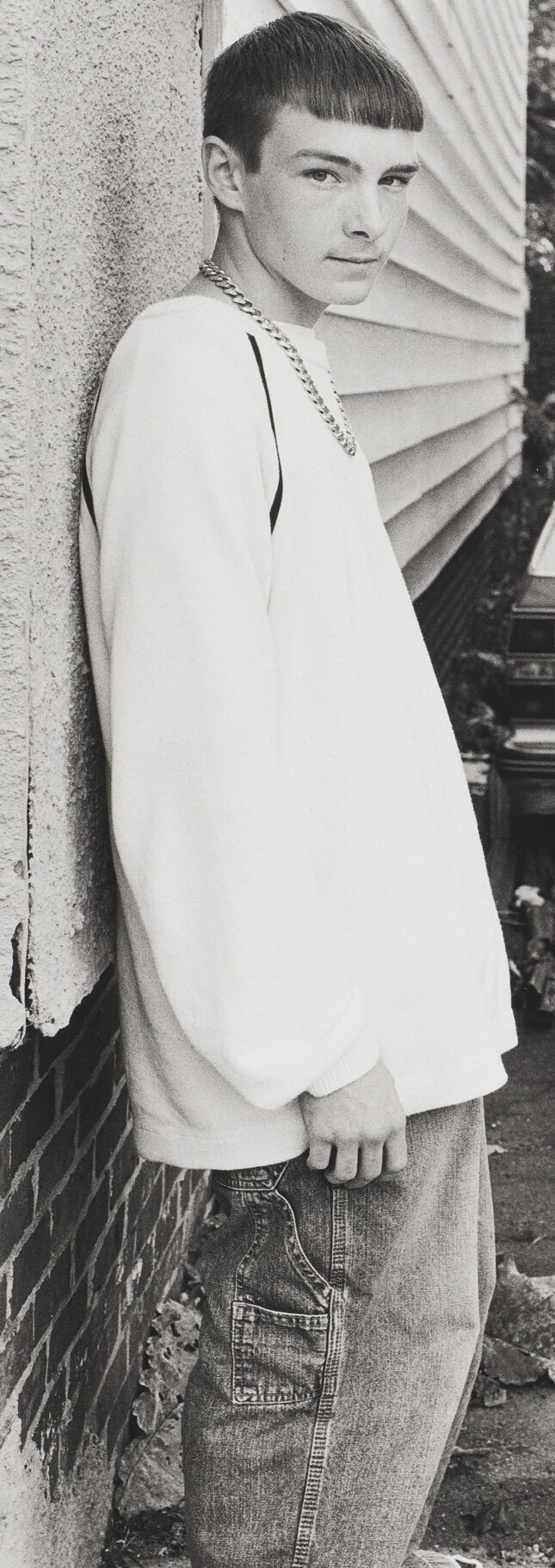

The image shows two individuals standing in an alleyway next to an old car. The person on the left is wearing a long-sleeve white t-shirt and loose denim jeans, partially leaning against a pale wall near the rear of the car. The other individual is wearing a checkered button-up shirt over a white t-shirt, paired with long cargo shorts and sneakers. Between them, there is a large black garbage bin with faded graffiti and a smaller plastic tub on the ground. The old car, trash bins, and outer wall of the house give the setting an urban, possibly neglected appearance. The photograph is in black and white, which adds to the gritty feel of the scene.

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-11-17

The image shows two young men standing in an outdoor setting. One man is leaning against the side of a wooden building, wearing a white shirt and jeans. The other man is wearing a plaid shirt, shorts, and a baseball cap. There is a car parked near them and some other items such as trash cans and what appears to be a box or container on the ground. The overall scene has a casual, everyday feel to it.

Meta Llama

Created on 2024-12-01

The image depicts two young men standing in an alleyway, with a car parked behind them. The man on the left has short dark hair and is wearing a white sweatshirt, blue jeans, and a silver chain. He is leaning against a wall. The man on the right has short dark hair and is wearing a baseball cap, a plaid shirt over a white t-shirt, denim shorts, and sneakers. He is holding a can in his hand.

In the background, there is a car parked behind the men, and a trash can with graffiti on it. The alleyway is narrow and cluttered, with debris scattered on the ground. The walls of the buildings are made of wood or brick, and there are trees visible in the distance. The overall atmosphere of the image suggests that it was taken in an urban or suburban setting, possibly in the United States.