Machine Generated Data

Face analysis

Amazon

Imagga

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 23-37 |
| Gender | Female, 52.3% |
| Fear | 50.1% |
| Sad | 46.1% |
| Confused | 45.7% |
| Surprised | 45.2% |
| Disgusted | 45.2% |
| Calm | 47.5% |
| Happy | 45% |
| Angry | 45.2% |

Feature analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 38.5% |
| food drinks | 28.7% |
| events parties | 8.6% |
| paintings art | 8.4% |
| interior objects | 6.5% |
| pets animals | 3.6% |
| cars vehicles | 3.1% |
| text visuals | 1.1% |

Captions

Microsoft

Created by unknown on 2020-03-11

| Caption | Confidence |
|---|---|
| a group of people in a room | 93.8% |
| a group of people riding on the back of a white building | 73.1% |
| a group of people sitting in a room | 73% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
|---|---|
| a photograph of a group of people sitting on a couch in a room | -100% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

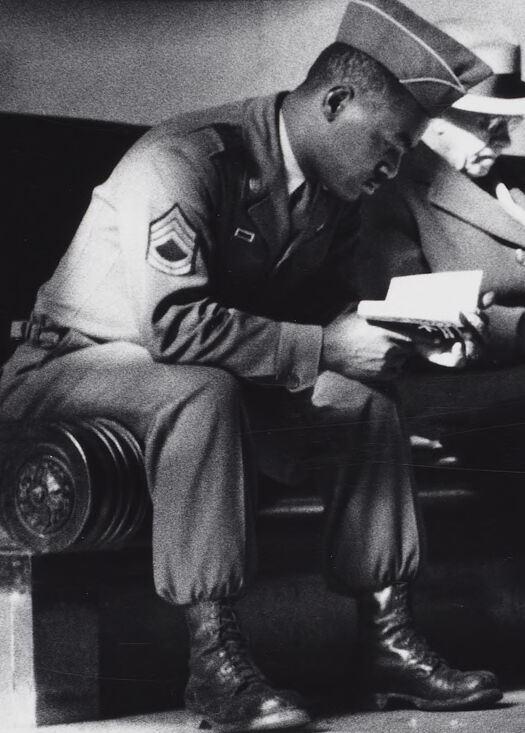

The black and white image depicts a group of people seated on benches in what appears to be a waiting area, possibly a train station or a public building. The people are dressed in mid-20th century attire, including suits and hats, and one man is dressed in a military uniform. The room features tall, stone walls and a large, empty picture frame or notice board on the wall. There are strong patterns of light and shadow cast on the floor, suggesting sunlight filtering through large windows. The atmosphere appears calm and quiet, with individuals engaged in reading or waiting.

Created by gpt-4 on 2024-11-13

The image captures a scene with a group of individuals seated on benches in what appears to be a large, well-lit interior space, possibly a public building or a train station, given the architectural style and sense of transit. The individuals are dressed in what looks like mid-20th-century attire, including suits, military-style uniforms, and hats typical of the era. Many of them are engaged in reading, with some holding newspapers. The room has high ceilings, and sunlight streams through windows or skylights, creating a pattern of strong shadows that contrasts with lit areas, adding a sense of depth and texture to the photograph. The setting evokes a sense of quiet, perhaps a moment captured in a busy day where people have paused to read and wait.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This is a powerful black and white photograph that appears to be from the mid-20th century. The image shows multiple people seated on a long bench in what appears to be a public building or waiting area. The most striking visual element is the dramatic lighting, with strong geometric shadows cast across the floor in a repeating pattern. At the front of the bench is a serviceman in military uniform wearing a cap. The others are dressed in civilian attire including hats typical of the era. Everyone appears to be reading newspapers or documents. The composition is particularly notable for its use of light and shadow, creating a strong graphic quality that draws the eye across the frame. The architectural setting appears formal, with what looks like classical details visible in the background.

Created by claude-3-haiku-20240307 on 2024-11-14

The image appears to be a black and white photograph of an indoor public space. In the foreground, several people are seated on chairs, some reading or resting, while others are walking by in the background. The setting seems to be a large, formal hall or lobby, with architectural details such as arched windows and columns visible. The lighting casts striking shadows on the floor, creating a dramatic and atmospheric scene.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-25

The image depicts a black-and-white photograph of a group of men sitting on benches in a large room, likely a train station or similar public space. The men are dressed in various attire, including suits, hats, and military uniforms, suggesting a diverse range of individuals.

In the foreground, the men sit on dark-colored benches, some reading newspapers or books, while others appear to be engaged in conversation. The atmosphere seems relaxed, with the warm sunlight streaming through the windows and casting shadows on the floor.

The background of the image features a large wall with a prominent square shape, possibly a window or a piece of artwork. The overall setting appears to be a public area, possibly a waiting room or lobby, where people gather to socialize or wait for transportation.

The image conveys a sense of community and everyday life, capturing a moment in time when people came together to share space and engage in quiet activities. The use of black and white photography adds a nostalgic quality to the image, evoking a sense of history and tradition.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-21

The image depicts a black-and-white photograph of a large room with a high ceiling, featuring a group of men sitting on benches. The men are dressed in various attire, including suits, hats, and military uniforms. They appear to be engaged in reading newspapers or documents, with some holding cups or plates.

The room is well-lit, with sunlight streaming through the windows and casting shadows on the floor. The walls are made of stone or brick, and the floor is composed of polished stone or tile. In the background, other people can be seen sitting or standing, adding to the sense of activity and community in the space.

Overall, the image conveys a sense of quiet contemplation and social interaction, with the men gathered together in a shared space. The use of black and white photography adds a timeless quality to the image, evoking a sense of nostalgia and historical significance.