Machine Generated Data

Tags

Amazon

created on 2019-07-20

| Tag | Confidence (%) |
| --- | --- |
| Human | 99.7 |
| Person | 99.7 |
| Person | 99.7 |
| Person | 99.6 |
| Person | 99.6 |
| Person | 99.1 |
| Person | 99 |
| Person | 99 |
| Person | 99 |
| Person | 98.1 |
| Furniture | 96.9 |
| Chair | 96.9 |
| Person | 94.1 |
| School | 94 |
| Room | 93.4 |
| Indoors | 93.4 |
| Classroom | 93 |
| Person | 92.9 |
| Chair | 91.6 |
| Person | 90.5 |
| Apparel | 86.7 |
| Clothing | 86.7 |
| Table | 75.9 |
| People | 67.9 |
| Kindergarten | 65.9 |
| Face | 62.8 |
| Kid | 60.9 |
| Child | 60.9 |
| Workshop | 58.2 |
| Chair | 55.8 |
| Person | 52.2 |
| Person | 46 |

Clarifai

created on 2019-07-20

Imagga

created on 2019-07-20

Google

created on 2019-07-20

| Tag | Confidence (%) |
| --- | --- |
| Social group | 91.1 |
| Room | 78 |
| Event | 69.4 |
| Class | 67.1 |
| Family | 63 |
| Black-and-white | 56.4 |
| Monochrome | 54.4 |
| House | 53.7 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

| Face | Age | Gender | Calm | Happy | Sad | Angry | Confused | Disgusted | Surprised |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 29-45 | Male, 100% | 47.6% | 15.6% | 5.9% | 11.3% | 10.1% | 6.7% | 2.8% |
| 2 | 4-9 | Female, 97% | 5.3% | 2.9% | 71.5% | 15.2% | 2.3% | 1.1% | 1.8% |
| 3 | 4-9 | Male, 99.3% | 90.6% | 0.3% | 3.2% | 1.6% | 3.7% | 0.3% | 0.4% |
| 4 | 9-14 | Male, 98.4% | 97.7% | 0.3% | 0.6% | 0.3% | 0.6% | 0.1% | 0.4% |
| 5 | 4-9 | Female, 76% | 3.9% | 1.7% | 85.7% | 6.3% | 1% | 0.6% | 0.8% |
| 6 | 4-7 | Female, 96% | 88.6% | 0.8% | 3.2% | 2% | 2.3% | 0.6% | 2.5% |
| 7 | 12-22 | Male, 99.6% | 18.4% | 65.9% | 4.3% | 3.4% | 4.1% | 2.8% | 1.2% |
| 8 | 15-25 | Male, 96.6% | 72.2% | 2.5% | 5.7% | 8.3% | 5.9% | 2.7% | 2.7% |
| 9 | 6-13 | Female, 64.4% | 93.5% | 0.2% | 4.7% | 0.4% | 0.7% | 0.2% | 0.2% |
| 10 | 20-38 | Female, 57.5% | 87.3% | 1.7% | 2.5% | 2.4% | 2.4% | 2.9% | 0.8% |
| 11 | 11-18 | Male, 94.9% | 58.3% | 1.7% | 11.9% | 13.5% | 7.1% | 5.8% | 1.7% |
| 12 | 35-52 | Female, 96.5% | 1.5% | 94.9% | 0.6% | 0.6% | 0.7% | 1.4% | 0.4% |

Microsoft Cognitive Services

| Face | Age | Gender |
| --- | --- | --- |
| 1 | 33 | Male |
| 2 | 37 | Female |
| 3 | 25 | Male |
| 4 | 27 | Male |
| 5 | 24 | Male |
| 6 | 9 | Female |
| 7 | 4 | Male |
| 8 | 9 | Female |
| 9 | 12 | Male |
| 10 | 33 | Female |
| 11 | 5 | Female |
| 12 | 1 | Male |

Google Vision

| Face | Surprise | Anger | Sorrow | Joy | Headwear | Blurred |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 2 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 3 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 4 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 5 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 6 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 7 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 8 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 9 | Very unlikely | Very unlikely | Very unlikely | Very likely | Very unlikely | Very unlikely |
| 10 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 11 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |
| 12 | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely | Very unlikely |

Feature analysis

Categories

Imagga

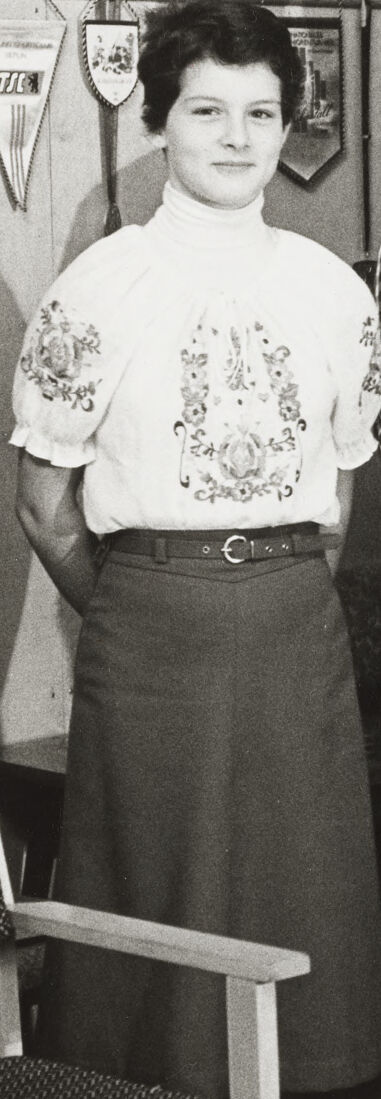

| Category | Confidence |
| --- | --- |
| people portraits | 100% |

Captions

Microsoft

created on 2019-07-20

| Caption | Confidence |
| --- | --- |
| Billy Hughes et al. sitting at a table | 97.2% |
| Billy Hughes et al. sitting at a table posing for the camera | 97.1% |
| Billy Hughes et al. sitting around a table | 97% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

The black and white photograph shows a group of people in a home or domestic setting. The scene features a large family or group standing together in front of a wall with wooden cabinetry. There are several chairs and a table in the foreground, with a tablecloth and a bowl on the table. The people are dressed in various attire typical of mid to late 20th century fashion, including sweaters, collared shirts, and patterned clothing. The room's decor includes floral wallpaper, hanging pennants, and ceramic or glassware displayed in a cabinet. The overall setting suggests a family gathering or an occasion marked by a large group photo.

Created by gpt-4 on 2024-11-13

The image is a black and white photograph that appears to be taken in the interior of a home, possibly dating back a few decades, given the style of clothing and decor. The space is furnished with wooden cabinets, a table with a striped tablecloth, chairs, and decorative items like pennants, dishes in a glass-fronted cabinet, and wall decorations that include posters or pictures. The room is carpeted, and on the walls, there is patterned wallpaper. Seven individuals are visible standing together as if posing for a family portrait. They are dressed in an assortment of clothing that includes patterned and striped tops, dresses, and skirts, suggesting a variety of ages among them. The attire looks quite traditional, further hinting at the photo being from an earlier era. A bowl or dish rests on the table in the forefront, and overall, the scene resembles a mid-century domestic setting.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This appears to be a black and white family photograph from what looks like the 1970s or early 1980s, taken in what appears to be a home dining area or kitchen. The room has wood cabinets along the wall, floral wallpaper, and some pennants or flags displayed. In the image are 11 people of varying ages, including several children and adults, arranged in a group pose. They're dressed in typical casual 1970s/80s style clothing - sweaters, plaid shirts, and patterned tops. There's a dining table with chairs visible in the foreground. The interior design and furniture style is characteristic of the era, with modernist-style wooden cabinets and textured upholstery on the chairs.

Created by claude-3-haiku-20240307 on 2024-11-13

The image appears to be a family portrait. It shows a group of people, mostly adults and children, standing and sitting in what looks like a school or community center setting. The individuals are dressed in clothing typical of the mid-20th century, with the adults wearing casual outfits like plaid shirts and the children wearing sweaters and other casual attire. The room has wooden lockers or cabinets along the walls, and there are some decorative items like posters or artwork hanging on the walls. The people in the image seem to be a family or group, possibly posed for a formal portrait or photograph.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-25

The image depicts a black-and-white photograph of a large family gathered in a kitchen or dining room. The family consists of 12 individuals, including adults and children, who are standing together in front of a wall featuring wooden cabinets and a refrigerator adorned with various items such as pennants, pictures, and a clock. The room is furnished with a table and chairs, and the walls are decorated with wallpaper. The overall atmosphere of the image suggests a family gathering or celebration, possibly during the holiday season.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-21

The image is a black and white photograph of a family of 11 standing in a kitchen. The family consists of two adults, a man and a woman, and nine children, ranging in age from infant to teenager. The man is holding a baby in his arms, while the woman stands beside him. The children are dressed in various outfits, including dresses, sweaters, and button-down shirts. In the foreground, there is a table with chairs and a bowl on it. The background of the image features a kitchen with wooden cabinets and a wall covered in wallpaper. There are also some decorative items on the wall, such as a clock and a picture frame. Overall, the image appears to be a family portrait taken in the 1960s or 1970s, based on the clothing and decor of the time period.

Text analysis

Amazon