Machine Generated Data

Face analysis

Amazon

Microsoft

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Male, 54.9% |
| Sad | 48.9% |
| Calm | 48.7% |
| Surprised | 45.2% |
| Disgusted | 45.5% |
| Happy | 45.3% |
| Angry | 45.5% |
| Confused | 45.9% |

Feature analysis

Amazon

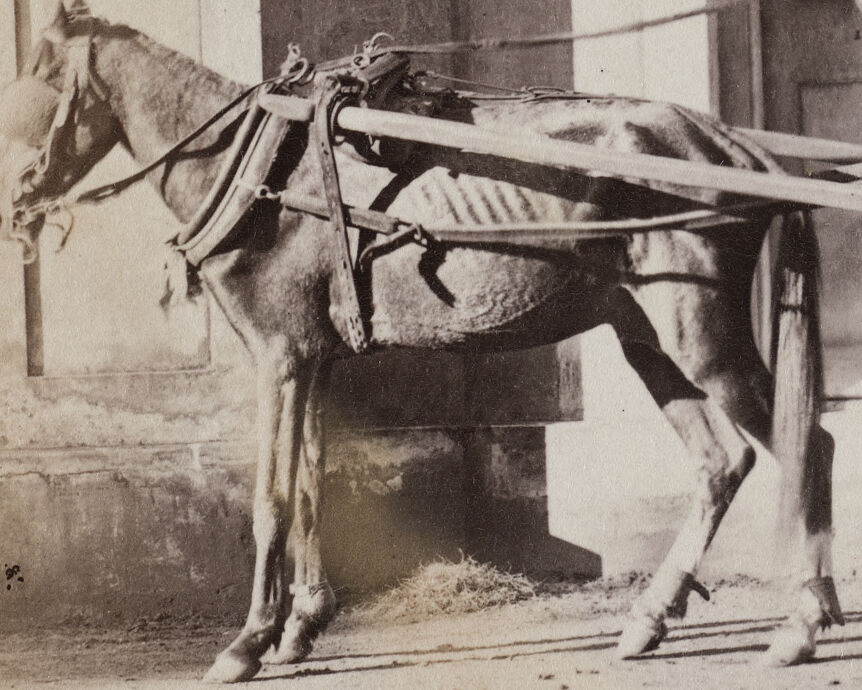

| Label | Confidence |
| --- | --- |
| Horse | 99.7% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 50% |
| paintings art | 26.3% |
| streetview architecture | 18.5% |
| beaches seaside | 2.1% |
| pets animals | 1.1% |
| nature landscape | 0.8% |
| food drinks | 0.4% |
| events parties | 0.4% |
| macro flowers | 0.2% |
| people portraits | 0.2% |

Captions

Microsoft

Created on 2018-10-18

| Caption | Confidence |
| --- | --- |
| a man riding a horse drawn carriage in front of a building | 98.2% |
| a man riding a horse drawn carriage in front of a brick building | 97.9% |
| a horse drawn carriage in front of a building | 97.8% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

The image depicts a vintage scene featuring a horse-drawn carriage parked in front of a building with arched doorways and shuttered windows. The horse is harnessed and standing patiently as a person holds the reins from the driver's seat of the carriage. The carriage itself has large wooden wheels and is occupied by several passengers. The architecture of the building in the background has ornate elements, contributing to the historical ambiance of the scene.

Created by gpt-4 on 2024-11-21

This is a sepia-toned historical photograph showing a scene with a horse-drawn carriage. The carriage is parked in front of what appears to be an entrance to a building with tall arched doorways and shutters. The architecture has decorative elements above the doors. The carriage has large spoked wheels and seems to be designed for carrying multiple passengers. A horse harnessed to the front of the carriage is visible on the left. The horse's body language suggests either movement or stillness, but it is outfitted with a harness, ready to pull the carriage. The location or historical period of the image isn't clear from the details provided.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This is a historical black and white photograph showing a horse-drawn carriage in what appears to be a colonial or tropical setting. The architecture features Gothic-style arched doorways and windows. The carriage is a simple transport wagon with large wooden wheels and is being pulled by a single horse. Several passengers can be seen in and around the carriage. The overall composition suggests this image was taken in the late 19th or early 20th century. The building in the background has distinctive architectural elements including decorative quatrefoil designs above the arched doorway. The image provides an interesting glimpse into historical transportation methods and colonial architecture.

Created by claude-3-haiku-20240307 on 2024-11-21

The image appears to depict a street scene in what seems to be an older, historical setting. A horse-drawn cart is visible, with several individuals riding in it or standing around it. The cart is positioned in front of a building with an ornate architectural detail above the doorway. The individuals in the image are wearing clothing that suggests this is an older time period, possibly late 19th or early 20th century. The overall scene gives a sense of a bustling, lively street activity in a historical urban environment.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image depicts a black-and-white photograph of a horse-drawn carriage, with four men standing around it. The carriage is positioned in front of a building, and the men are dressed in traditional Indian attire.

**Key Features:**

* **Carriage:** The carriage is pulled by a single horse and has a wooden frame with a roof. It appears to be a simple, open-air vehicle.
* **Men:** There are four men in the image, all wearing traditional Indian clothing such as turbans and dhotis. One man is sitting in the carriage, while the other three are standing around it.
* **Building:** The building behind the carriage has a large arched doorway with shutters. The walls of the building are made of stone or brick, and there are decorative carvings above the doorway.
* **Horse:** The horse pulling the carriage is a small, dark-colored animal with a harness around its neck and body.

**Overall Impression:** The image suggests that it was taken in India during the colonial era, possibly in the late 19th or early 20th century. The traditional clothing and architecture in the image are consistent with this time period. The image may have been taken by a European photographer who was traveling in India at the time.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-01

The image depicts a horse-drawn carriage, likely from the late 19th or early 20th century, based on the style of clothing and the architecture in the background.

* The carriage is pulled by a horse that is harnessed to the front of the carriage.
* The horse is brown and appears to be a draft horse.
* The harness consists of a yoke and breastplate that are attached to the carriage.
* The reins are held by a man sitting on the carriage, who is wearing a light-colored shirt and shorts.
* The carriage itself is a large, open vehicle with a roof and sides.
* It has a wooden frame and a canvas or leather top.
* There are several people inside the carriage, including a man and woman sitting in the back and two men standing on the steps at the front.
* The carriage has a large wheel with spokes that is attached to the front axle.
* In the background, there is a building with a large doorway and windows.
* The building appears to be made of stone or brick and has an ornate design.
* There are several people standing outside the building, including a man in a turban and a woman in a long dress.
* The overall atmosphere of the image suggests that it was taken in a warm climate, possibly in India or another part of South Asia.
* The people in the image are dressed in clothing that is typical of the region, and the architecture of the building is also consistent with the style found in South Asia during this time period.

Overall, the image provides a glimpse into the daily life of people in a small town or city in South Asia during the late 19th or early 20th century.