Machine Generated Data

Feature Analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Monitor | 79.6% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| food drinks | 74.8% |
| cars vehicles | 22.5% |
| interior objects | 1.7% |

Captions

Microsoft

Created by unknown on 2018-11-05

| Caption | Confidence |
| --- | --- |
| a close up of a screen | 69.2% |
| a close up of a computer screen | 58.1% |
| a close up of a sign | 58% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-12

| Caption | Confidence |
| --- | --- |
| a photograph of a toy car with a red and white stripe on it | -100% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

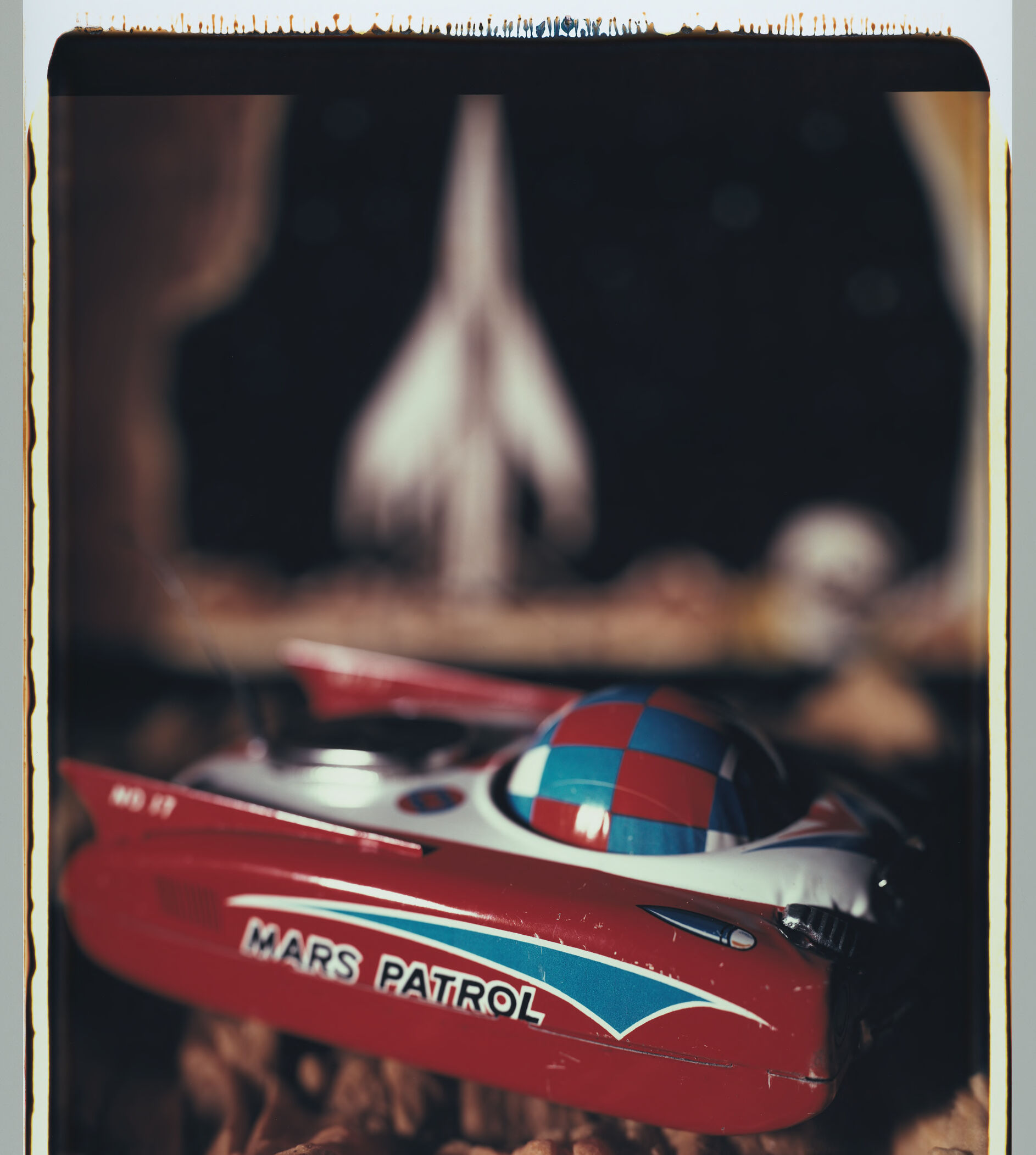

The image features a toy model that seems to embody a retro, sci-fi theme. In the foreground, there is a detailed model of a red spacecraft labeled "Mars Patrol." The design of the spacecraft includes some distinguishing features like a red and blue checkered dome and sleek aerodynamic contours typical of mid-20th-century sci-fi aesthetics. In the blurred background, there appears to be a large model of a futuristic rocket, pointing upwards against a dark backdrop. The image has an overall vintage feel, emphasized by the border, which includes some visible distress and edging indicative of an old print or photograph. The bottom edge of the image includes a signature dating the piece to 2007.

Created by gpt-4 on 2024-11-19

This image features a vintage-style toy shown in an artistic setting. The toy resembles a red space vehicle with the words "MARS PATROL" written on its side, a clear plastic bubble canopy on top, and a checkered pattern on the rounded front section. The vehicle is slightly out of focus and rests on a surface that appears to be covered with small, irregularly shaped objects that could be rocks, simulating a Martian landscape.

In the background, there is a blurred shape that resembles a flame or rocket exhaust, which may suggest that the toy is preparing for a simulated lift-off, adding a sense of motion or purpose to the toy's portrayal. The image is framed with a border that has the characteristics of a Polaroid, including a thicker lower edge where it seems to have been signed by the photographer with the date '2007' and the numerator/denominator form '1/1', suggesting this could be a unique print or part of a limited series.

The overall look imparts a nostalgic and artistic representation of the toy, which could be appealing to collectors and enthusiasts of vintage or space-related memorabilia. The image exudes a sense of wonder and adventure that is often associated with space exploration toys and the era from which it may have originated.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This image shows a vintage toy "Mars Patrol" spacecraft or vehicle. It appears to be from the retro space age era, with a distinctive red and blue color scheme. The vehicle has a sleek, futuristic design typical of 1950s or 1960s space toys, with "MARS PATROL" written on its side in bold letters. The toy features a checkered blue and red dome or cockpit section. The photograph has a nostalgic quality, with a shallow depth of field that blurs the background while keeping the toy in sharp focus. It seems to be shot in a Polaroid or vintage photographic style, with characteristic border effects around the edges of the frame.

Created by claude-3-haiku-20240307 on 2024-11-19

The image shows a vintage toy vehicle, specifically a Mars Patrol vehicle. The vehicle appears to be a red and white toy spacecraft or rocket, with a checkered pattern on the sides and a rounded top. In the background, there is an indistinct and blurred scene, creating a sense of depth and atmosphere. The overall image has a nostalgic and vintage feel, reminiscent of classic science fiction or space-themed toys and collectibles.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image depicts a toy spaceship with the words "MARS PATROL" written on it, set against a blurred background. The toy is red and white, with a blue and red checkered pattern on its top. It has a distinctive shape, with a pointed nose and a curved tail section. The words "MARS PATROL" are written in white letters on the side of the toy, with a blue arrow pointing towards the front.

The background of the image is blurry, but it appears to be a dark-colored surface with some lighter-colored objects in the distance. The overall effect is one of a toy spaceship sitting on a table or shelf, with the background blurred out of focus.

The image has a vintage feel to it, with a sepia-toned color scheme and a distressed border around the edges. This suggests that the image may have been taken using an older camera or film stock, or that it has been intentionally processed to give it a retro look. Overall, the image is a charming and nostalgic depiction of a toy spaceship, evoking memories of childhood play and imagination.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-01

The image shows a toy Mars Patrol spaceship with a red, white, and blue color scheme. The spaceship has a distinctive design, with a red body and a white top section featuring a blue and red checkered pattern. The words "MARS PATROL" are written in white letters on the side of the spaceship.

In the background, there is a blurry image of a rocket ship, which appears to be a model or a toy. The overall atmosphere of the image suggests that it was taken in a home or office setting, possibly as part of a collection of toys or models.

The image has a vintage feel to it, with a distressed border around the edges that gives it a retro look. The overall effect is one of nostalgia and playfulness, evoking memories of childhood and adventure.