Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 29-45 |
| Gender | Male, 55% |
| Sad | 45.6% |
| Angry | 45.2% |
| Calm | 53.9% |
| Surprised | 45% |
| Happy | 45.1% |
| Disgusted | 45% |
| Confused | 45.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 57-77 |
| Gender | Female, 52.4% |
| Disgusted | 45.6% |
| Sad | 45.5% |
| Confused | 46.1% |
| Angry | 45.8% |
| Happy | 46.4% |
| Calm | 50.1% |
| Surprised | 45.4% |

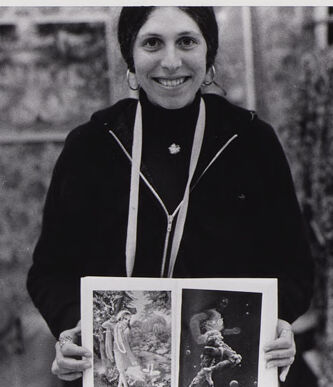

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 99.6% |
| Angry | 0.4% |
| Disgusted | 0.6% |
| Sad | 0.2% |
| Happy | 97.1% |
| Confused | 0.4% |
| Calm | 0.3% |
| Surprised | 1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 54.9% |
| Surprised | 45.3% |
| Calm | 48.7% |
| Angry | 45.8% |
| Sad | 46.5% |
| Happy | 46.8% |
| Confused | 46.2% |
| Disgusted | 45.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-68 |
| Gender | Male, 54.9% |
| Confused | 45.2% |
| Surprised | 45.1% |
| Sad | 45.9% |
| Calm | 47.1% |
| Angry | 45.4% |
| Disgusted | 45.1% |
| Happy | 51.2% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 54.8% |
| Surprised | 45.3% |
| Happy | 48.6% |
| Calm | 47.9% |
| Sad | 46.6% |
| Disgusted | 45.5% |
| Confused | 45.6% |
| Angry | 45.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 54.7% |
| Angry | 45.1% |
| Surprised | 45.1% |
| Sad | 45.1% |
| Happy | 53.7% |
| Confused | 45.1% |
| Calm | 45.9% |
| Disgusted | 45.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 48-68 |
| Gender | Male, 53.1% |
| Confused | 45% |
| Angry | 45.1% |
| Surprised | 45% |
| Calm | 45.1% |
| Disgusted | 45% |
| Happy | 45% |
| Sad | 54.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Female, 53.4% |
| Calm | 45% |
| Sad | 45.2% |
| Happy | 54.5% |
| Surprised | 45% |
| Angry | 45.1% |
| Confused | 45.1% |
| Disgusted | 45% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Male, 50.3% |
| Calm | 49.6% |
| Angry | 49.5% |
| Surprised | 49.5% |
| Sad | 49.5% |
| Happy | 50.3% |
| Confused | 49.5% |
| Disgusted | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 57-77 |
| Gender | Female, 54.6% |
| Confused | 45.1% |
| Disgusted | 45.1% |
| Sad | 53.1% |
| Angry | 45.2% |
| Calm | 46% |
| Happy | 45.5% |
| Surprised | 45.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 55% |
| Angry | 45.1% |
| Sad | 45.1% |
| Disgusted | 45.3% |
| Surprised | 45.5% |
| Happy | 45.7% |
| Confused | 45.1% |
| Calm | 53.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Female, 53.8% |
| Disgusted | 45.1% |
| Sad | 45.2% |
| Confused | 45.1% |
| Angry | 45.2% |
| Happy | 53.9% |
| Calm | 45.3% |
| Surprised | 45.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 50.2% |
| Sad | 49.5% |
| Happy | 49.5% |
| Confused | 49.5% |
| Disgusted | 50.4% |
| Surprised | 49.5% |
| Angry | 49.5% |
| Calm | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Male, 54.1% |
| Calm | 49.8% |
| Surprised | 45.3% |
| Angry | 45.4% |
| Happy | 48.5% |
| Disgusted | 45.4% |
| Confused | 45.2% |
| Sad | 45.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-44 |
| Gender | Male, 54.1% |
| Calm | 52.7% |
| Disgusted | 45.3% |
| Surprised | 45.1% |
| Angry | 45.9% |
| Happy | 45.1% |
| Confused | 45.2% |
| Sad | 45.8% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Female, 52% |
| Happy | 47.8% |
| Confused | 45.3% |
| Calm | 50.1% |
| Surprised | 45.8% |
| Disgusted | 45.4% |
| Sad | 45.2% |
| Angry | 45.3% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Female, 50.5% |
| Calm | 49.9% |
| Surprised | 49.5% |
| Confused | 49.6% |
| Disgusted | 49.6% |
| Happy | 49.6% |
| Sad | 49.7% |
| Angry | 49.6% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 50.5% |
| Angry | 49.5% |
| Disgusted | 49.5% |
| Happy | 49.5% |
| Calm | 49.5% |
| Sad | 50.4% |
| Confused | 49.5% |
| Surprised | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Female, 50.5% |
| Sad | 50.2% |
| Happy | 49.7% |
| Confused | 49.5% |
| Disgusted | 49.5% |
| Surprised | 49.5% |
| Angry | 49.5% |
| Calm | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 27-44 |
| Gender | Female, 50.5% |
| Disgusted | 49.5% |
| Sad | 50.5% |
| Confused | 49.5% |
| Calm | 49.5% |
| Angry | 49.5% |
| Surprised | 49.5% |
| Happy | 49.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 10-15 |
| Gender | Female, 50.4% |
| Confused | 49.6% |
| Happy | 49.8% |
| Calm | 49.5% |
| Disgusted | 49.6% |
| Angry | 49.6% |
| Sad | 49.8% |
| Surprised | 49.6% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 42 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 42 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 59 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 37 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 27 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 59 |
| Gender | Female |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Possible |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Possible |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very likely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very likely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 97.3% |
| text visuals | 2.7% |

Captions

Microsoft

Created on 2018-12-17

| Caption | Confidence |
| --- | --- |
| a room with pictures on the wall | 73.7% |
| a room with art on the wall | 61.1% |
| a photo of a room | 61% |

Azure OpenAI

Created on 2024-11-19

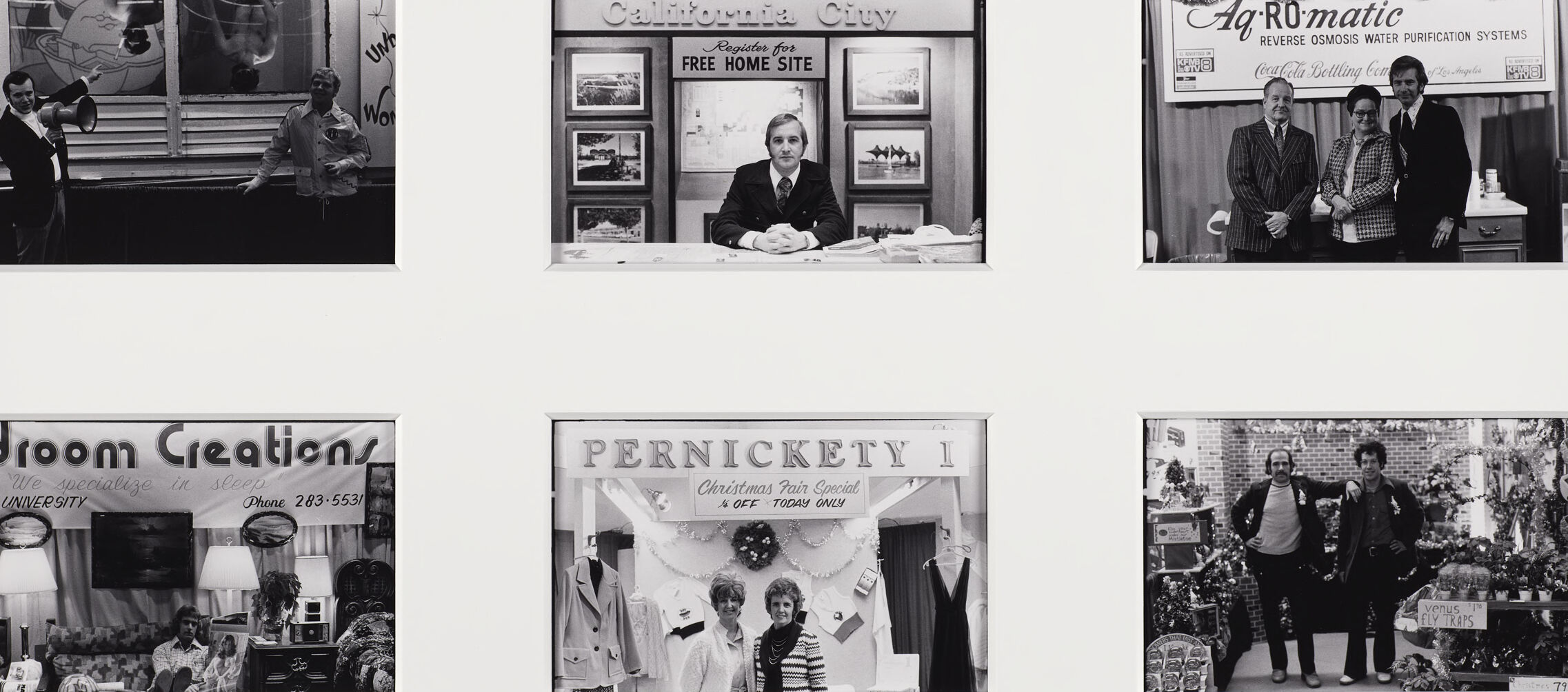

The image is a series of nine framed black and white photographs arranged in a three-by-three grid. Each photograph appears to capture a different scene, likely from commercial settings, featuring mannequins, products, and signs that suggest a retail or business environment.

Starting from the top left corner and moving to the right, the first photo shows a store display with mannequins dressed in fashion attire. The shop name is partially visible at the top. The second image features an interior scene with clothes on display and a prominent central sign with the name "Kay." In the third photo, a scene includes a pair of individuals standing next to each other.

The middle row, from left to right, begins with an outdoor shot of a person seated near a window display. The second image in this row shows a person behind a desk and the text "California City" above, with additional words mentioning a "FREE HOME SITE." The third photo captures two individuals standing behind a counter with a sign reading "Ag-Ro-Matic" above them.

The bottom row features three more scenes, starting on the left with an interior setting that includes furniture and decor, with "Bedroom Creations" written at the top. The middle photograph shows two individuals inside a store with a sign that reads "PERNICKETY I" above them. The final photo on the bottom right captures two individuals in a store or market setting with a variety of items visible around them.

Each photograph seems to offer a glimpse into different aspects of commerce or daily life, highlighted by the presence of advertising and commercial products. The collection has an artistic or documentary style, capturing scenes that might tell stories about the places and the era in which they were taken.

Anthropic Claude

Created on 2024-11-19

This image appears to be a collage of various black and white photographs depicting different scenes from what seems to be a period in history. The photographs show a variety of people, businesses, and activities, providing a glimpse into the culture and daily life of that time. The images range from a group of women in a shop, to a man sitting at a desk in a storefront, to a group of men posing for a photograph. The overall impression is one of a historical record captured through the lens of a photographer.

Meta Llama

Created on 2024-12-01

The image presents a collection of nine black-and-white photographs, arranged in three rows of three, each featuring a distinct scene. The photographs are set against a white background and appear to be from the same event or exhibition, likely a trade show or exhibition.

**Photograph 1:** The top-left photograph depicts four women standing in front of a table, with a sign reading "Kay" in the background. The women are dressed in attire that suggests they may be vendors or exhibitors.

**Photograph 2:** The top-center photograph shows three individuals seated at a table, with a sign behind them that reads "California City." The individuals are dressed in business attire, and the sign suggests that this may be a promotional display for the city.

**Photograph 3:** The top-right photograph features a woman holding up a picture, with a sign behind her that reads "Ag-Romatic." The woman is dressed in a dark-colored jacket, and the sign suggests that this may be a product display.

**Photograph 4:** The middle-left photograph shows two men standing in front of a window, with a sign on the wall that reads "Bedroom Creations." The men are dressed in suits, and the sign suggests that this may be a display for a furniture or home decor company.

**Photograph 5:** The middle-center photograph depicts a man sitting at a desk, with a sign behind him that reads "California City." The man is dressed in a suit, and the sign suggests that this may be a promotional display for the city.

**Photograph 6:** The middle-right photograph shows three men standing in front of a sign that reads "Ag-Romatic." The men are dressed in suits, and the sign suggests that this may be a product display.

**Photograph 7:** The bottom-left photograph features a sign that reads "Bedroom Creations," with a lamp and other furniture items in the background. The sign suggests that this may be a display for a furniture or home decor company.

**Photograph 8:** The bottom-center photograph depicts a sign that reads "Pernickety," with a woman standing in front of it. The woman is dressed in a striped shirt, and the sign suggests that this may be a display for a clothing or accessory company.

**Photograph 9:** The bottom-right photograph shows two men standing in front of a sign that reads "Ag-Romatic." The men are dressed in suits, and the sign suggests that this may be a product display.

Overall, the photographs appear to be showcasing various products, services, and attractions from the event or exhibition. The signs and displays suggest that the event may have been focused on home and garden products, as well as local businesses and attractions.