Machine-Generated Data

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Male, 96.8% |
| Surprised | 0.7% |
| Disgusted | 88.3% |
| Confused | 1.7% |
| Happy | 0.5% |
| Calm | 1.5% |
| Sad | 0.7% |
| Angry | 6.6% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 83.4% |

Categories

Imagga

| Category | Confidence |
|---|---|
| nature landscape | 72.3% |
| pets animals | 8.8% |
| paintings art | 8.8% |
| food drinks | 5.1% |
| interior objects | 2.6% |
| streetview architecture | 1.4% |
| events parties | 0.4% |
| people portraits | 0.4% |
| cars vehicles | 0.1% |

Captions

Microsoft

Created on 2018-02-19

| Caption | Confidence |
|---|---|
| a man sitting on a table | 35.2% |
| a man sitting at a table | 35.1% |
| a person sitting on a table | 35% |

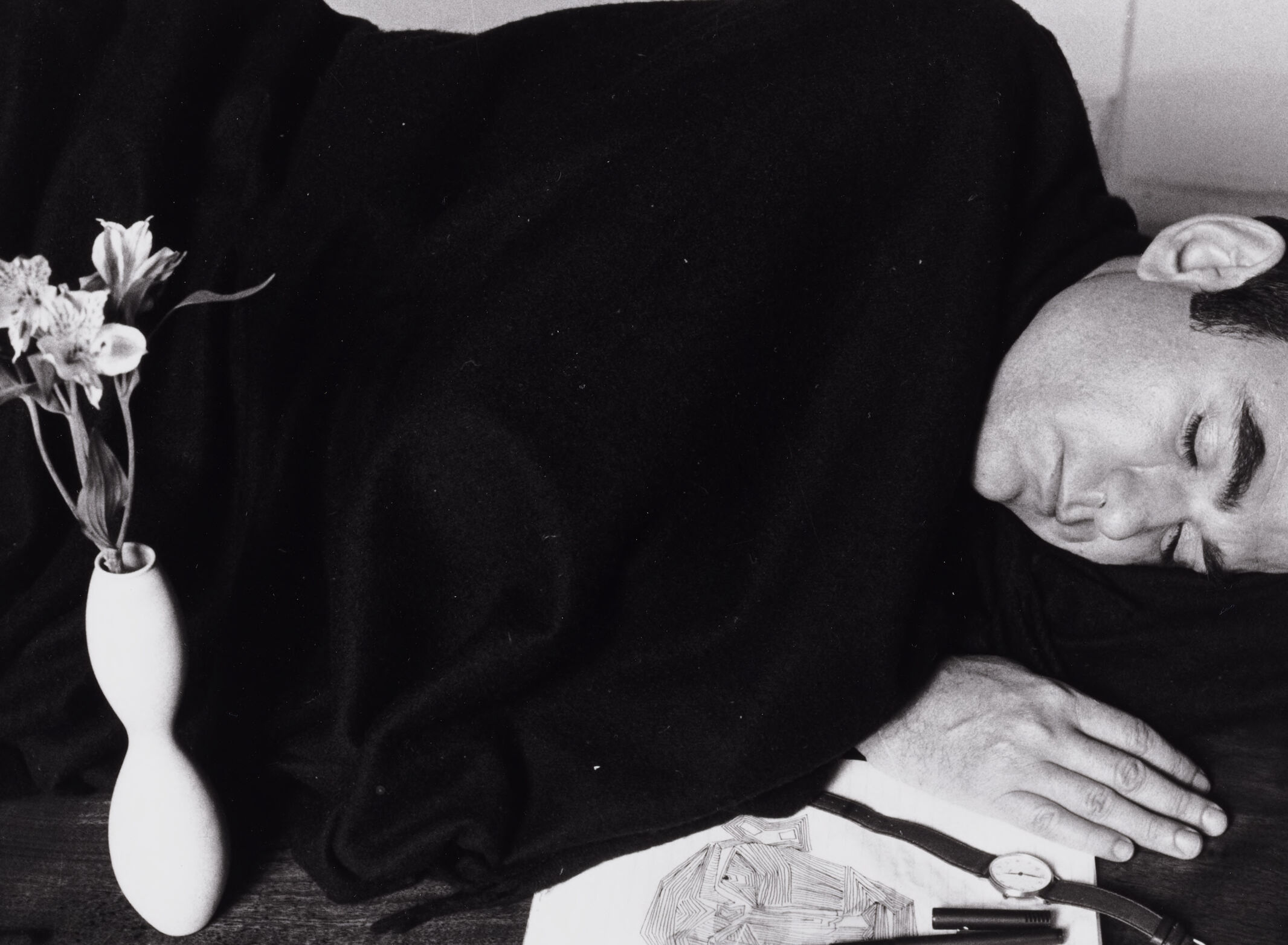

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-30

The black-and-white image features a man lying on his side with his eyes closed, covered in a dark blanket, possibly asleep or resting. The man's head is resting on a surface, and his arm is extended with his hand close to some objects in front of him. These objects include a wristwatch, a pen, and a drawing or intricate sketch on a piece of paper. Additionally, there is a vase with flowers in it placed prominently in the foreground, creating a juxtaposition between the natural elements and the resting figure. The overall setting is calm and introspective.

Created by gpt-4 on 2024-11-18

You are looking at a black and white photograph that features a person with their eyes closed, appearing to be resting or perhaps posing as if asleep. The person is lying on their side on a flat surface, with their head resting on an outstretched arm. In the foreground, there is a vase with a floral arrangement on the left, and at the bottom right, you can see some items arranged next to the person's other hand: a wristwatch, a pen, and a piece of paper with what looks like a drawing or diagram. The backdrop and the surface the person is lying on are covered with a dark cloth, which provides a stark contrast to the white vase and the lighter-toned objects. The image conveys a serene and somewhat artistic composition, combining elements which could symbolize time (the wristwatch), creativity or work (the pen and paper), and life or growth (the flowers). The choice of black and white enhances the textural details and lends the image a timeless quality.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This is a black and white artistic photograph showing a dramatic composition. In the foreground, there's a white vase with delicate flowers, likely lilies, and beside it lies a piece of paper with what appears to be a geometric sketch or drawing. A fountain pen rests near the paper. The background is dominated by dark fabric or cloth, creating a strong contrast with the white elements. A person appears to be resting or sleeping in the frame, with just the side of their head visible at the edge of the image. The overall composition has a contemplative, perhaps melancholic mood, suggesting themes of creativity, rest, and artistic expression.

Created by claude-3-haiku-20240307 on 2024-11-18

The image shows a black and white photograph depicting a person reclining on a couch with a vase of flowers beside them. The person's face is visible, and they appear to be sleeping or resting with their eyes closed. The photograph has a somber, contemplative mood, with the contrast between the stark black and white tones and the delicate flowers creating a sense of solemnity and introspection.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image is a black-and-white photograph of a man sleeping on a table, with his head resting on his arm. The man has short dark hair and is wearing a dark-colored shirt. His right hand is resting on top of a piece of paper with a drawing on it, and there are two pens or pencils next to his hand. To the left of the man is a white vase with flowers in it. The background of the image is dark, with a white wall visible behind the man's head. The overall atmosphere of the image is one of quiet contemplation, with the man's peaceful sleep and the stillness of the objects around him creating a sense of calm.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-01

The image depicts a man sleeping at a desk, with his head resting on his arm and his hand positioned over a piece of paper featuring a sketch. The man is dressed in a dark-colored sweater and has short hair. A vase containing flowers sits to his left, accompanied by a pen and watch on the desk. The background of the image appears to be a wall, although it is not clearly visible due to the man's position. The overall atmosphere of the image suggests that the man may have been working on the sketch before falling asleep, possibly due to exhaustion or boredom. The presence of the flowers and the watch on the desk adds a touch of elegance and sophistication to the scene, which contrasts with the man's relaxed and sleepy demeanor.