Machine Generated Data

Tags

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 0-6 |
| Gender | Female, 51.6% |
| Calm | 98.1% |
| Surprised | 6.3% |
| Fear | 5.9% |
| Sad | 2.3% |
| Happy | 0.9% |
| Disgusted | 0.2% |
| Confused | 0.1% |
| Angry | 0.1% |

Feature analysis

AWS Rekognition

| Label | Confidence |
| --- | --- |
| Person | 98.6% |

Categories

Imagga

created on 2023-07-06

| Category | Confidence |
| --- | --- |
| paintings art | 51.4% |
| nature landscape | 25.7% |
| food drinks | 8.8% |
| streetview architecture | 4.2% |
| pets animals | 3.2% |
| people portraits | 3.1% |
| cars vehicles | 2% |

Captions

Microsoft

created by unknown on 2023-07-06

| Caption | Confidence |
| --- | --- |
| a group of stuffed animals on a city street | 51.1% |
| a group of people on a city street | 51% |
| a person holding a dog | 45.5% |

Clarifai

No captions written

Salesforce

Created by general-english-image-caption-blip-2 on 2025-07-01

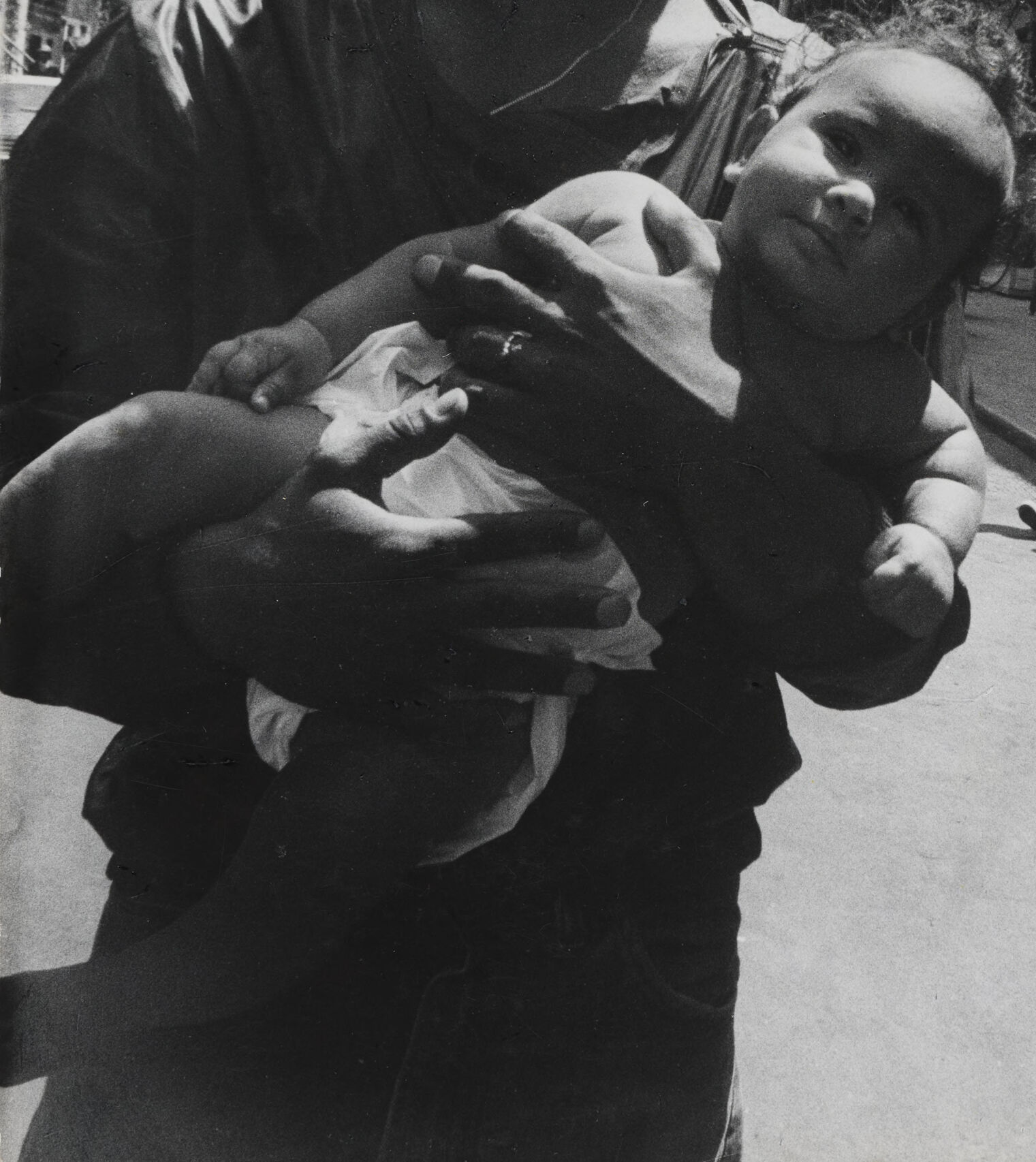

a man holding a baby in the middle of a city street

Created by general-english-image-caption-blip on 2025-05-04

a photograph of a man holding a baby in a city street

OpenAI GPT

Created by gpt-4o-2024-11-20 on 2025-06-16

The image depicts an individual wearing a jacket and holding a small child in their arms. The setting appears to be an urban neighborhood, with buildings featuring fire escapes and streetlights visible in the background. The street has a few pedestrians, creating a casual, everyday city scene. The photograph is in black and white, adding a timeless quality to the composition.

Created by gpt-4o-2024-08-06 on 2025-06-16

The image depicts an urban street scene with a person holding a baby. The person is wearing what appears to be a leather jacket and stands in the foreground of the image. The baby is wrapped in a light cloth or garment and is cradled in the person's arms. In the background, there are several multi-story buildings with typical urban architectural features, such as fire escapes. The street has a few other people visible, including someone in a striped shirt walking in the distance. The image appears to be in black and white or has a grayscale effect.

Anthropic Claude

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-28

This is a black and white photograph taken on what appears to be a New York City street, likely from several decades ago. The image shows a parent holding a young child in their arms on a city sidewalk. The urban setting is characterized by tall apartment buildings with fire escapes, and there are some string lights or decorations visible hanging across the street. The composition has an intimate, candid quality, capturing a tender moment between parent and child against the backdrop of city life. The photograph appears to be shot from a low angle, looking upward at the subjects, which creates a dramatic perspective against the urban landscape.

Created by claude-3-haiku-20240307 on 2025-01-01

The image depicts two individuals, a man carrying another man, in what appears to be an urban setting. The background shows buildings, lights, and other figures in the street. The scene conveys a sense of intimacy and care, with the man supporting the other figure. The black and white photograph creates a stark, dramatic tone to the image.

Created by claude-3-opus-20240229 on 2025-01-01

The black and white photograph shows a man holding an infant child on a city street. The man has a beard and is looking down at the child cradled in his arms. The street behind them has old brick buildings lining both sides, with fire escapes and clotheslines visible. A few other people can be seen walking on the sidewalk in the background. The scene gives an intimate glimpse of a tender moment between an adult and child in an urban neighborhood setting.

Created by claude-3-5-sonnet-20241022 on 2025-01-01

This is a black and white photograph taken on what appears to be a New York City street, likely from several decades ago. The image shows what appears to be a parent and child moment, captured on a city block lined with classic apartment buildings with fire escapes. String lights can be seen hanging above the street, and there's a figure visible in the background. The architecture and urban setting are characteristic of neighborhoods like the Lower East Side or similar areas of Manhattan. The composition creates an intimate street photography perspective that captures a slice of city life.

Meta Llama

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of a man holding a baby on a city street. The man is wearing a dark shirt and has short hair. He is holding the baby with his right arm, and the baby is wearing a white onesie. The baby has dark hair and is looking at the camera.

In the background, there are several buildings, including a row of brownstones with fire escapes. There are also some people walking on the sidewalk, and a few trees and streetlights. The sky is bright and sunny.

The overall atmosphere of the image is one of warmth and happiness, with the man and baby enjoying a sunny day in the city.

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-06-04

The image is a black-and-white photograph of a man holding a baby in his arms, standing on a city street. The man has short hair and is wearing a dark shirt. He is looking up at the sky with the baby in his arms. The baby is wearing a white shirt and appears to be looking at the camera.

In the background, there are tall buildings lining the street, with fire escapes visible on the sides of the buildings. There are also people walking on the sidewalk and string lights hanging above the street. The overall atmosphere of the image suggests a warm and sunny day in an urban setting.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-05-28

The image is a black-and-white photograph of a man carrying a baby on his shoulder. The man is standing on a street, and there are buildings on either side of the street. The man is looking directly at the camera, and the baby is looking to the left. The baby is wearing a white cloth, and the man is wearing a jacket and a beanie. In the background, there are people walking on the sidewalk, and there are trees and streetlights.

Created by amazon.nova-pro-v1:0 on 2025-05-28

The black-and-white image features a man holding a baby in his arms. The man is wearing a hat and a ring on his finger. The baby is looking directly at the camera. The man and the baby are standing on the street. In the distance, there are people walking on the sidewalk. There are buildings on the side of the street. There are also some hanging lights and fire escapes on the buildings.

Google Gemini

Created by gemini-2.0-flash on 2025-04-30

The black and white photograph, taken from a low angle, features a man holding a baby in his arms in the foreground. The man appears to be of African descent with dark hair, a mustache, and is wearing a plain t-shirt with a thin chain around his neck. He is looking directly into the camera with a somber expression. The baby in his arms is also looking at the camera, with a neutral expression on their face, and is wearing a diaper.

The background shows a street scene in what seems to be a city environment. The buildings on both sides of the street are typical of older urban architecture, with multiple stories and fire escapes visible. There are string lights hung between the buildings, suggesting some kind of celebration or event.

A few other people are visible in the background. On the right side of the frame, a boy wearing a striped shirt and shorts is seen standing on the sidewalk. Other figures can be seen further down the street, though they are not as clear.

The photograph has a gritty and documentary feel, possibly taken in the 1970s or 1980s. The lighting is somewhat harsh, creating strong contrasts and emphasizing the textures of the buildings and clothing.

Created by gemini-2.0-flash-lite on 2025-04-30

Here's a description of the image:

The photograph captures a tender moment between a man and a baby. The man, who appears to be Black or of African descent, is holding the baby close to his chest. He gazes up towards the camera, appearing to be the primary subject, while the baby gazes towards the camera as well.

The image is taken in a bustling urban setting. In the background, tall buildings with fire escapes and multiple windows line the street. There are a few figures of people on the sidewalk, which suggests the location is a residential area or a place with pedestrian traffic. The perspective of the image is taken looking upwards, and the camera is relatively close to the subjects, giving a feeling of intimacy to the scene. The photograph is in black and white, suggesting a vintage feel. The overall atmosphere of the photo exudes a sense of warmth, connection, and tenderness.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-29

The black-and-white photograph depicts an urban street scene with a man holding a young child. The man is wearing a dark shirt and has a beard and mustache. He is holding the child in front of him, with both of them looking directly at the camera. The child appears to be a toddler, dressed in a light-colored outfit and wearing a headband.

The background shows a city street lined with multi-story buildings, some of which have fire escapes on their facades. The buildings have a vintage appearance, suggesting an older part of the city. The street is relatively quiet, with a few people visible in the distance. One person is walking away from the camera, and another person is standing near a parked vehicle. The overall atmosphere of the image is calm and intimate, capturing a moment of connection between the man and the child.

Qwen

No captions written