Microsoft

Created on 2018-02-10

Azure OpenAI

Created on 2024-11-28

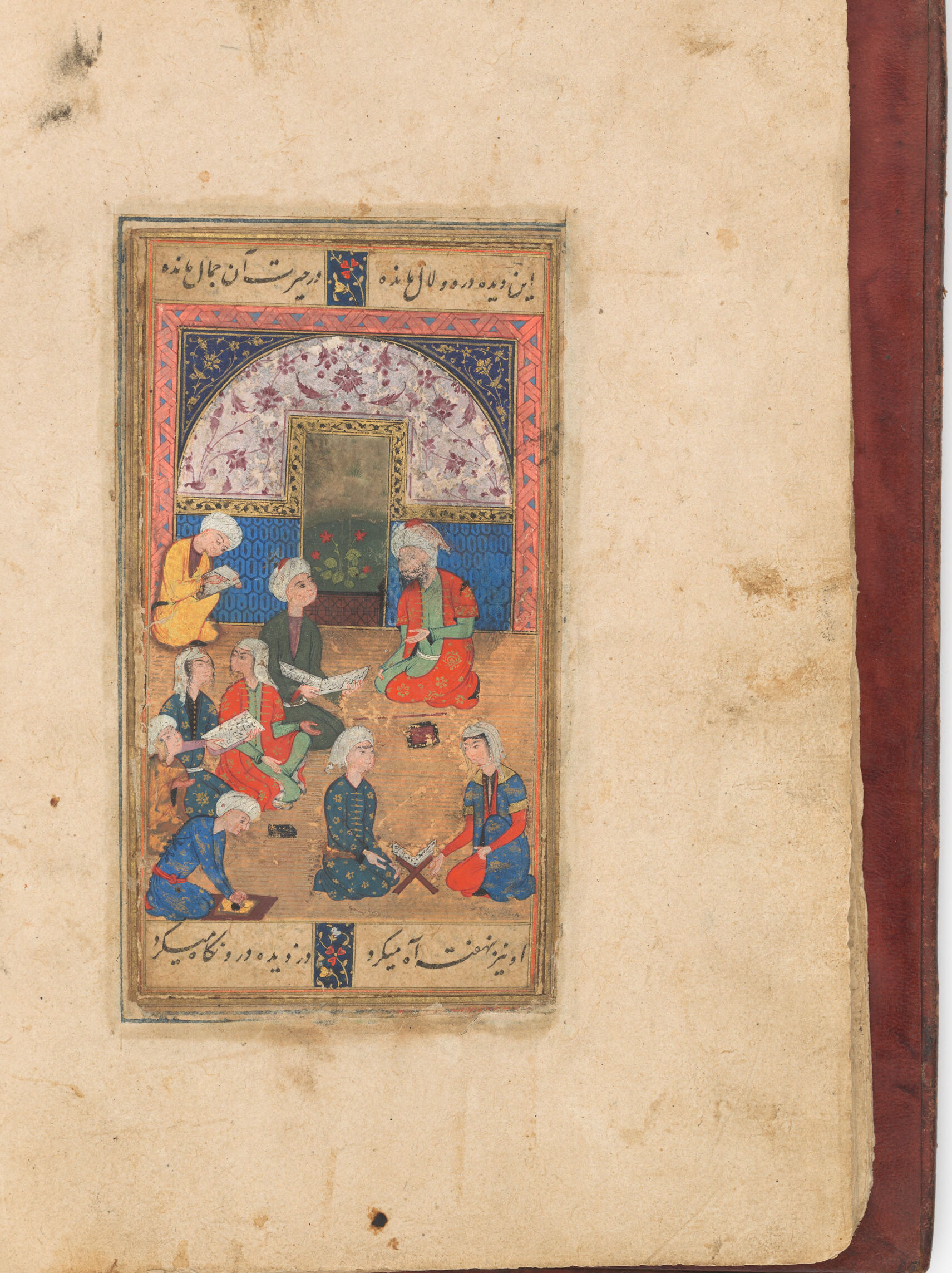

The image shows a page from an illuminated manuscript, likely of Persian origin, judging from the style of the artwork and the script at the top. The illustration shows several seated individuals engaged in what appears to be a courtly or scholarly gathering. The figures wear traditional garments in various hues, and some wear turbans, indicating a historical or cultural setting.

The central scene is framed by an ornate arch, which is decorated with intricate patterns and floral motifs. Above the arch, there is a band of calligraphy, which is common in Persian miniatures, where text and image often accompany each other.

The paper shows signs of age and wear, such as staining and discoloration. This page has been bound into a larger volume, as evidenced by the red-and-brown marbled binding visible on the right edge. The image showcases the detailed artistry and cultural richness of the manuscript tradition of the region from which it originates.

Anthropic Claude

Created on 2024-11-27

The image appears to be an illuminated manuscript page from a Persian or Islamic text. It depicts a group of individuals, likely scholars or sages, gathered in an interior setting. The central figure is seated and surrounded by several others, all dressed in traditional Middle Eastern attire. The page is adorned with intricate floral and geometric border designs, typical of Islamic manuscript art. The overall composition and style suggest this is a historical or religious manuscript from the Middle Eastern region.

Meta Llama

Created on 2024-11-26

The image depicts a page from an old book, featuring a colorful illustration of people in traditional attire gathered around a table. The scene is set against a backdrop of intricate patterns and calligraphy.

* The page is yellowed with age, indicating that it is a vintage or antique item.

* The page has a worn and weathered appearance, suggesting that it has been handled extensively over time.

* The yellowing of the page is likely due to the natural aging process, which can cause paper to turn yellow or brown over time.

* The illustration is rendered in vibrant colors, showcasing a group of people dressed in traditional clothing.

* The individuals are depicted in various poses, some seated on the floor while others stand or sit on chairs.

* The clothing is ornate and detailed, with intricate patterns and designs that reflect the cultural heritage of the region.

* The background of the illustration features a series of arches and geometric shapes, creating a sense of depth and texture.

* The arches are adorned with floral motifs and other decorative elements, adding to the overall aesthetic of the image.

* The geometric shapes are arranged in a symmetrical pattern, creating a sense of balance and harmony.

* The text surrounding the illustration is written in a script that appears to be Arabic or Persian.

* The text is likely a poem or prose passage that accompanies the illustration, providing context and meaning to the image.

* The script is ornate and decorative, with intricate flourishes and calligraphy that add to the overall beauty of the page.

In summary, the image presents a stunning example of traditional art and craftsmanship, showcasing the skill and attention to detail of the artist who created it. The use of vibrant colors, intricate patterns, and ornate calligraphy creates a visually striking image that is both beautiful and meaningful.