Machine Generated Data

Tags

Color Analysis

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 26-43 |
| Gender | Male, 66.3% |
| Surprised | 6.9% |
| Angry | 17.2% |
| Disgusted | 27.2% |
| Confused | 10.8% |
| Happy | 6.6% |
| Calm | 19.9% |
| Sad | 11.3% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.1% |

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 100% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
|---|---|
| a black and white photo of a person | 73.5% |
| a person standing in a room | 73.4% |
| a person in a white room | 73.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

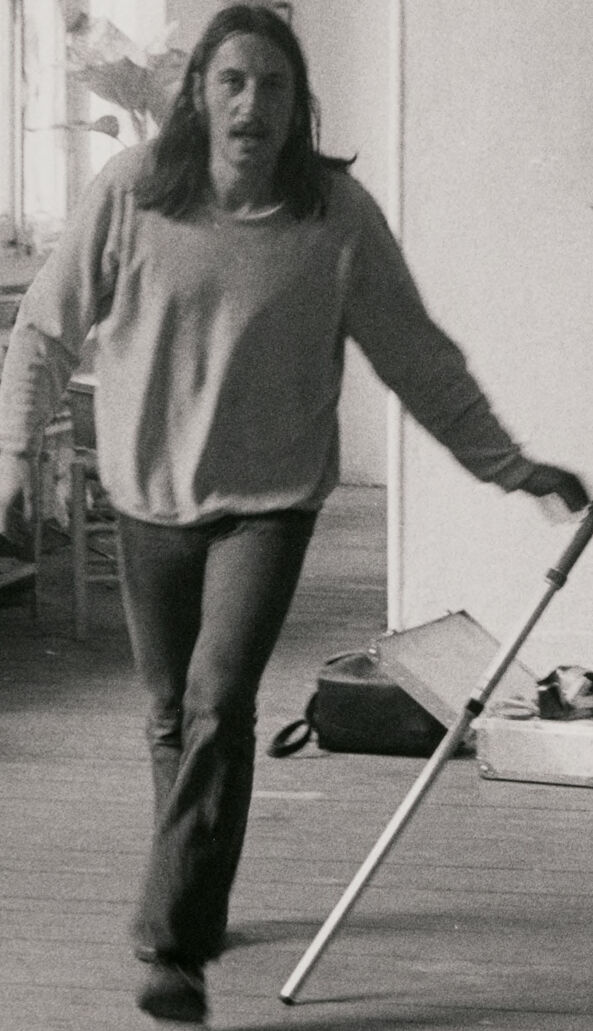

The black-and-white image depicts a person walking in an indoor studio-like space. The area is filled with various objects and furniture, including a camera on a tripod positioned in the foreground. There's a radiator against the wall, miscellaneous items on the floor, and chairs and tables surrounding the person. The room features large windows on the left side, allowing natural light to stream in, creating a well-lit environment. The overall composition suggests an artistic or work-in-progress setting.

Created by gpt-4 on 2024-11-28

This is a black and white photograph showing an interior space that appears to be a studio with various objects scattered around. In the center, there's a camera mounted on a tripod. To the right of the frame, you can see a radiator, and various geometric shapes, possibly artwork or part of an installation, are positioned around the room. A single window on the left side of the image lets in natural light, creating patterns on the floor and wall. There are several items on the floor around the tripod, including what seems to be a pair of shoes and a bag. The overall scene suggests a creative, working environment.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a black and white photograph taken in what appears to be an artist's studio or loft space. The room has wooden floors, tall windows letting in natural light, and a radiator visible along the wall. In the center of the image is a camera mounted on a tripod. A person wearing a casual sweater and pants is captured in motion, appearing to be walking or moving through the space. The overall composition has an informal, documentary feel, typical of art studio photography from what seems to be the 1970s or 1980s. The space has a minimalist quality with white walls and sparse furnishings, including what looks like a chair and some equipment or materials in the background.

Created by claude-3-haiku-20240307 on 2024-11-27

The image shows a person, likely a man, standing in what appears to be an art studio or workshop. The person is holding what looks like a camera or photographic equipment mounted on a tripod. The room contains various art supplies, furniture, and other objects, suggesting it is a creative workspace. The image is captured in black and white, giving it a vintage or historical feel.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image depicts a black-and-white photograph of a man in a studio setting. The man, with long hair, is dressed in a light-colored shirt and dark pants. He appears to be walking towards the camera, his right arm extended as if he is about to touch a tripod positioned in front of him. The tripod holds a camera, which is likely capturing the scene.

In the background, various objects are visible, including a radiator, a chair, and a table. The room's walls are painted white, and the floor appears to be made of wood. Natural light enters the room through windows on the left side, casting shadows on the floor. The overall atmosphere suggests that the man is engaged in some form of artistic or creative activity, possibly photography or videography.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image depicts a woman walking through a room, likely a studio or workspace. She has long hair and is dressed in a light-colored shirt and dark pants. The room is cluttered with various objects, including a camera on a tripod, a radiator, a chair, and several boxes and bags. The walls are white, and the floor appears to be made of wood. The overall atmosphere suggests a creative or artistic space, possibly used for photography or other artistic pursuits.