Machine-Generated Data

Tags

Color Analysis

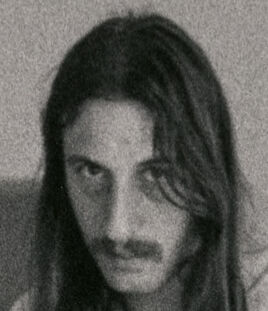

Face Analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 74.9% |
| Angry | 9.5% |
| Disgusted | 2.5% |
| Sad | 28.5% |
| Happy | 3% |
| Calm | 48% |
| Surprised | 2.6% |
| Confused | 5.9% |

Feature Analysis

Amazon

| Label | Confidence |
|---|---|
| Person | 99.2% |

Categories

Imagga

| Category | Confidence |
|---|---|
| interior objects | 89.2% |
| streetview architecture | 9.7% |
| events parties | 0.5% |
| paintings art | 0.3% |
| pets animals | 0.1% |
| food drinks | 0.1% |
| nature landscape | 0.1% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
|---|---|
| a person standing in front of a tripod | 73.9% |
| a person standing in a room | 73.8% |
| a person standing in front of a tripod | 65.8% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-29

The image depicts a person standing on a chair, holding and operating a camera that is mounted on a tripod. They are dressed in casual attire, including a sweater and jeans. The setting appears to be an indoor space with wooden flooring and some pipes and objects in the background.

Created by gpt-4 on 2024-11-28

The image is a black and white photograph depicting an individual standing on a wooden stool. The person is operating a camera mounted on a tripod. They are dressed in a casual long-sleeve top and jeans, with shoes that resemble boots or casual footwear. The setting appears to be an indoor space with wooden flooring, and there's at least one white door or barrier partially visible in the background. The environment gives the impression of a spacious room, perhaps a studio or gallery, given the apparent simplicity and minimalistic style.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a black and white photograph showing a photographer standing on a chair to work with a camera mounted on a tall tripod. The photographer has long hair and is wearing a light-colored sweatshirt and jeans. The setting appears to be an empty indoor space with wooden floorboards. The image has a casual, behind-the-scenes quality to it, capturing someone in the process of setting up or taking a shot. The composition includes the full length of the figure, the rustic wooden chair, and the professional camera equipment, creating an interesting contrast between the informal and technical elements in the scene.

Created by claude-3-haiku-20240307 on 2024-11-27

The image shows a person, whose face is not clearly visible, standing on a wooden stool and holding a camera on a tripod. The person is dressed in a casual outfit, wearing a long-sleeved sweater and jeans. The background appears to be a room or studio with bare walls and a wooden floor. The overall tone of the image is black and white, giving it a moody and contemplative feel.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image is a black-and-white photograph of a man standing in an empty room, holding a camera on a tripod. The man has long hair and is wearing a light-colored sweatshirt, dark pants, and shoes. He is standing on a small chair, with his feet on the seat and his hands on the camera. The camera is mounted on a tripod, which is positioned in front of him.

In the background, there are several objects visible, including a doorway, a wall with a picture or painting on it, and a floor with a few scattered items. The overall atmosphere of the image suggests that the man is preparing to take a photograph, possibly in a studio or other indoor setting.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-26

The image is a black-and-white photograph of a man standing on a stool, holding a camera. The man has long hair and a mustache, and he is wearing a light-colored sweatshirt, dark jeans, and dark shoes. He is standing on a stool with a tripod attached to it, and he is holding a camera with a lens attached to it. The background of the image is a room with white walls and a wooden floor. There are some objects in the background, including a door, a window, and some boxes or bags. The overall atmosphere of the image is one of creativity and focus, as the man is intently looking through the viewfinder of the camera.