Microsoft

Created on 2019-06-25

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

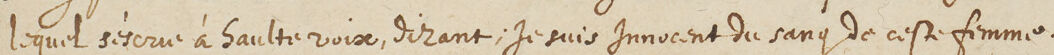

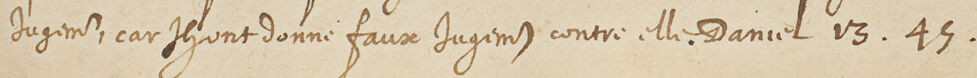

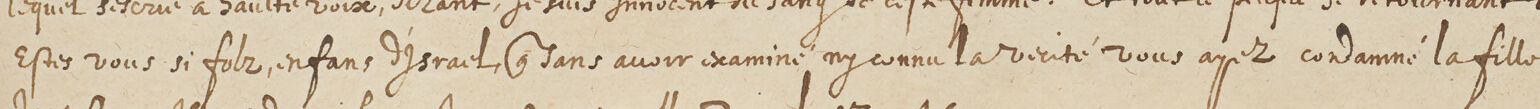

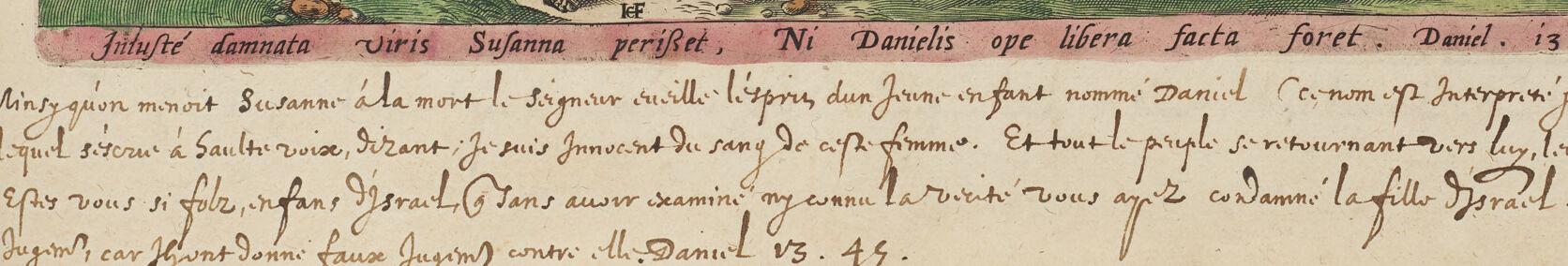

The image is a richly colored illustration from an old book. It depicts an outdoor scene with several people dressed in robes, typical of historical or biblical attire, in a city setting with classical architecture, including domed buildings in the background. The center of the scene appears to involve some form of interaction or gathering, suggesting a moment of narrative significance. There is handwritten text in Latin below the illustration, which likely describes or explains the depicted event.

Created by gpt-4 on 2024-11-13

This is an image of an aged, colorized print from a book, which appears to show a historical or biblical scene set in a public square. Numerous figures are dressed in garments from an earlier era, featuring robes and tunics in vibrant colors such as red, blue, green, and purple. In the background is classical architecture, including buildings with arches and domes that suggest a European city, possibly from the Renaissance or earlier.

The print includes captions and text in Latin, which suggest that the illustration is educational or narrative in nature, perhaps part of a historical or religious text. The right side shows the book's well-worn page edge, with the number 181 at the top, likely the page number. The careful colorization and detailed depiction of the scene suggest that this book was a valuable resource, meant to convey its message to the reader with visual impact.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This is a colorful historical illustration depicting a biblical scene from the Book of Daniel, specifically the story of Susanna. The image shows a crowd gathered in what appears to be a Renaissance-era city, with domed buildings and towers in the background. The figures wear vibrant medieval- or Renaissance-style clothing, with flowing robes in colors such as red, blue, pink, and green.

The scene appears to represent the pivotal moment in the story when Susanna's innocence is proven through Daniel's intervention. The composition includes several groups of people, some standing and others gesturing, arranged in a classical narrative style typical of religious art from this period.

At the bottom of the image, there is text in Latin and what appears to be French, providing context for the biblical scene. The artwork is part of what seems to be an old manuscript or book, as evidenced by the visible page edge and binding on the right side.

The architectural details in the background show a European city setting with characteristic features like arched windows, domed churches, and multi-story buildings, helping to place the biblical narrative in a context familiar to viewers of the time period when this illustration was created.

Created by claude-3-haiku-20240307 on 2024-11-13

The image appears to be an engraved illustration from a historical or religious text. It depicts a crowded street scene in a city, with a group of people dressed in ornate period clothing. The buildings in the background feature domed roofs and other architectural details characteristic of an early European or Middle Eastern urban setting. The people in the foreground seem to be engaged in some sort of gathering or event, though the specific context is not entirely clear from the image alone. The illustration has a vintage, hand-colored appearance, suggesting it may come from an older printed work.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-25

This image depicts a page from an antique book, featuring an illustration of a scene from the Bible. The illustration is rendered in vibrant colors and showcases a group of people gathered around a central figure, who appears to be Daniel. The scene is set against the backdrop of a cityscape, with buildings and a church visible in the distance.

The illustration is accompanied by handwritten text in a foreign language, which adds to the overall sense of antiquity and historical significance. The page itself is yellowed with age, suggesting that it has been preserved for centuries. The image provides a unique glimpse into the artistic and cultural traditions of the past, and serves as a testament to the enduring power of religious art to inspire and educate.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-21

The image depicts a page from an antique book, featuring a colorful illustration of a scene from the Bible. The illustration is surrounded by text in an unknown language, possibly Latin or French, and is accompanied by handwritten notes in the same language.

* The illustration shows a group of people gathered in a town square, with some individuals dressed in robes and others in more casual attire. A man in the center of the group appears to be speaking to the others, while a woman stands nearby, looking on.

* The background of the illustration features buildings and a church steeple, suggesting that the scene takes place in a small town or village.

* The overall atmosphere of the illustration is one of community and discussion, with the people gathered together to hear the words of the speaker.

The image provides a glimpse into the artistic and cultural traditions of the time period in which it was created, and offers insight into the ways in which people have interpreted and represented religious stories throughout history.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-02-27

The image shows an open book with a drawing of a scene from the Bible. The drawing shows a man named Daniel being approached by a man in a yellow robe, who is raising his hand and pointing at Daniel. Other people appear in the drawing, including a man in a red robe and a woman in a blue robe. There is a handwritten note at the bottom of the page.

Created by amazon.nova-lite-v1:0 on 2025-02-27

The image is a page from an old book, featuring a historical illustration and handwritten text. The illustration depicts a scene with several people gathered in an outdoor setting, possibly a town square or a street. The individuals are dressed in ancient Roman clothing, with some wearing togas and others in simpler attire. The scene appears to be a historical event or a depiction of a biblical story.

The illustration is accompanied by handwritten text in Latin, which provides context and description for the image. The text is written in a cursive style and is positioned at the bottom of the page. The book itself is old and has a worn appearance, with a leather cover and a spine that shows signs of wear.

The image captures a moment in history or a religious narrative, with the illustration and text working together to convey the story. The use of Latin suggests that the book may have been written for a scholarly or academic audience, and the illustration adds visual interest and context to the text. Overall, the image offers a glimpse into the past and the rich cultural and historical traditions of ancient Rome or the biblical world.