Machine-Generated Data

Face analysis

AWS Rekognition

| Attribute | Estimate |
|---|---|
| Age | 35-55 |
| Gender | Male, 50.5% |
| Surprised | 45% |
| Angry | 45.1% |
| Happy | 45% |
| Sad | 53.4% |
| Disgusted | 45% |
| Calm | 46.3% |
| Confused | 45.2% |

Feature analysis

AWS Rekognition

| Feature | Confidence |
|---|---|
| Person | 97.2% |

Categories

Imagga

Created on 2019-06-25

| Category | Confidence |
|---|---|
| paintings art | 94.5% |
| text visuals | 3.1% |
| food drinks | 1.7% |

Captions

Microsoft

Created by unknown on 2019-06-25

| Caption | Confidence |
|---|---|
| a close up of a box | 40.3% |
| close up of a box | 33.8% |

Salesforce

Created by general-english-image-caption-blip on 2025-05-12

a photograph of a book with a picture of a man in a suit and a dog

Created by general-english-image-caption-blip-2 on 2025-06-30

a book with an illustration of people in the countryside

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-31

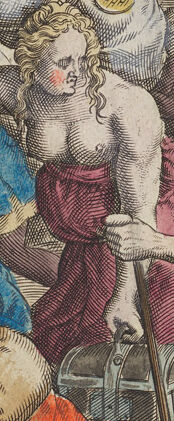

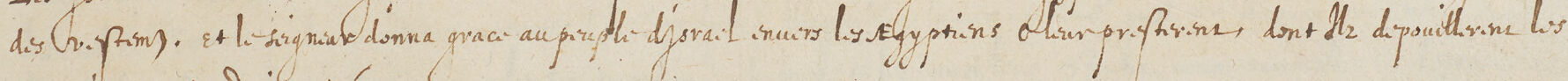

The image depicts a large group of people, seemingly in an ancient setting, filled with hustle and activity. There are individuals carrying sacks and various household items, suggesting that they might be embarking on a journey or exodus. A man with a regal appearance, dressed in a blue tunic with a red cloak and holding a staff, appears to be addressing or leading the group. On the left, there are a few figures, one of whom is kneeling, possibly providing support or aid. In the background, a dense crowd stretches into the distance, emphasizing the large scale of the gathering. The terrain includes a mountainous backdrop with some greenery, and the foreground shows a dog trotting alongside the people. Latin text and a handwritten note appear below the scene, attributing the image to a Biblical event, potentially the Exodus from Egypt.

Created by gpt-4 on 2024-11-19

This image appears to be a page from an illustrated manuscript or an early printed book, showing a colorful, detailed scene set in a historical or possibly biblical context. The image depicts a group of characters dressed in a variety of late medieval or Renaissance garments, suggesting some diversity in social status or role. Several individuals are carrying possessions or guiding animals, suggesting travel or migration.

In the foreground, individuals are engaged in activities such as carrying baskets, some with contents visible, and there's a dog walking through the scene. To the left, one character wearing a red cloak and blue tunic raises a hand, while those around seem to be listening or attending to this figure, indicating some form of leadership or instruction. The background reveals a landscape with mountains, and additional groups of individuals continue the theme of movement with figures seen walking up a slope in the distance.

At the bottom of the illustration, there is a line of Latin text, which is likely to be a caption or description related to the scene depicted above it. The presence of text and page number (55) indicates that this is part of a larger work, such as a chronicle or an illuminated manuscript. The image seems to be hand-colored, which was a common practice for illustrating printed books before the advent of full-color printing technologies.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-27

This appears to be a historical religious illustration depicting the Exodus from Egypt. The scene shows a large group of people carrying possessions, vessels, and goods as they depart. In the foreground, there are figures loading and carrying various items, including baskets and containers. The scene is set against a landscape with turquoise-colored mountains in the background.

The artwork is colorfully rendered with figures dressed in vibrant reds, blues, purples, and greens. A long line of people can be seen winding into the distance, suggesting the mass exodus of the Israelites. There's even a small dog included in the scene.

At the bottom of the image is Latin text, followed by what appears to be French text, likely explaining the biblical scene being depicted. The illustration appears to be from an old book or manuscript, as evidenced by the visible page number and binding.

The style suggests this is likely from a 16th or 17th-century illustrated Bible or religious text, with the characteristic detailed hand-coloring and engraving techniques of that period.

Created by claude-3-haiku-20240307 on 2024-11-19

The image depicts a crowded scene from what appears to be a biblical or historical event. In the center, there are numerous figures engaged in some sort of activity or confrontation, with people of various ages and genders visible. The background includes mountainous terrain and a cloudy sky. The overall scene seems to be chaotic and bustling with activity. The text below the image provides additional context, but I will refrain from identifying or naming any of the human figures shown.

Created by us.anthropic.claude-3-5-sonnet-20241022-v2:0 on 2025-06-26

This appears to be a historical religious illustration depicting the Exodus from Egypt. The image shows a large crowd of people in colorful medieval-style clothing, carrying belongings and traveling together. The scene appears to represent the biblical account of the Israelites leaving Egypt, as indicated by the Latin text below the image which references Exodus 12.

The illustration is hand-colored and features vibrant blues, reds, purples, and greens. The people are shown walking through a mountainous landscape with turquoise-colored hills in the background. Some figures are carrying staffs and bundles, while others are leading animals. The style suggests this is likely from a medieval or Renaissance-era manuscript or religious text.

The composition is dynamic, with the crowd moving from left to right across the image. The artwork combines elements of biblical narrative with artistic conventions of the period in which it was created, as evidenced by the European-style clothing and architectural details.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image depicts a page from an antique book, featuring a colorful illustration of a scene with people and animals. The illustration is accompanied by handwritten text in a foreign language, likely Latin or French, at the bottom of the page.

Illustration:

- The illustration showcases a diverse group of people, including men, women, and children, engaged in various activities.

- Some individuals are carrying baskets, while others are holding weapons or tools.

- A dog is also present in the scene, adding to the dynamic atmosphere.

- The background of the illustration features a mountainous landscape with a body of water, creating a sense of depth and context.

Handwritten Text:

- The handwritten text at the bottom of the page appears to be a caption or description of the illustration.

- The text is written in a flowing script, with some words underlined for emphasis.

- Although the language is not immediately recognizable, it is likely a historical or literary text that provides context for the illustration.

Book Page:

- The book page itself is aged and worn, with visible signs of use and handling.

- The paper is yellowed, and the edges are rough and torn in some places.

- The binding of the book is visible on the right side of the image, with a ribbon bookmark attached to the page.

Overall, the image presents a fascinating glimpse into the past, showcasing a beautifully illustrated page from an antique book. The combination of the colorful illustration, handwritten text, and aged book page creates a captivating visual experience that invites further exploration and interpretation.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-12-01

The image depicts a page from an old book, featuring a colorful illustration of a biblical scene. The illustration is surrounded by text in Latin, which appears to be a description of the scene.

Illustration:

- The illustration shows a group of people gathered around a man who is kneeling and holding a basket.

- The man is wearing a red hat and an orange tunic, and he has a beard and a staff in his hand.

- There are several other people in the scene, including women and children, who are all dressed in traditional clothing.

- In the background, there are mountains and a body of water, which may represent the Mediterranean Sea.

- There are also animals present in the scene, including a dog and a donkey.

Text:

- The text surrounding the illustration is written in Latin and appears to be a description of the scene.

- The text includes phrases such as "Aegypto egrediens populus capit aurea vafa" and "Imponique humeris, tollere quod potuit."

- The text also includes a title at the top of the page, which reads "Exod. 12."

Book:

- The book itself appears to be old and worn, with yellowed pages and a brown cover.

- The book is bound with a leather strap and has a decorative cover with gold lettering.

- The book is open to a page with a large illustration, and the text is written in a decorative font.

Overall, the image suggests that the book is a historical or religious text, possibly from the 16th or 17th century. The illustration and text appear to be related to the story of the Israelites' exodus from Egypt, which is a central theme in the Bible.

Amazon Nova

Created by amazon.nova-lite-v1:0 on 2025-02-27

The image shows a page from an old book with an illustration of a crowd of people. The illustration depicts a large group of people, possibly from ancient times, gathered together in a field. Some of them are carrying baskets, while others are holding sticks or spears. The people seem to be engaged in some kind of activity, possibly a festival or a celebration.

The illustration is surrounded by a pink border, and there is a caption written in a foreign language below it. The caption appears to be a description of the scene depicted in the illustration. The book itself has a worn and aged appearance, with a brown leather cover and a faded spine.

The image provides a glimpse into the past and offers a visual representation of a historical event or cultural practice. The illustration captures the essence of the scene and provides insight into the lives of people from a bygone era.

Created by amazon.nova-pro-v1:0 on 2025-02-27

A page of an open book with a painting of people on the left side and text on the right side. The painting depicts a man standing with a stick in his hand and another man beside him. Several people are walking on the road, and a dog is walking beside them. A mountain is located behind them. The text is written in French.

Google Gemini

Created by gemini-2.0-flash on 2025-04-27

Here is a description of the image:

This is an illustration in a vintage book, possibly a Bible or a religious text, depicting a scene from the Exodus story.

Scene Details:

- Foreground: The image focuses on a group of people, presumably Israelites, leaving Egypt. They are carrying their belongings, including baskets, chests, and sacks, indicating they are taking possessions with them. The figures are dressed in a variety of colors, including reds, blues, purples, and greens.

- Central Figures: In the front left, a man in a red shirt and a wide-brimmed hat is kneeling and gathering items into a basket. A baby sits beside him, holding a fruit or small object. To their right, other people are carrying burdens, including a woman carrying a large basket on her back and a child walking alongside her. A dog runs in front of them.

- Background: In the distance, a large crowd of people is moving away, presumably also Israelites. Some are riding on animals, while others are on foot. They are carrying spears or staffs. The landscape includes green hills and mountains.

- Moses: On the left side of the image, there is a tall figure, likely Moses, dressed in a blue and red robe. He is raising his hand in a gesture of leading or commanding. Another figure stands beside him, holding a staff or spear.

- Text: Below the image is Latin text.

Style and Context:

- The illustration is hand-colored and has a somewhat naive or archaic style, consistent with older book illustrations.

- The illustration has an aged look, with slight discoloration and wear on the pages. The binding is visible on the right side of the image, showing the aged pages.

- The page number, 55, appears in the top corner.

Created by gemini-2.0-flash-lite on 2025-04-27

Here's a description of the image:

Overall Scene:

The image is a detailed, colorful illustration from what appears to be an old book or manuscript. The scene depicts a historical or biblical event, likely the Exodus of the Israelites from Egypt. It's a vibrant, hand-colored print, with a lot of figures and activity.

Key Elements and Composition:

- Foreground:
  - Figures in the foreground are in the midst of collecting and carrying items. A man dressed in red is pouring things from a basket, a child is seated holding a bowl of fruit, and a dog runs in the scene.

- Middle Ground:
  - A large group of people are walking, carrying their belongings. Some are bearing large packs, and there are animals like donkeys.

- Background:
  - A line of soldiers on horseback and foot are visible on a grassy hill in the distance, possibly chasing the Israelites.
  - A man in red and blue robes, possibly Moses, is the center of attention, raising his arm and looking back toward a crowd of people.
  - The illustration features a mountainous background.

Color and Style:

- The image is richly colored, with a palette of reds, blues, greens, yellows, and flesh tones.

- The style is reminiscent of historical illustrations, possibly from the Renaissance or Baroque periods, with a focus on detail and narrative.

Additional Information:

- Latin text and the word "Exod" (Exodus) suggest it could be a depiction of a biblical event.

- There is a page number, "55," on the top right-hand side.

- The book's edge is visible, showing aging and wear, giving it a sense of history.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-04-27

The image is an illustration from an old book, depicting a biblical scene. It shows a group of people, likely the Israelites, leaving Egypt. The scene is colorful and detailed, with various figures in traditional attire, carrying belongings and livestock.

Key elements of the illustration:

Foreground Figures:

- A central figure in red garments is kneeling and holding a child, with another child sitting beside him.

- Nearby, a woman in a green dress is holding a basket on her head.

- A man in a red cloak and crown is pointing towards the distance, possibly indicating the direction of travel.

- A soldier with a spear stands next to the man in the red cloak.

Background:

- The background shows a large group of people walking away, carrying belongings and leading animals, such as donkeys.

- The setting appears to be a hilly landscape, with a body of water visible in the distance.

Text:

- At the bottom of the illustration, there is text in Latin: "Aegypto egressus populus capita aurca vafa, Impoñitque humeris, tollere quod potuit."

- Below this, there is additional text in French, which seems to describe the scene in more detail.

The illustration captures a moment of exodus, with people leaving their homes and carrying their possessions, reflecting a sense of urgency and determination. The detailed clothing and the presence of livestock add to the authenticity of the biblical narrative being depicted.