Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Male, 99.6% |
| Angry | 5.7% |
| Confused | 20.6% |
| Disgusted | 1.7% |
| Happy | 0.8% |
| Surprised | 3.7% |
| Sad | 10.3% |
| Calm | 57.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 67.8% |
| Disgusted | 1.4% |
| Sad | 35.7% |
| Angry | 57.1% |
| Calm | 3.4% |
| Surprised | 0.9% |
| Happy | 0.7% |
| Confused | 0.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 49-69 |
| Gender | Male, 81.4% |
| Surprised | 4.1% |
| Happy | 0.8% |
| Disgusted | 1.4% |
| Calm | 85.4% |
| Sad | 3.1% |
| Angry | 3.3% |
| Confused | 1.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Male, 99.8% |
| Calm | 45.5% |
| Confused | 10.9% |
| Surprised | 4% |
| Disgusted | 5.2% |
| Happy | 1.4% |
| Angry | 18.5% |
| Sad | 14.5% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 35-52 |
| Gender | Male, 73.7% |
| Disgusted | 1.9% |
| Sad | 50.4% |
| Calm | 12.1% |
| Surprised | 4.2% |
| Happy | 2.3% |
| Confused | 3% |
| Angry | 26.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 15-25 |
| Gender | Male, 87.4% |
| Calm | 79.4% |
| Disgusted | 3.6% |
| Angry | 4.8% |
| Happy | 3.1% |
| Confused | 1.7% |
| Sad | 4% |
| Surprised | 3.4% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 95.8% |
| Disgusted | 2.5% |
| Calm | 59.4% |
| Happy | 1.2% |
| Angry | 6.6% |
| Sad | 22.5% |
| Confused | 5.8% |
| Surprised | 2.1% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 20-38 |
| Gender | Female, 54.1% |
| Happy | 45.3% |
| Surprised | 45.2% |
| Disgusted | 45.3% |
| Confused | 45.2% |
| Angry | 45.3% |
| Calm | 47.7% |
| Sad | 50.9% |

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Female, 51% |
| Disgusted | 45.2% |
| Surprised | 45.4% |
| Happy | 48.1% |
| Confused | 45.2% |
| Calm | 46% |
| Sad | 49.9% |
| Angry | 45.3% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 27 |
| Gender | Female |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 30 |
| Gender | Male |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 38 |
| Gender | Male |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Google Vision

| Attribute | Likelihood |
| --- | --- |
| Surprise | Very unlikely |
| Anger | Very unlikely |
| Sorrow | Very unlikely |
| Joy | Very unlikely |
| Headwear | Very unlikely |
| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 81.5% |
| paintings art | 11.3% |
| interior objects | 2.1% |
| text visuals | 1.9% |
| people portraits | 1.5% |
| pets animals | 0.8% |
| cars vehicles | 0.3% |
| events parties | 0.2% |
| nature landscape | 0.2% |
| food drinks | 0.1% |
| beaches seaside | 0.1% |

Captions

Microsoft

Created on 2018-02-09

| Caption | Confidence |
| --- | --- |
| a group of people standing in front of a building | 94.8% |
| a group of people standing in front of a window | 92% |
| a group of people posing for a photo | 91.9% |

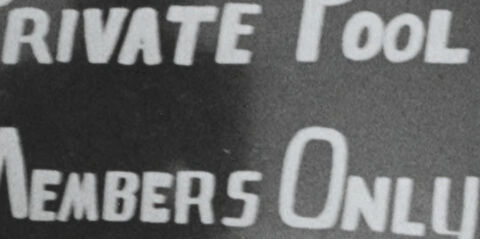

Azure OpenAI

Created on 2024-11-28

The image is a black and white photo depicting a group of individuals standing in front of a doorway. A large sign reads "Private Pool Members Only," suggesting that the location is a private swimming facility with restricted access. The people appear to be in casual attire, and the setting seems to be during a warm weather season given the choice of clothing. The scene suggests a moment from the past, given the vintage feel of the photo and clothing style. The individuals' postures and the presence of the sign hint at a possibly socially significant context, but without further information, it's difficult to ascertain the specific circumstances or the nature of the event captured in the image.

Anthropic Claude

Created on 2024-11-27

The image depicts a group of people, primarily African American men, standing outside a building with a sign that reads "Private Pool Members Only". The sign suggests that this pool is restricted to members only, and the group of individuals appears to be excluded or denied access. The image captures a historical moment of segregation and inequality, highlighting the discriminatory practices that existed at the time.

Meta Llama

Created on 2024-11-26

The image depicts a group of men standing outside a building, with one man holding a sign that reads "PRIVATE POOL MEMBERS ONLY." The men are dressed in casual attire, with some wearing hats and others in shorts or pants. They appear to be waiting in line to enter the building. The sign is prominently displayed on a table, indicating that the pool is restricted to members only. The image suggests that the pool may be a private club or facility, and the men are likely waiting to gain access to it. Overall, the image conveys a sense of exclusivity and membership, with the sign clearly indicating who is allowed to enter the pool area.