Machine Generated Data

Tags

Color Analysis

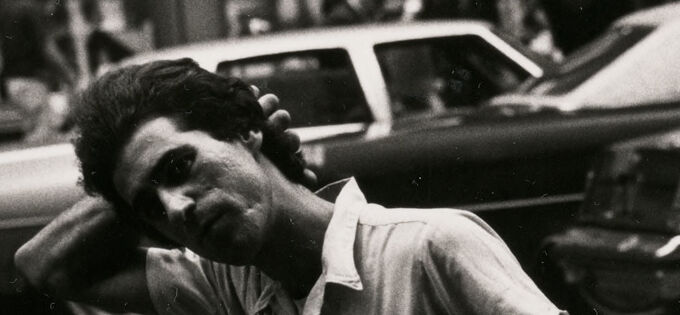

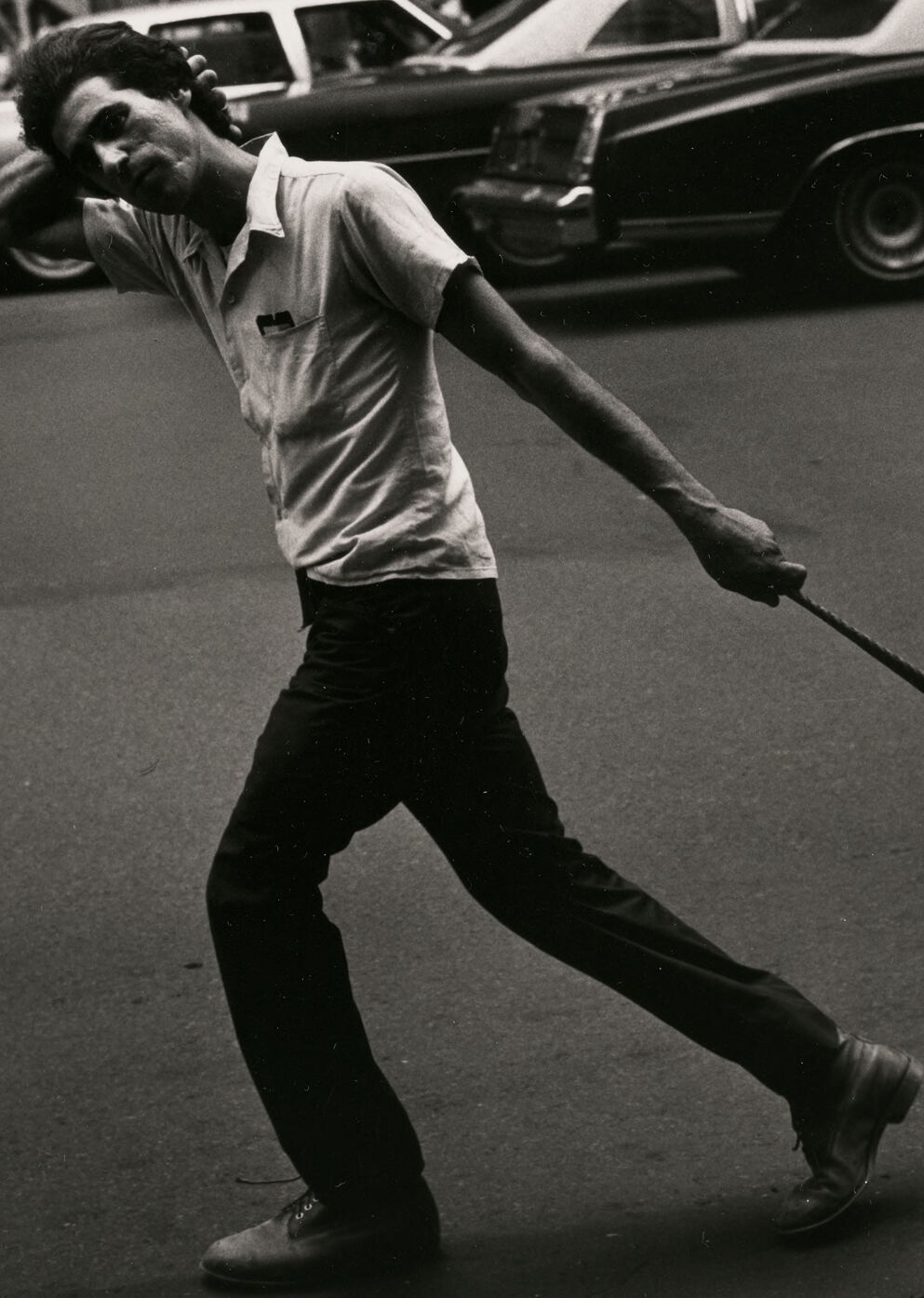

Face analysis

Amazon

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 30-47 |
| Gender | Male, 99% |
| Happy | 0.3% |
| Angry | 14.8% |
| Surprised | 2.4% |
| Sad | 13.5% |
| Disgusted | 2% |
| Calm | 57.2% |
| Confused | 9.8% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Person | 99.8% |

Categories

Imagga

| Category | Confidence |
|---|---|
| events parties | 94.9% |
| cars vehicles | 2.4% |
| food drinks | 1% |
| paintings art | 0.6% |
| nature landscape | 0.5% |
| people portraits | 0.2% |
| pets animals | 0.2% |
| interior objects | 0.2% |
| text visuals | 0.1% |
| streetview architecture | 0.1% |

Captions

Microsoft

Created on 2018-02-10

| Caption | Confidence |
|---|---|
| a man walking down the street | 97.5% |
| a man is walking down the street | 97.4% |
| a man walking down a street | 97.3% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-30

The image is a black-and-white photograph depicting a person crossing a street in an urban environment. The individual is dressed in a short-sleeved shirt and pants, and they are pulling on a leash or rope that appears to be taut. The street is filled with vintage cars, and there are several people walking on the sidewalk and shopping in stores with signs reading "SALE" in the background. The overall scene suggests a bustling city atmosphere.

Created by gpt-4 on 2024-11-28

The image is a black and white photograph capturing a moment on a busy street. In the foreground, an individual is walking across the street, with a focused stride, wearing a short-sleeved polo shirt and dark pants. The person is holding a slender object that extends to the ground, suggesting movement or direction. The street is lined with parked cars that appear to be of designs popular in the latter half of the 20th century. Several shops with signage can be glimpsed in the background, and part of another pedestrian is visible at the far right edge of the frame. The atmosphere suggests an urban setting, and the attire of the individuals indicates a casual or workday environment. The capture of motion in the pedestrians and the stationary nature of the surrounding cars create a dynamic contrast in this slice-of-life scene.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a dynamic black and white street photograph showing someone in an expressive, dance-like pose on what appears to be a city street. The subject is wearing a light-colored shirt and dark pants, and appears to be leaning back dramatically while holding some kind of pole or stick. In the background, there are several parked cars and people walking on the sidewalk. The image has a spontaneous, candid quality that captures a moment of movement and energy in an urban setting. The composition creates an interesting diagonal line through the subject's tilted posture.

Created by claude-3-haiku-20240307 on 2024-11-27

The image depicts a person in casual clothing, likely a man, standing on a city street. He appears to be in the act of moving or dancing, with his arms and legs extended and his expression suggesting a sense of movement or energy. In the background, there are parked cars and other urban elements, creating a street scene context. The image has a black and white, documentary-style aesthetic, suggesting it may be from an earlier era.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

This image is a black-and-white photograph of a man walking down the street, pulling on a leash attached to another person's arm. The man in the foreground is wearing a white collared shirt, dark pants, and dress shoes. He has his right arm extended behind him, holding the leash, while his left arm is bent at the elbow and held close to his head. His body is angled to the right, with his right leg extended behind him and his left leg bent in front of him. In the background, there are several cars parked along the street, as well as people walking around. The overall atmosphere of the image suggests that it was taken in an urban setting, possibly during the daytime. The man's pose and expression convey a sense of tension or struggle, as if he is trying to pull the other person along with him.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-25

The image depicts a man in the midst of a violent act, swinging a long stick or whip at someone who is out of frame. The man is wearing a white polo shirt, dark pants, and shoes, and has short, dark hair. He appears to be in motion, with his right leg extended behind him and his left arm holding the stick or whip. The background of the image shows a busy street scene, with several cars parked along the side of the road and people milling about. A storefront with a "SALE" sign in the window is visible in the distance. The overall atmosphere of the image is one of chaos and violence.