Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 30-47 |
| Gender | Male, 97.2% |
| Happy | 1.8% |
| Disgusted | 9% |
| Confused | 20.6% |
| Calm | 30.4% |
| Angry | 16.4% |
| Sad | 17% |
| Surprised | 4.8% |

Microsoft Cognitive Services

| Attribute | Value |
| --- | --- |
| Age | 36 |
| Gender | Male |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 99.5% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| paintings art | 87.4% |
| people portraits | 9.8% |
| cars vehicles | 1.4% |
| food drinks | 0.5% |
| streetview architecture | 0.4% |
| pets animals | 0.4% |
| interior objects | 0.1% |

Captions

Microsoft

Created on 2018-02-10

| Caption | Confidence |
| --- | --- |
| a man sitting on a bench | 53% |
| a man sitting on a bench next to a window | 31.3% |
| a man sitting on a bench in front of a window | 31.2% |

Azure OpenAI

Created on 2024-11-28

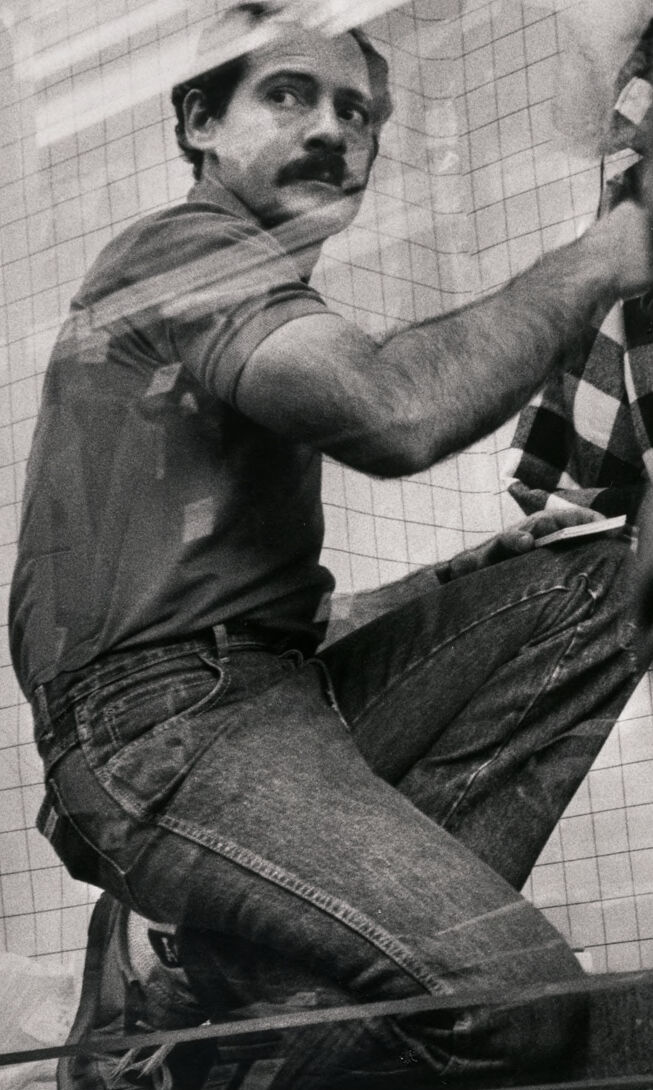

This is a black and white photograph showing an individual seated inside what appears to be a ticket booth or service window. The person inside the booth is wearing a casual short-sleeved shirt and jeans. Directly in the foreground, part of someone's arm is visible, wearing a jacket with distinctive studded decorations on the sleeves. The composition of the image gives the impression of a casual, possibly candid moment captured at some type of service window where the foreground figure might be awaiting service from the individual inside the booth.

Anthropic Claude

Created on 2024-11-27

The image shows a man sitting in a window display, likely meant to be part of a store display or advertisement. He is dressed in casual clothing, including jeans and a jacket, and has a mustache. In the foreground of the image, a hand is visible, gesturing or interacting with the man in the window. The overall scene has a gritty, black and white aesthetic, giving it a somewhat gritty and industrial feel.

Meta Llama

Created on 2024-11-26

The image depicts a black-and-white photograph of two men in a window, with one man sitting on the sill and the other standing outside. The man inside is wearing a t-shirt, jeans, and sneakers, and is holding a striped scarf. He has dark hair and a mustache, and is looking at the camera with a serious expression. The man outside is wearing a dark jacket with white polka dots on the cuffs and is reaching out to touch the window. The background of the image shows a building with white tiles and a balcony, suggesting that the scene is taking place in an urban setting. The overall atmosphere of the image is one of introspection and contemplation, with the two men appearing to be lost in thought.