Machine Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
| --- | --- |
| Age | 26-43 |
| Gender | Male, 84.2% |
| Happy | 1% |
| Angry | 4.3% |
| Disgusted | 1.4% |
| Calm | 56.4% |
| Sad | 23.1% |
| Surprised | 5.8% |
| Confused | 8% |

Feature analysis

Amazon

| Label | Confidence |
| --- | --- |
| Person | 97.2% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| streetview architecture | 80.1% |
| paintings art | 8.7% |
| interior objects | 3.7% |
| pets animals | 3.2% |
| cars vehicles | 2.2% |
| people portraits | 1% |
| nature landscape | 0.4% |
| food drinks | 0.4% |
| text visuals | 0.1% |
| beaches seaside | 0.1% |

Captions

Microsoft

Created by unknown on 2018-03-23

| Caption | Confidence |
| --- | --- |
| a close up of a car | 27.7% |
| close up of a car | 26.5% |
| a black car | 12.8% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
| --- | --- |
| a photograph of a woman is looking out the window of a plane | -100% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-29

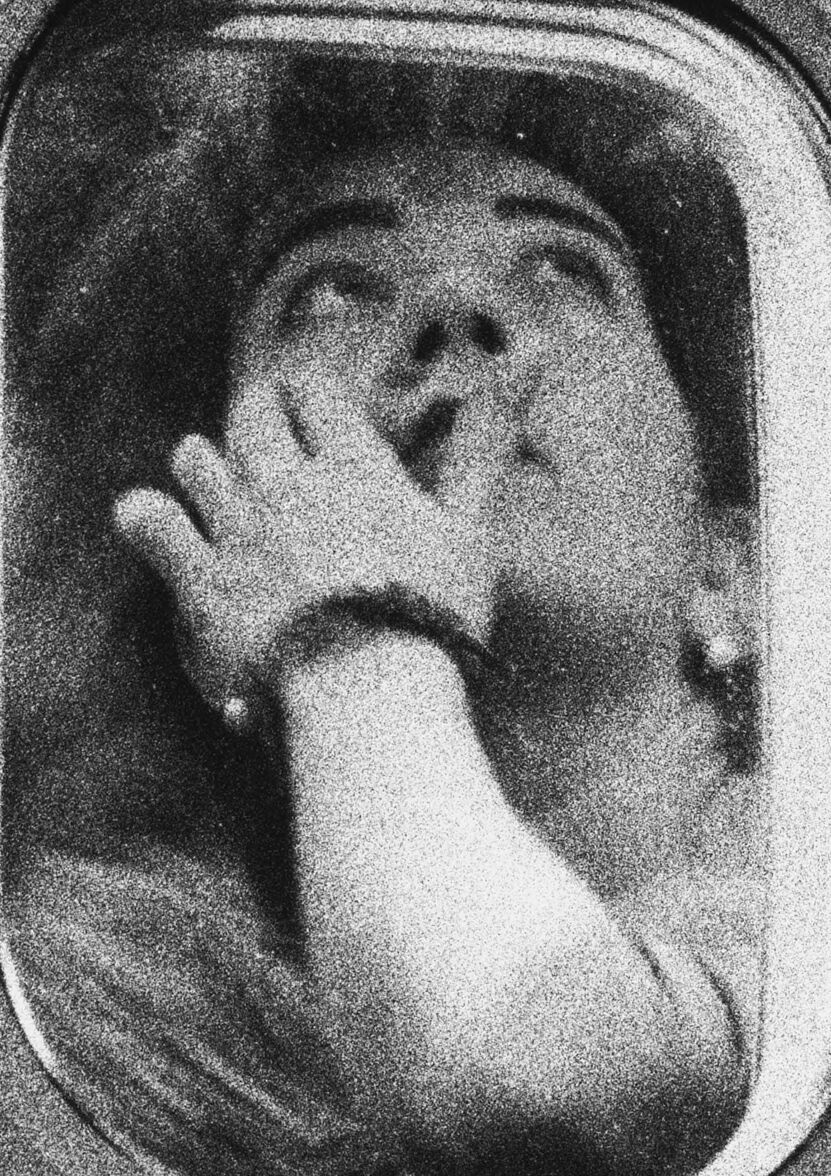

The image shows a close-up view of a person's face seen through a window or porthole. The face appears to be in profile, with the person's hand covering their mouth or eyes. The image has a grainy, black and white aesthetic, giving it a somewhat distorted or surreal quality.

Created by claude-3-opus-20240229 on 2024-12-29

The black and white image shows a person looking out the window of what appears to be an airplane or vehicle. The window has a rounded rectangular shape. The person has their hand raised near their face, possibly in a startled or distressed gesture. The grainy, high-contrast nature of the photograph gives it a documentary or candid feeling, capturing a fleeting moment of the person's emotional state while traveling.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This is a black and white photograph taken through an airplane window. The image shows a passenger appearing to be asleep or resting against the window, with their head tilted back. The composition creates an intimate, candid moment framed by the oval shape of the aircraft window. The lighting and grainy texture of the black and white photography adds to the artistic and documentary feel of the image.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2025-05-24

The image depicts a woman looking out of an airplane window, with her hand raised to her face. The woman has dark hair and is wearing a light-colored top, along with a bracelet on her right wrist. She appears to be gazing upwards, possibly at the sky or clouds outside the plane.

The window itself is rectangular with rounded corners, featuring a thick frame that is likely part of the airplane's structure. The surrounding area of the plane is visible, with a darker section below the window and a lighter section above it. The overall atmosphere suggests that the woman is on a flight, possibly during takeoff or landing, given her gaze upwards.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2025-05-24

The image depicts a black and white photograph of a person's head and hand pressed against the window of a train car. The person is wearing a hat and has their right hand pressed against the window, with their fingers spread apart. The window is small, rounded, and has a thick frame. The background of the image is the exterior of the train car, which is made of metal or another dark material. The overall atmosphere of the image suggests that the person is trying to get someone's attention or is looking out at something outside the train.

Amazon Nova

Created by amazon.nova-pro-v1:0 on 2025-05-24

The image is a black-and-white photograph of a person inside a train. The person is seen through the window of the train, with their face partially visible. The person's right hand is on their mouth, and they are looking out of the window. The person is wearing a ring on their left hand and a bracelet on their right hand. The train's window is open, and the sunlight is shining through it.

Created by amazon.nova-lite-v1:0 on 2025-05-24

The image is a black-and-white photograph of a person inside a vehicle, possibly a train, looking out of the window. The person is a woman, and she is sitting inside the vehicle, with her hand on her mouth and her eyes wide open, possibly in surprise or shock. The window is open, and the view outside is blurry, making it difficult to identify the surroundings. The woman's hair is messy, and she is wearing a bracelet on her wrist. The image has a grainy texture, which adds to the sense of nostalgia or old-fashioned feel.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-22

Here's a description of the image:

The image is a black and white photograph featuring a woman looking out of a train or airplane window. The window is a rounded rectangular shape. She has her hand raised to her mouth, with her fingers touching her lips. Her gaze is directed upwards, suggesting she's looking at something above. Her hair appears to be styled. The surrounding structure is dark, likely the side of the vehicle. The overall tone is grainy, indicative of the age of the photo.

Created by gemini-2.0-flash on 2025-05-22

A monochrome photograph shows a woman looking out of a rectangular window, seemingly on a train or plane. Her face is tilted upwards, her eyes gazing intently at something above her. Her right hand is raised, with her fingers lightly touching her lips, possibly in a gesture of contemplation or disbelief. She appears to be wearing a bracelet on her wrist. The image has a grainy texture, giving it a vintage feel. The window frame is a light color, contrasting with the darker, textured surface surrounding it.

Mistral Pixtral

Created by us.mistral.pixtral-large-2502-v1:0 on 2025-05-24

The image is a black-and-white photograph showing a person waving through an airplane window. The person's face is partially obscured by their hand, which is raised in a wave. The window frame is visible, and the background outside the window appears to be blurry, possibly indicating the plane is in motion or the photo was taken from a distance. The person seems to be wearing a ring on their ring finger. The overall tone of the image suggests a moment of departure or farewell.