Machine Generated Data

Tags

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

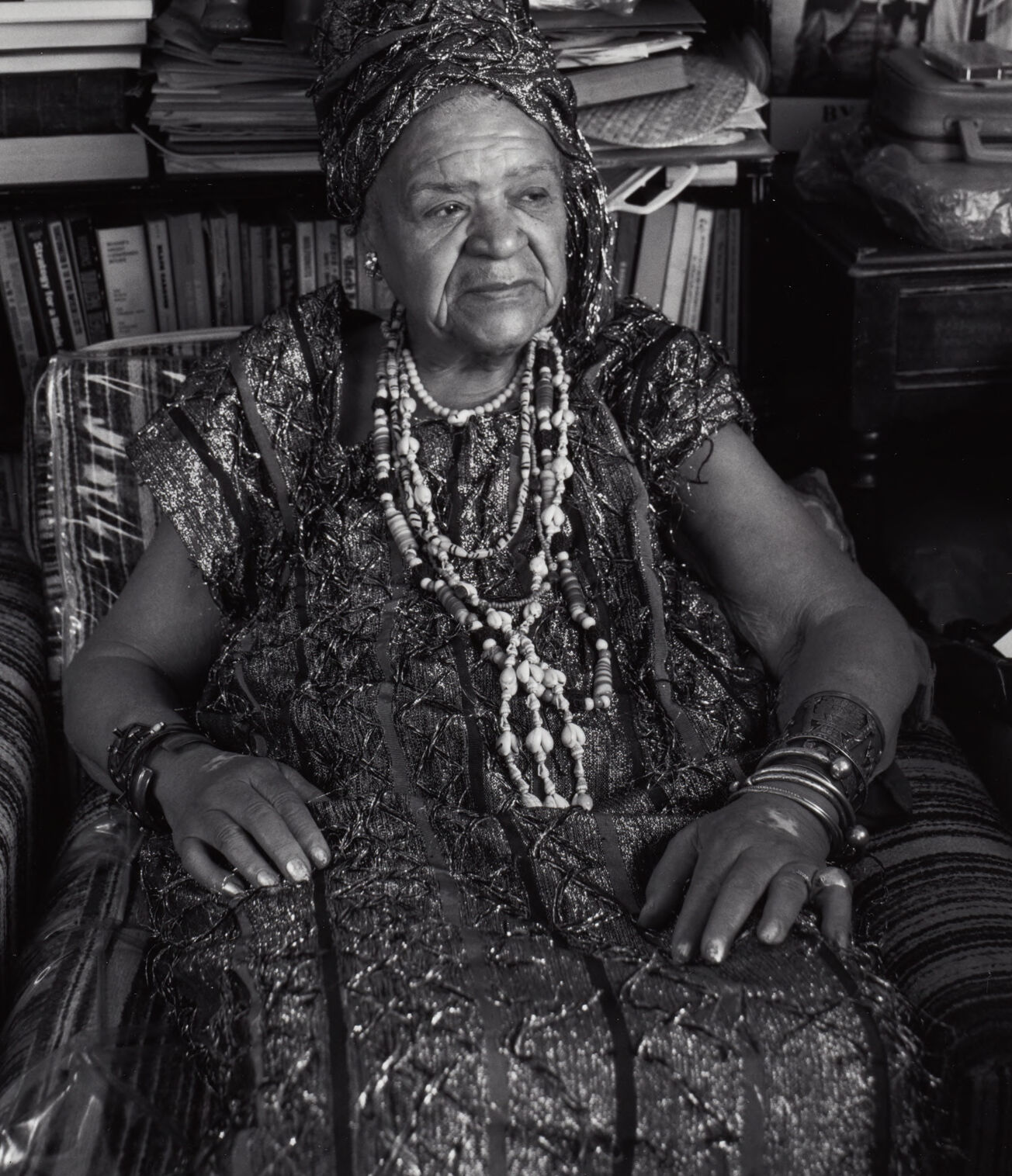

| Attribute | Estimate |
| --- | --- |
| Age | 57-77 |
| Gender | Female, 54.3% |
| Happy | 7.2% |
| Sad | 5.9% |
| Calm | 68.3% |
| Confused | 2.2% |
| Disgusted | 6.1% |
| Surprised | 2.7% |
| Angry | 7.7% |

Feature analysis

Amazon

| Feature | Confidence |
| --- | --- |
| Person | 98.7% |

Categories

Imagga

| Category | Confidence |
| --- | --- |
| interior objects | 87.2% |
| paintings art | 9.5% |
| people portraits | 0.9% |
| streetview architecture | 0.9% |
| pets animals | 0.7% |
| food drinks | 0.7% |
| cars vehicles | 0.1% |
| text visuals | 0.1% |

Captions

Microsoft

Created on 2018-02-10

| Caption | Confidence |
| --- | --- |
| Malcolm Little et al. sitting in a room | 77.9% |
| Malcolm Little et al. in a room | 77.8% |
| Malcolm Little sitting in a room | 77.7% |

OpenAI GPT

Created by gpt-4o-2024-05-13 on 2024-12-30

The image depicts a person seated on a striped armchair in a cluttered room. The person is dressed in ornate traditional attire, adorned with numerous strands of beads and bracelets. The room features a bookshelf filled with books and various items, additional artwork, and various objects such as a wine bottle and a cup on a shelf. There are multiple framed photos and paintings on the wall behind the person, as well as additional decorative items and a suitcase on another shelf to the right. The atmosphere appears busy and filled with eclectic cultural and personal artifacts.

Created by gpt-4 on 2024-11-19

This black and white photograph depicts a person dressed in an ornate and patterned garment, adorned with an abundance of beads and bracelets. The attire suggests a cultural or traditional significance. They are seated in a room that appears to be filled with an eclectic collection of items, including books, artworks, photographs, and various decorative objects, which collectively create a rich, textured backdrop. The setting has the appearance of a personal space or a studio, reflecting a sense of individuality and perhaps artistic or intellectual pursuits, given the visible presence of books and artwork. There is also a visual contrast between the patterns and textures of the clothing and the surrounding environment, adding to the intricate composition of the image.

Anthropic Claude

Created by claude-3-5-sonnet-20241022 on 2024-12-26

This is a striking black and white photograph showing an elderly woman seated in an armchair, wearing elaborate traditional African-inspired attire. She's dressed in a richly textured, patterned dress or robe, with a matching head wrap, and adorned with multiple strands of beaded necklaces and bracelets. The setting appears to be a cluttered but lived-in space, with bookshelves, stacks of papers, photographs, and various artifacts visible in the background. The chair she sits in has a striped pattern, and the overall composition suggests this is someone of cultural significance, photographed in what appears to be their personal space. The photograph has a documentary quality to it, capturing both the subject's dignity and the cultural richness of their environment.

Created by claude-3-haiku-20240307 on 2024-11-19

The image depicts an elderly woman sitting in a chair in what appears to be her home. She is wearing traditional-looking jewelry and clothing, and the background shows bookshelves, artwork, and other personal effects, suggesting this is her living space. The image has a sense of depth and perspective, creating an intimate and introspective atmosphere. The black and white format adds a timeless quality to the scene.

Meta Llama

Created by us.meta.llama3-2-90b-instruct-v1:0 on 2024-12-24

The image is a black-and-white photograph of an elderly woman sitting in a chair, surrounded by various objects and artwork. The woman is dressed in a dark-colored dress with a patterned headscarf and multiple necklaces. She sits in a striped armchair, with her hands resting on the armrests. Behind her, there are several shelves filled with books, objects, and artwork. The shelves appear to be cluttered, with items stacked haphazardly. On the top shelf, there is a framed portrait of a woman, a doll, and other objects. Below that, there are more books and objects, including a framed photograph of a man. To the right of the woman, there is a small table with a vase of flowers and a mug. On the wall behind her, there are several pieces of artwork, including a painting of a man's face and a sculpture of a human head. The overall atmosphere of the image suggests that the woman is sitting in her home, surrounded by her personal belongings and treasures. The cluttered shelves and artwork on the walls give the impression of a cozy and eclectic space.

Created by us.meta.llama3-2-11b-instruct-v1:0 on 2024-11-30

The image is a black-and-white photograph of an elderly woman sitting in a chair, surrounded by various objects and artwork. The woman is wearing a head wrap and a dress with a patterned design. She has multiple necklaces and bracelets on, and her hands are resting on her lap. The background of the image features a bookshelf filled with books, as well as several framed photographs and other decorative items. The overall atmosphere of the image suggests a cozy and intimate setting, possibly a home or office. The presence of the woman's jewelry and the artwork in the background adds a touch of elegance and sophistication to the scene.