Machine-Generated Data

Face analysis

AWS Rekognition

| Attribute | Value |
|---|---|
| Age | 26-43 |
| Gender | Female, 54.8% |
| Happy | 45.8% |
| Confused | 45.8% |
| Sad | 46.1% |
| Angry | 46.8% |
| Calm | 48.3% |
| Disgusted | 45.5% |
| Surprised | 46.5% |

Feature analysis

Amazon

| Feature | Confidence |
|---|---|
| Handbag | 100% |

Categories

Imagga

| Category | Confidence |
|---|---|
| people portraits | 66.9% |
| paintings art | 24% |
| events parties | 8.3% |
| streetview architecture | 0.3% |
| nature landscape | 0.2% |
| pets animals | 0.1% |
| food drinks | 0.1% |

Captions

Microsoft

Created on 2018-03-23

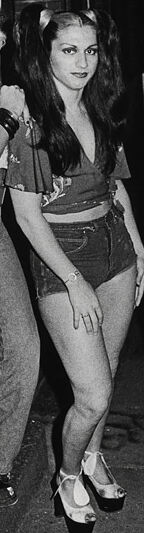

| Caption | Confidence |
|---|---|
| Dale Bozzio et al. posing for a photo | 96.4% |
| Dale Bozzio et al. posing for the camera | 96.3% |
| Dale Bozzio et al. posing for a picture | 96.2% |

Clarifai

Created by general-english-image-caption-blip on 2025-05-11

| Caption | Confidence |
|---|---|
| a photograph of three women standing next to each other on a sidewalk | -100% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-31

The image depicts three women posing together in what appears to be a dark, dimly lit alley or doorway. The women are dressed in stylish outfits typical of the 1970s era, with flared pants, platform shoes, and voluminous hairstyles. They seem to be in a celebratory mood, with expressions of joy or excitement on their faces. The black and white photograph lends an atmospheric, vintage feel to the scene.

Created by claude-3-opus-20240229 on 2024-12-31

The black and white image shows three young women outside at night. They are dressed up, likely for a night out, wearing fashionable outfits including cropped tops and high-waisted, wide-legged pants or skirts. One woman is carrying a large handbag. They have styled hair and appear to be talking and laughing together as they walk down what looks like an alleyway or narrow street with old wooden doors or shutters in the background. The grainy, high-contrast style suggests it may be an artistic fashion photograph from a past decade, likely the 1970s based on the clothing and hairstyles.

Created by claude-3-5-sonnet-20241022 on 2024-12-31

This is a black and white photograph from what appears to be the 1970s, showing three women standing together at night. The image has a casual, candid quality to it. The women are dressed in the fashion of the era: two are wearing wide-leg pants while the one in the middle is wearing shorts or a brief outfit. They're all wearing platform shoes, which were very popular during that time period. One woman carries a large shoulder bag. The setting appears to be outside a building with brick walls and window panes visible. The lighting creates a nightlife atmosphere, suggesting this might have been taken outside a club or entertainment venue. The photograph captures a moment of what seems to be a fun night out, with the subjects appearing to be in good spirits.

Google Gemini

Created by gemini-2.0-flash-lite on 2025-05-09

Here's a description of the image:

Overall Impression: The image is a black and white photograph of three individuals, likely outside at night. The style and clothing suggest a scene from the late 1970s or early 1980s. The image has a candid, almost documentary-like feel.

People:

- There are three individuals in the image. It appears they are all in drag, wearing wigs, makeup, and clothing.

- The person on the left has a short, curled blonde wig, wearing a t-shirt, flared jeans, and a large shoulder bag.

- The person in the middle has dark hair in pigtails and is wearing a cropped top and shorts.

- The person on the right has wavy, long hair and is wearing a simple black top and wide-legged pants. They hold a small purse and appear to be mid-speech.

Setting:

- The background is mostly dark, indicating it is night.

- A building or structure with a door or window is visible on the left.

- The ground appears to be a sidewalk or a similar paved surface.

- A fire hydrant and a trash bag are visible on the right.

Atmosphere: The image captures a sense of nightlife, freedom, and self-expression. It likely depicts a moment of social interaction outside of a venue. The lighting and composition give a gritty, yet intimate feeling.

Created by gemini-2.0-flash on 2025-05-09

Here's a description of the image:

This is a black and white image showing three people posing on the street at night. The lighting creates a slightly dramatic, grainy effect.

The person on the left has short, blonde hair styled in waves. They are wearing a t-shirt and wide-legged jeans, accessorized with a chunky bracelet and a large bag slung over their shoulder.

The person in the middle has dark hair styled in pigtails. They are wearing a short, wrap-style top and denim shorts, along with platform shoes.

The person on the right has long, wavy hair and appears to be talking or singing with their mouth open. They are wearing a darker top and high-waisted pants. They also have a small bag over their shoulder.

The background includes a building with dark windows on the left and blurred lights on the right, indicating an urban setting. The overall aesthetic suggests a snapshot of nightlife from a past era.