Machine Generated Data

Tags

Amazon

created on 2019-04-05

| Person | 99.7 |
| Human | 99.7 |
| Person | 99.7 |
| Person | 99.7 |
| Person | 98.6 |
| Person | 98.6 |
| Bike | 97.6 |
| Vehicle | 97.6 |
| Transportation | 97.6 |
| Bicycle | 97.6 |
| Person | 97.3 |
| Bicycle | 94.5 |
| Machine | 91.7 |
| Wheel | 91.7 |
| Person | 91 |
| Motorcycle | 89 |
| Person | 88.1 |
| Wheel | 87.9 |
| Musical Instrument | 82.7 |
| Musician | 82.7 |
| Apparel | 80.4 |
| Clothing | 80.4 |
| Wheel | 77.2 |
| People | 71.4 |
| Bicycle | 70.7 |
| Person | 68.3 |
| Face | 67.9 |
| Wheel | 66.7 |
| Leisure Activities | 66.5 |
| Music Band | 66.3 |
| Advertisement | 64.9 |
| Spoke | 64.4 |
| Poster | 64.3 |
| Photo | 63.3 |
| Photography | 63.3 |
| Portrait | 63.3 |
| Collage | 59.9 |
| Guitar | 58.8 |
| Person | 56.1 |
| Skin | 56 |
| Shorts | 55.3 |
| Motorcycle | 53.7 |

Clarifai

created on 2018-03-22

Imagga

created on 2018-03-22

| brass | 45.9 |
| wind instrument | 43 |
| musical instrument | 38.5 |
| cornet | 29.3 |
| sax | 25.9 |
| man | 24.2 |
| male | 21.4 |
| person | 20.9 |
| people | 19.5 |
| trombone | 18.8 |
| city | 18.3 |
| adult | 16.9 |
| portrait | 16.2 |
| urban | 15.7 |
| guitar | 14.4 |
| device | 13.9 |
| black | 13.8 |
| music | 13 |
| street | 12.9 |
| men | 12.9 |
| bicycle | 12.5 |
| stringed instrument | 11.6 |
| human | 10.5 |
| standing | 10.4 |
| business | 10.3 |
| building | 10.2 |
| lifestyle | 10.1 |
| sport | 10 |
| attractive | 9.8 |
| old | 9.7 |
| youth | 9.4 |
| musician | 9.3 |
| playing | 9.1 |
| fashion | 9 |
| posing | 8.9 |
| businessman | 8.8 |
| looking | 8.8 |
| bike | 8.8 |
| architecture | 8.6 |
| outdoor | 8.4 |
| outdoors | 8.2 |
| protection | 8.2 |
| happy | 8.1 |
| to | 8 |
| horn | 7.9 |
| guitarist | 7.9 |
| play | 7.7 |
| travel | 7.7 |
| wall | 7.7 |
| window | 7.5 |
| silhouette | 7.4 |
| transport | 7.3 |
| danger | 7.3 |
| smiling | 7.2 |
| sexy | 7.2 |
| road | 7.2 |

Google

created on 2018-03-22

| photograph | 94.8 |
| black and white | 90.6 |
| vehicle | 86.8 |
| bicycle | 85.4 |
| snapshot | 81.8 |
| monochrome photography | 73.4 |
| sports equipment | 65.4 |
| monochrome | 63.8 |
| recreation | 63.3 |
| vintage clothing | 51.1 |

Color Analysis

Face analysis

Amazon

Microsoft

AWS Rekognition

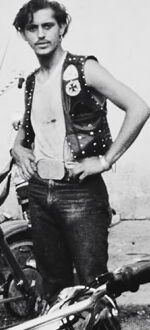

| Age | 26-43 |

| Gender | Male, 53.8% |

| Sad | 45.7% |

| Confused | 45.8% |

| Disgusted | 45.2% |

| Surprised | 45.5% |

| Calm | 51.8% |

| Angry | 45.7% |

| Happy | 45.2% |

AWS Rekognition

| Age | 38-59 |

| Gender | Male, 55% |

| Angry | 47.5% |

| Calm | 49.9% |

| Disgusted | 45.1% |

| Sad | 46.7% |

| Surprised | 45.1% |

| Confused | 45.7% |

| Happy | 45.1% |

AWS Rekognition

| Age | 27-44 |

| Gender | Male, 54.9% |

| Angry | 45.8% |

| Calm | 46.2% |

| Sad | 52.4% |

| Disgusted | 45.1% |

| Surprised | 45% |

| Confused | 45.4% |

| Happy | 45% |

AWS Rekognition

| Age | 11-18 |

| Gender | Male, 50.4% |

| Calm | 49.9% |

| Angry | 49.6% |

| Happy | 49.5% |

| Disgusted | 49.5% |

| Surprised | 49.5% |

| Confused | 49.6% |

| Sad | 49.9% |

AWS Rekognition

| Age | 26-43 |

| Gender | Female, 50.8% |

| Sad | 48% |

| Calm | 45% |

| Confused | 45% |

| Angry | 52% |

| Surprised | 45% |

| Happy | 45% |

| Disgusted | 45% |

AWS Rekognition

| Age | 26-43 |

| Gender | Female, 50.4% |

| Disgusted | 49.5% |

| Confused | 49.5% |

| Sad | 50.3% |

| Surprised | 49.5% |

| Calm | 49.6% |

| Angry | 49.6% |

| Happy | 49.5% |

AWS Rekognition

| Age | 48-68 |

| Gender | Male, 50.5% |

| Calm | 50.1% |

| Disgusted | 49.5% |

| Sad | 49.7% |

| Happy | 49.5% |

| Surprised | 49.5% |

| Angry | 49.5% |

| Confused | 49.5% |

AWS Rekognition

| Age | 35-53 |

| Gender | Male, 54.9% |

| Disgusted | 45% |

| Sad | 50.9% |

| Confused | 45.3% |

| Angry | 45.1% |

| Calm | 48.6% |

| Happy | 45.1% |

| Surprised | 45.1% |

AWS Rekognition

| Age | 23-38 |

| Gender | Male, 50.5% |

| Happy | 49.5% |

| Calm | 49.8% |

| Angry | 49.8% |

| Disgusted | 49.6% |

| Sad | 49.8% |

| Surprised | 49.5% |

| Confused | 49.5% |

Microsoft Cognitive Services

| Age | 27 |

| Gender | Female |

Google Vision

| Surprise | Very unlikely |

| Anger | Very unlikely |

| Sorrow | Very unlikely |

| Joy | Very unlikely |

| Headwear | Very unlikely |

| Blurred | Very unlikely |

Feature analysis

Categories

Imagga

| people portraits | 93.3% |
| streetview architecture | 3.1% |
| paintings art | 2.2% |
| events parties | 0.6% |
| nature landscape | 0.3% |
| pets animals | 0.2% |
| interior objects | 0.1% |
| text visuals | 0.1% |

Captions

Microsoft

created on 2018-03-22

| a vintage photo of a group of people posing for the camera | 88.9% |
| a group of people posing for a photo | 88.8% |
| a vintage photo of a group of people posing for a picture | 88.7% |

Anthropic Claude

Created by claude-3-haiku-20240307 on 2024-12-29

The image shows a group of people standing in front of a building with the sign "Outlaw's Territory" above the entrance. The people appear to be a group of motorcycle enthusiasts or biker gang members, wearing leather jackets and posing in front of various motorcycles. The image has a gritty, black and white aesthetic that suggests it may be from an earlier era. The overall scene conveys a sense of the outlaw or rebel culture associated with motorcycle gangs.

Created by claude-3-5-sonnet-20241022 on 2024-12-29

This black and white photograph shows a motorcycle club hangout with "OUTLAW'S TERRITORY" painted on the white wall above. Several motorcycles are parked in the foreground on what appears to be a dirt or gravel surface with some litter scattered about. A group of people wearing motorcycle club vests and casual attire are positioned both in front of and in the doorway of the two-story building. The image has a gritty, documentary-style quality that captures the motorcycle club culture, likely from the 1960s or 1970s. The building features several windows and appears somewhat weathered, adding to the overall raw aesthetic of the scene.

Text analysis

Amazon